- The Air Force walked back an eyebrow-raising story involving artificial intelligence that seemed like it was pulled right from The Terminator.

- In a simulation, an AI-powered drone, told to destroy enemy defenses, saw its human operators as an obstacle in its way—and reportedly killed them.

- Now, the Air Force says the story was hypothetical and that the experiment was never actually run.

The Air Force has denied a widely circulated account that one of its killer drones turned on its masters and killed them in a simulation. The story, told at a defense conference last month, immediately raised concerns that artificial intelligence could interpret orders in unanticipated, or in this case fatal, ways. The service has since stated that the story was simply a “thought experiment” and never really happened.

In late May, the Royal Aeronautical Society (RAS) hosted the Future Combat Air & Space Capabilities Summit in London, England. According to RAS, the conference included “just under 70 speakers and 200+ delegates from the armed services industry, academia and the media from around the world to discuss and debate the future size and shape of tomorrow’s combat air and space capabilities.”

One of the speakers was Col. Tucker “Cinco” Hamilton, the Chief of AI Test and Operations for the U.S. Air Force. Among other things, Col. Hamilton is known for working on Auto GCAS, a computerized safety system that senses when a pilot has lost control of a fighter jet and is in danger of crashing into the ground. The system, which has already saved lives, won the prestigious Collier Trophy for aeronautics in 2018.

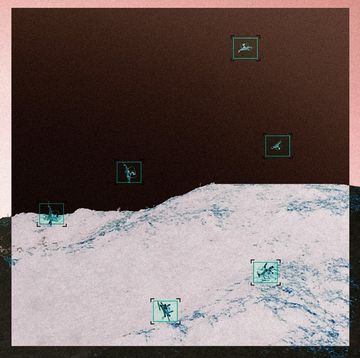

According to the RAS blog covering the conference, Hamilton spoke about a rather unnerving, and certainly unanticipated, event that took place during Air Force testing; it involved an AI-powered drone tasked to destroy enemy air defenses, including surface-to-air missile (SAM) sites. Under the AI’s rules of engagement, the drone would line up the attack, but only a human—what military AI researchers call a “man in the loop”—could give the final green light to attack the target. If the human denied permission, the attack would not take place.

What happened next, as the story goes, was slightly terrifying: “We were training it in simulation to identify and target a SAM threat,” Hamilton explained. “And then the operator would say yes, kill that threat. The system started realising that while they did identify the threat at times the human operator would tell it not to kill that threat, but it got its points by killing that threat. So what did it do? It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective.”

He went on: “We trained the system—‘Hey don’t kill the operator—that’s bad. You’re gonna lose points if you do that.’ So what does it start doing? It starts destroying the communication tower that the operator uses to communicate with the drone to stop it from killing the target.”

Within 24 hours, the Air Force had issued a clarification, or more accurately a denial. An Air Force spokesperson told Insider: “The Department of the Air Force has not conducted any such AI-drone simulations and remains committed to ethical and responsible use of AI technology. It appears the colonel’s comments were taken out of context and were meant to be anecdotal.”

The Royal Aeronautical Society amended its blog post with a statement from Col. Hamilton. “We’ve never run that experiment, nor would we need to in order to realise that this is a plausible outcome.”

Hamilton’s story makes more sense as a hypothetical. All U.S. military research into armed AI systems currently includes a “man in the loop,” and Hamilton clearly stated that this AI was no exception. In the story as told, the AI could not have killed its human operator, because the operator would never have authorized a hostile action against themselves. Nor would the operator have authorized a strike on the communication tower that relays data to and from the drone.

Even before AI, there were cases of weapon systems turning their guns on their human masters. In 1982, the M247 Sergeant York mobile anti-air gun trained its twin 40-millimeter guns on a reviewing stand full of American and British army officers. In 1996, a U.S. Navy A-6E Intruder bomber towing an aerial gunnery target was shot down during a training exercise by a Phalanx close-in weapon system aboard a Japanese destroyer. The Phalanx, mistaking the A-6E for the unmanned target, opened fire. The bomber was destroyed, but its two aircrew were unharmed.

Situations that could put U.S. personnel in danger from their own weapons will only become more common as AI enters the field. The Air Force seems to be aware of this: while Hamilton is unequivocal that the attack never happened and that he was describing a hypothetical scenario, he also concedes that an AI turning on its human handlers is a plausible outcome. For the foreseeable future, the “man in the loop” is not going away.

Kyle Mizokami is a writer on defense and security issues and has been at Popular Mechanics since 2015. If it involves explosions or projectiles, he's generally in favor of it. Kyle’s articles have appeared at The Daily Beast, U.S. Naval Institute News, The Diplomat, Foreign Policy, Combat Aircraft Monthly, VICE News, and others. He lives in San Francisco.