SECURITY

Google LLC’s new application of generative AI to a tried-and-true cybersecurity method called fuzzing could help elevate it into the top tray of enterprises’ defensive tool chests.

Fuzzing is the process by which security researchers use automated tools to cycle rapidly through random or malformed data inputs, trying to make the target code crash or produce unexpected results that point to a potential security flaw. Automation is key because testers need to run through far more input combinations than anyone could try by hand.

Part of the process is monitoring how the code behaves as it handles these inputs. That’s especially important for software that accepts external user input, which hackers often try to exploit to gain control over systems.
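To make the idea concrete, here is a minimal sketch of a purely random fuzzer in Python. The target `parse_record` and its planted bug are hypothetical, invented for illustration: it trusts a one-byte length header without checking it against the actual payload, loosely the kind of mistake behind buffer over-read bugs. The harness treats `ValueError` as a graceful rejection and records any other exception as a potential flaw.

```python
import random

def parse_record(data: bytes) -> int:
    """Hypothetical target: a 1-byte length header followed by a payload.

    Planted bug: the header is trusted without checking it against the real
    payload size. In C this would read past the buffer; Python raises
    IndexError instead."""
    if not data:
        raise ValueError("empty input")  # expected, handled rejection
    length = data[0]
    payload = data[1:]
    if length > 0:
        return payload[length - 1]  # bug: no check that length <= len(payload)
    return 0

def fuzz(target, iterations: int = 10_000, max_len: int = 16, seed: int = 0):
    """Feed random byte strings to `target` and collect the inputs that crash it."""
    rng = random.Random(seed)  # fixed seed so a run can be replayed exactly
    crashes = []
    for _ in range(iterations):
        size = rng.randrange(max_len + 1)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except ValueError:
            continue  # input rejected gracefully; not a bug
        except Exception as exc:  # unexpected failure: potential security flaw
            crashes.append((data, type(exc).__name__))
    return crashes

if __name__ == "__main__":
    found = fuzz(parse_record)
    print(f"{len(found)} crashing inputs found")
    if found:
        data, kind = found[0]
        print(f"first crash: {kind} on input {data!r}")
```

The fixed seed makes runs reproducible, so a crashing input can be replayed while debugging. The engines OSS-Fuzz relies on, such as libFuzzer, AFL++ and honggfuzz, go further than this sketch: they track which code paths each input exercises and mutate the inputs that reach new ones, which is how they get into code that random bytes rarely touch.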

Last week, Google announced that it is improving fuzzing by employing large language models, or LLMs, and other AI techniques. The researchers are focusing these improvements on OSS-Fuzz, a seven-year-old project.

The combination of LLMs and fuzzing could be a potent development. That’s because these AI-based tools can generate a large number of test cases quickly, saving manpower for more important tasks and making security more proactive.

“There is a lot of progress in finding and fixing bugs with LLMs which looks promising,” Dudu Mimran, chief technology officer of Deutsche Telekom Innovation Labs at Ben Gurion University in Beersheva, Israel, told SiliconANGLE. “A good thing as it will allow better open-source hygiene while LLMs are also powering cyber offenders in many ways which will introduce vulnerable code.”

Fuzzing tools have been around for decades and are widely available, especially from open-source repositories. They have helped locate hundreds of vulnerabilities over the years, and new tools keep arriving, including SnapChange, released by Amazon Web Services Inc. earlier this year. Microsoft Corp. has offered OneFuzz, which provides fuzzing as a hosted service, for several years now.

Google works on other fuzzing tools besides OSS-Fuzz. Two years ago, it announced ClusterFuzzLite, a companion to OSS-Fuzz that helps integrate fuzzing into development workflows. Another is its Atheris Python fuzzer, used to find bugs in code written in that language. All are free to use.

Fuzzing has gotten plenty of attention over the years. It’s a typical strategy in security contests such as capture-the-flag events, where teams compete to find bugs and hidden files for prizes.

One often-cited case is Heartbleed, the 2014 vulnerability in the OpenSSL library’s implementation of the TLS heartbeat extension. Many security researchers have said that fuzzing could have found this problem and avoided much of the pain that followed.

Some of the more notable contests are sponsored by the U.S. Defense Department through its Defense Advanced Research Projects Agency, or DARPA, software challenges. The most recent one focuses on using AI to better secure critical infrastructure.

Google’s announcement combines OSS-Fuzz with AI to improve its automation. Google says OSS-Fuzz runs continuously across more than 1,000 open-source projects, and the company wanted to scale it along two dimensions: making the tool available to more projects and using better automation to cover more of the code base within each project.

Google’s blog post states that OSS-Fuzz reaches only about a third of the code in the projects it covers, “meaning that a large portion of our users’ code remains untouched by fuzzing.” The team behind OSS-Fuzz wanted to use AI to write the additional fuzz targets needed to reach the rest.

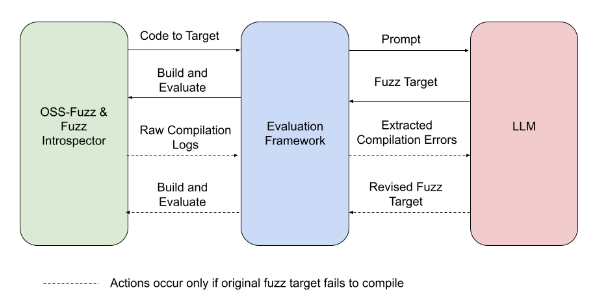

The team built an automated evaluation framework to guide development of these enhancements. The accompanying illustration of the process flow shows where LLMs fit in.
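The fuzz targets Google wants LLMs to write are small pieces of glue code that pass fuzzer-generated bytes to the code under test and decide which errors count as normal rejections. (In C and C++, libFuzzer’s entry point for such a target is a function named `LLVMFuzzerTestOneInput`.) Below is a minimal Python sketch, assuming Python’s built-in `json` module as the code under test; the names `fuzz_target_json` and `run_target` are hypothetical, and the simple random driver only stands in for a real fuzzing engine.

```python
import json
import random

def fuzz_target_json(data: bytes) -> None:
    """Hypothetical fuzz target: hand raw fuzzer bytes to json.loads.

    Malformed JSON is the expected outcome for most inputs, so
    JSONDecodeError is swallowed; any other exception escapes and is
    reported by the driver as a finding."""
    text = data.decode("utf-8", errors="replace")  # never raises on bad bytes
    try:
        json.loads(text)
    except json.JSONDecodeError:
        pass  # rejected input, not a bug

def run_target(target, iterations: int = 5_000, max_len: int = 32, seed: int = 0):
    """Minimal random driver standing in for a real engine such as libFuzzer."""
    rng = random.Random(seed)
    findings = []
    for _ in range(iterations):
        size = rng.randrange(max_len + 1)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except Exception as exc:  # anything the target did not anticipate
            findings.append((data, exc))
    return findings

if __name__ == "__main__":
    print(f"{len(run_target(fuzz_target_json))} findings")
```

Against a mature parser like this one, the expected result is no findings. The target stays deliberately small: its only jobs are to reach the code under test and to separate expected errors from real failures, while the engine supplies the inputs.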

“We’re working towards a future of personalized vulnerability detection with little manual effort from developers,” the researchers wrote. “With the addition of LLM generated fuzz targets, OSS-Fuzz can help improve open source security for everyone.”

There is one major drawback, however. The same fuzzing tools are also available to hackers, who can use them to uncover exploitable vulnerabilities or to test their own malware. What if bad actors use LLMs to get ahead of defenders and harden their malware?

This issue was raised by Daniel DiFulvio in a blog post back in 2021, when he said, “If a cybercriminal gets a hold of AI fuzzing data for a popular software, for instance, they can quickly identify its security weaknesses and use these to launch attacks.”

It could happen, although given the training cost of LLMs and the specialized nature of building these models, it will take considerable effort. But the bad guys have already taken baby steps toward leveraging them.

Last week, Chainguard Inc. Chief Executive Dan Lorenc wrote on his LinkedIn page that someone had used automated routines to flood numerous open-source projects with bug reports. “Someone is clearly scraping old issues and commits to file these in an automated fashion, without ever getting maintainers involved.”

Some security experts say these bug reports are a diversion and may not describe real security flaws. “It is the same old story of every new technology,” said Mimran. Automation has both good and bad effects.
