The Blessing of Dimensionality?! (Part 1)

Last Updated on December 11, 2023 by Editorial Team

Author(s): Avi Mohan, Ph.D.

Originally published on Towards AI.

The Blessing of Dimensionality?! (Part 1)

From Dartmouth to LISP

“We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer,” said the proposal. Little did John McCarthy and his colleagues know while writing that line, that their short summer project was about to set in motion one of the greatest technological revolutions humanity has ever seen.

In many ways, the 1956 Dartmouth Summer Project on Artificial Intelligence [1,2] can be considered the place where AI, including the name AI, took birth. Since its inception, two schools of thought have emerged, each with its own distinct approach to understanding and creating intelligent systems.

Symbolic AI: the first school

Arguably, the more “ancient” of the two is called Symbolic AI (the other being Connectionist AI). Very simply put, this approach involves

- creating a big database of objects (say, animals),

- defining rules to manipulate these objects (what makes an animal a mammal, a reptile or an insect?) and

- using the first two steps to make logical inferences (“Is a cat an insect?”)

More formally, the symbolic approach to AI emphasizes the use of symbols and rules to represent and manipulate knowledge. Unlike connectionist AI, which is inspired by the structure of the human brain, symbolic AI focuses on explicit representations of concepts and relationships.

In symbolic AI systems, knowledge is encoded in the form of symbols, which can represent objects, actions, or abstract concepts. These symbols are then manipulated using rules of inference, which allow the system to draw conclusions and make decisions. This rule-based reasoning enables symbolic AI systems to solve problems in a logical and structured manner.

Very simple example

- I could load an AI with the following database

Facts about animals: name, type, and characteristics

a. animal(cat, mammal, fur, four_legs).

b. animal(fish, aquatic, scales, no_legs).

c. animal(bird, avian, feathers, two_legs).

d. animal(snake, reptile, scales, no_legs). - Then program the following RULES for classification based on characteristics

a. mammal(X) :- animal(X, mammal, fur, four_legs).

b. aquatic(X) :- animal(X, aquatic, scales, no_legs).

c. avian(X) :- animal(X, avian, feathers, two_legs).

d. reptile(X) :- animal(X, reptile, scales, no_legs). - Following this, we have a simple expert system that can respond to different queries, such as

[a] Query: mammal(cat). #We’re asking if a cat is or isn’t a mammal.

Expected Response: true

[b] Query: mammal(fish)

Expected response: false.

Here’s a more detailed primer on how Symbolic AI systems work

Symbolic AI has been particularly successful in areas that require reasoning and planning, such as expert systems and game-playing. Successes include:

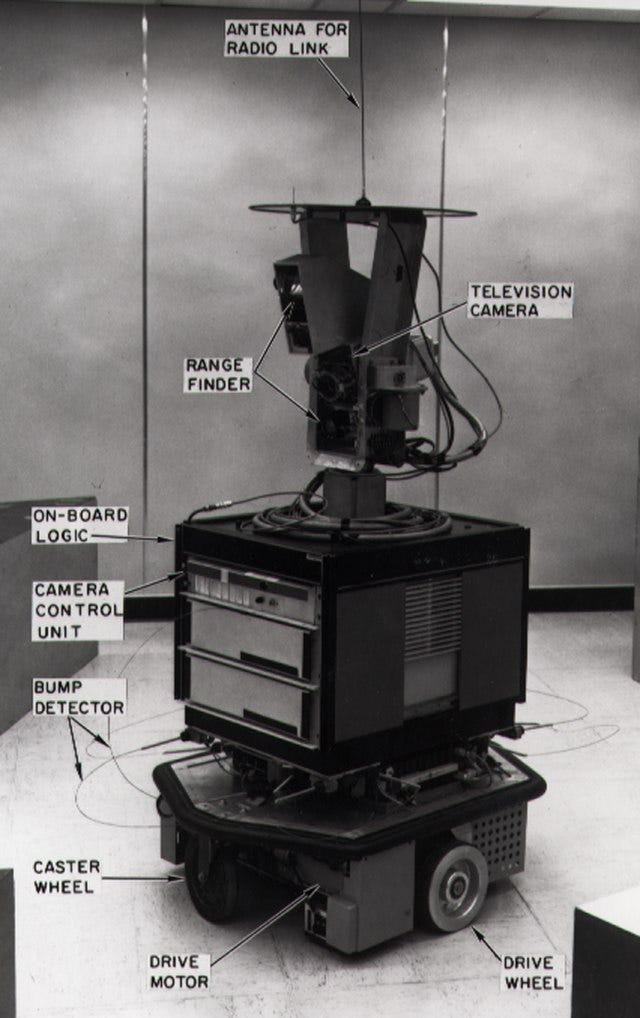

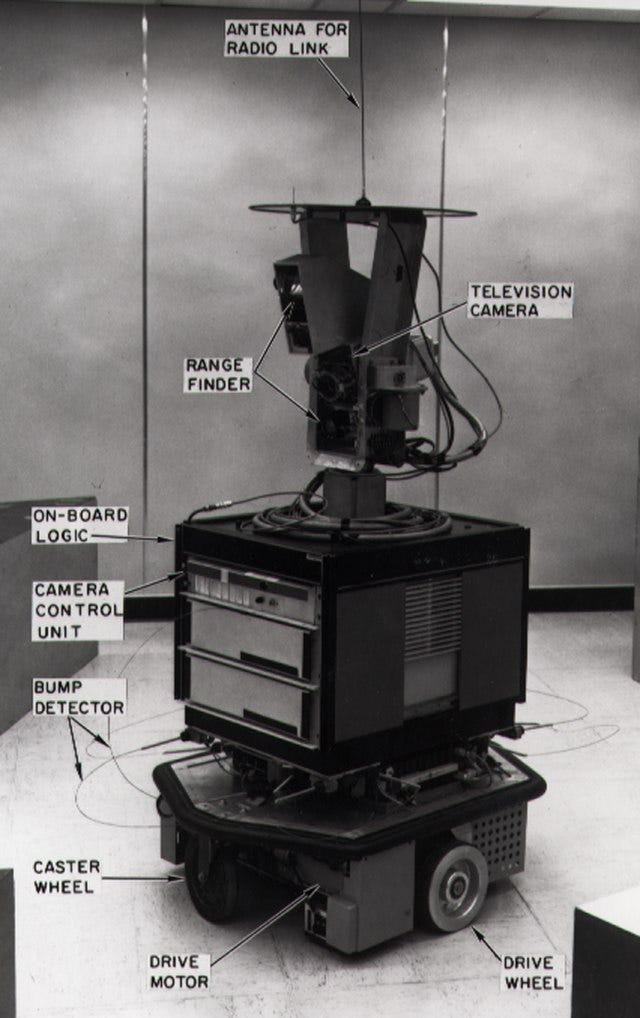

- Shakey the Robot (see the first figure) was built by SRI International, then, the Stanford Research Institute [5],

- expert systems such as the rule-based AI named “MYCIN,” developed at Stanford University in the 70s to diagnose and treat infections in humans, and

- ELIZA, one of the first natural language processing programs, was created by Joseph Weizenbaum at MIT.

Symbolic AI was, at least initially, exceptionally successful and employed in a wide range of applications. Indeed, entire families of formal languages, such as LISP (1950s) were created to facilitate thinking and coding for AI systems [3]. There are, however, some very fundamental flaws with this way of thinking about AI systems.

Some problems with Symbolic AI

- Handling Uncertainty

– Symbolic AI systems struggle with representing and reasoning about uncertainty.

– Real-world situations often involve incomplete or ambiguous information, and traditional symbolic approaches may struggle to handle uncertainty effectively.

– In domains where uncertainty is inherent, such as medical diagnosis or natural language understanding, symbolic AI may provide overly deterministic or inaccurate results. - (related) Knowledge Representation and Acquisition

– Symbolic AI systems rely on explicit representations of knowledge in the form of symbols and rules.

– This poses a challenge in capturing the vast and nuanced knowledge of the real world.

– A highly complex task such as autonomous driving, for example, simply cannot be represented as a set of “If-Then-Else” commands — how billions of such conditions will you code into the AI agent? - Lack of Learning and Adaptation

– There’s essentially no way to learn from new data and experience!

– The AI agent simply does whatever the programmer codes into it. Real world data can only be injected into the agent as the programmer’s knowledge of how said world works — which could be both very limited and biased.

These, and a few other rather serious handicaps, made progress in symbolic AI quite a frustrating endeavor. All this while however, a parallel line of thought had been quietly developing, supported by the researchers who, hearing Minsky and Papert’s XOR death knell for the Perceptron classifier [6], had responded with a defiant, “this ain’t over yet!”

Everything changed when the Connectionist Nation attacked…

The Connectionist approach to AI is (mostly) what you read about these days from self-driving Waymo cabs, to Apple’s facial recognition systems, to that robot army uncle Bob just told you about over Thanksgiving dinner.

The connectionist approach to artificial intelligence (AI) draws inspiration from the structure and function of the human brain, aiming to create intelligent systems by emulating the interconnectedness of neurons. Unlike symbolic AI, which relies on explicit rules and representations, connectionist AI utilizes computer programs called “Artificial Neural Networks” (ANNs) to learn from data and make decisions.

ANNs are composed of layers of interconnected artificial neurons, each receiving input signals, processing them, and transmitting output signals to other neurons. For example, on the right side of Fig. 4, each disc (blue, green or yellow) represents one “neuron.” These connections, or weights, are adjusted through a process called learning, allowing the ANN to identify patterns and relationships in the data. This learning ability enables connectionist AI systems to solve complex problems and make predictions based on new data, even without explicit programming.

Without getting into too much detail (and boy is there too much detail here) let us note that even by around 1990, Gradient Descent and Backpropagation had developed surprisingly well [4, 16] given the general lack of enthusiasm for the connectionist approach in the preceding decades. But attempts at solving more complex real-world problems using the connectionist approach were stymied by (1) poor computing hardware and (2) lack of training data.

Moore and Li to the rescue

By the late 2000s, Moore’s law had made enormous strides in helping solve the computing issue. Similarly, researchers like Fei Fei Li leveraged the vast amounts of image data available online to create gigantic data sets to help train ANNs (incidentally, Li’s creation of the ImageNet dataset is a saga exciting enough to merit its own article U+1F609). And when an AI solved¹ Li’s ImageNet challenge, connectionism emerged as the clear winner.

September 30th, 2012

Following what has now become famous as “The AlexNet moment,” (refer to [17, 15], or just listen to Pieter Abbeel talk for 5 mins), ANNs exploded into the limelight, revolutionizing within a few years, remarkably disparate fields like natural language processing, medicine, and robotics (Abbeel’s lab has famously been responsible for many of the robotics breakthroughs). But connectionist AI is no panacea.

Problems with Connectionist AI

Before you brush off the former approach as now being shambolic AI, let’s look at some issues that plague the latter.

- Lack of Explainability [18]

– Imagine that ANN shown in the previous figure, but with about 175 Billion discs instead of 11. How would one even begin to understand how this brobdingnagian monstrosity reasons? Most estimates put the number of GPT 3.5 has an estimated 175 billion parameters.

– Neural networks are often considered “black boxes” due to their complex architectures and large numbers of parameters.

– Obviously, in applications where understanding the rationale behind decisions is crucial (e.g., medical diagnosis, finance), the lack of explainability may limit the adoption of connectionist models. - Memory, Compute and Power

– ANNs require enormous amounts of power and computation both during the learning phase and for normal operation.

– This problem is so bad nowadays that most of the largest ANNs are being trained and operated by private companies because academic institutions simply cannot afford to foot the colossal electric bill or purchase enough processors to keep these behemoths crunching. - The Binding Problem [8]

– In the human brain, different regions process various aspects of sensory information, such as color, shape, and motion.

– The binding problem arises because the brain needs to integrate these distinct features into a cohesive perception of objects and scenes.

– For example, when you see a red ball rolling across a green field, your brain seamlessly combines information about color, shape, and motion to form a unified perception of a rolling red ball.

– Connectionist models often use distributed representations, where different features are encoded by the activation patterns of multiple neurons. While this allows for parallel processing and flexibility, it makes it challenging to explicitly link specific features together.

– While methods like attention have been used to address this issue, it is still far from solved, and one of the major drawbacks of the connectionist approach.

In the interest of brevity, I will limit myself to the above issues. We will also revisit them a bit later. For a more detailed discussion of the shortcomings of the connectionist approach, please check [9, Sec. 2.1.2].

It is, therefore, not surprising when researchers claim things along the lines of:

In our view, integrating symbolic processing into neural networks is of fundamental importance for realizing human-level AI, and will require a joint community effort to resolve. [8]

Can HDC, master of both elements, save us?

Hyperdimensional Computing (HDC) aka Vector Symbolic Architecture (VSA) brings together ideas from both the above approaches to help alleviate some of the problems we’ve discussed. The fundamental quantity at the heart of this third approach to AI, is called a “Hypervector,” which is just a vector in a very high (think R¹⁰⁰⁰⁰ U+1F62E) dimensional space.

Bind, bundle and permute [7, 19]

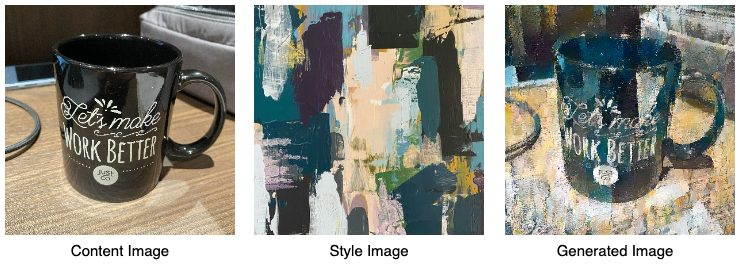

Very loosely speaking, these hypervctors are used to represent objects and relations between said objects (if this rings a U+1F514 you’re on the right track, symbolically speaking). For example, we could have a 10,000-dimensional hypervector α for “orange” and β for “color,” then the 10,000-dimensional vector obtained from elementwise multiplication, represented by γ = α ⊗ β represents “orange color.” In HDC jargon, this operation is called “binding.”

Combining two or more outputs of such binding operations through vanilla vector addition helps us superimpose multiple features. For example, if γ and δ represent “orange color” and “round shape” respectively, then γ+δ represents “orange color and round shape.” We are, of course, building towards a symbolic representation of an orange (U+1F34A). This operation is called “bundling.”

The third operation, called Permutation involves rearranging the entries of a hypervector. For example, if I begin with the vector x = [a,b,c], one permutation of x would be Πx = [b,c,a]. This operation is mainly used to maintain ordering, like a time sequence, for example, and outside the scope of an introductory article on the topic.

A True Child of the Symbolic and the Connectionist

Once we have a sufficiently rich representation of objects, fruits in our case, using this procedure, we have the HDC equivalent of a “database,” that can later be used to answer queries. This description of the general HDC process is quite loose and needs to be formalized. We will do that in Part 2 of this article. For now, let’s note that while strongly reminiscent of Symbolic AI, this procedure also incorporates some ideas of Connectionist AI.

For example, where in the 10,000 entries of γ are “orangeness” and “shape” stored? Similar to ANNS, the answer is across multiple coordinates. This distributed storage of information, called “Holographic Representation” makes HDC remarkably robust to errors, because it’s very hard to accidentally corrupt a significant proportion of the 10,000 entries. The other reason for this move to very high dimensional vectors is to preserve “orthogonality” — a slightly harder topic to grasp and will be dealt with in great detail in Part II of this article.

Where in the 10,000 entries of γ are “orangeness” and “shape” stored? Similar to ANNS, the answer is across multiple coordinates.

Moreover, as we will see in Part 2, these feature-representing hypervectors (γ+δ) can be constructed entirely from data, thus HDC can indeed learn from data and experience.

Finally, HDC involves significantly fewer operations (especially nonlinear operations) than ANNs and so tends to consume significantly less power, compute, and memory [9,10,20], with accuracy loss being quite acceptable unless the application is extremely performance critical (like self-driving vehicles or robotic surgery).

Hence, the title…

Over the decades, the machine learning community has, with good reason, developed an arsenal of tools to deal with dimensionality reduction. Techniques such as Principal Component Analysis, Factor Analysis and Independent Component Analysis are regularly used to move data from high dimensional manifolds to lower dimensions to ensure higher test accuracy with available data [21].

In HDC, however, the philosophy seems to move in exactly the reverse direction! We take data points from lower dimensional spaces and map them to extremely high dimensional manifolds. But, as we’ve seen before (and will see more clearly in Part II), this move offers two very important advantages, turning high dimensionality into a veritable blessing.

A Viable Alternative?

HDC has been tested on a very wide variety of machine learning (ML) datasets such as image classification, voice recognition, human activity detection etc. and has been shown to perform competitively with neural networks and standard ML algorithms, like support vector machines [9,10,14,20 and references therein].

However, while HDC definitely combines many advantages of Symbolic and Connectionist AI, most of the improvements in power and computing have been demonstrated on relatively² smaller datasets and on simpler tasks. Indeed, it is quite unlikely that HDC will ever replace heavy-duty connectionist architectures such as transformers because of the inherent simplicity of its operations and internal architecture.

Nonetheless, this paradigm is a promising new direction with plenty of room for exploration. Low-power alternatives to ANNs have several application areas, wireless sensor networks being some of the most important.

Coming up…

This article was intended to pique the reader’s curiosity about a rather quaint technique that is enjoying a resurgence since late 2010s. In the next part, we will see the precise HDC procedure that is adopted to actually perform classification.

A quick note about writing style

With a view to improving readability, I deliberately chose a semi-formal style of writing, including references only where absolutely necessary and eschewing my usual formal style of writing research papers. This is the style I will be using for the other parts of this article as well. Academics might find this a bit unnerving, but please note that the intent is not to create a survey article.

References

- McCarthy, John, et al. “A proposal for the dartmouth summer research project on artificial intelligence, august 31, 1955.” AI magazine 27.4 (2006): 12–12.

- Grace Solomonoff, “The Meeting of Minds That Launched AI,” IEEE Spectrum, May 6th, 2023.

- Edwin D. Reilly, “Milestones in computer science and information technology,” 2003, Greenwood Publishing Group. pp. 156–157.

- Rumelhart, David E., Geoffrey E. Hinton, and Ronald J. Williams. “Learning representations by back-propagating errors.” nature 323.6088 (1986): 533–536.

- SRI International Blogpost, “75 Years of Iinnovation: Shaky the Robot,” Link: https://www.sri.com/press/story/75-years-of-innovation-shakey-the-robot/ April 3rd, 2020.

- Minsky, Marvin L., and Seymour A. Papert. “Perceptrons.” (1969).

- Kanerva, Pentti. “Hyperdimensional computing: An introduction to computing in distributed representation with high-dimensional random vectors.” Cognitive computation 1 (2009): 139–159.

- Greff, Klaus, Sjoerd Van Steenkiste, and Jürgen Schmidhuber. “On the binding problem in artificial neural networks.” arXiv preprint arXiv:2012.05208 (2020).

- Kleyko, Denis, et al. “A Survey on Hyperdimensional Computing aka Vector Symbolic Architectures, Part I: Models and Data Transformations.” ACM Computing Surveys 55.6(2023)

- Kleyko, Denis, et al. “A survey on hyperdimensional computing aka vector symbolic architectures, part II: Applications, cognitive models, and challenges.” ACM Computing Surveys 55.9 (2023)

- Chen, Hanning, et al. “DARL: Distributed Reconfigurable Accelerator for Hyperdimensional Reinforcement Learning.” Proceedings of the 41st IEEE/ACM International Conference on Computer-Aided Design. 2022.

- Ma, Dongning, and Xun Jiao. “Hyperdimensional Computing vs. Neural Networks: Comparing Architecture and Learning Process.” arXiv preprint arXiv:2207.12932 (2022).

- Hernández-Cano, Alejandro, et al. “Reghd: Robust and efficient regression in hyper-dimensional learning system.” 2021 58th ACM/IEEE Design Automation Conference (DAC). IEEE, 2021.

- Hernández-Cano, Alejandro, et al. “Onlinehd: Robust, efficient, and single-pass online learning using hyperdimensional system.” 2021 Design, Automation & Test in Europe Conference & Exhibition (DATE). IEEE, 2021.

- Wikipedia contributors. “ImageNet.” Wikipedia, The Free Encyclopedia. Wikipedia, The Free Encyclopedia, 6 Nov. 2023. Web. 26 Nov. 2023.

- Widrow, Bernard, and Michael A. Lehr. “30 years of adaptive neural networks: perceptron, madaline, and backpropagation.” Proceedings of the IEEE 78.9 (1990): 1415–1442.

- Krizhevsky, Alex, Ilya Sutskever, and Geoffrey E. Hinton. “Imagenet classification with deep convolutional neural networks.” Advances in neural information processing systems 25 (2012).

- Zhang, Yu, et al. “A survey on neural network interpretability.” IEEE Transactions on Emerging Topics in Computational Intelligence 5.5 (2021): 726–742.

- Ananthaswamy, Anil. “A New Approach to Computation Reimagines Artificial Intelligence,” Quanta Magazine, April 2023.

- Imani, Mohsen, et al. “Revisiting hyperdimensional learning for fpga and low-power architectures.” 2021 IEEE International Symposium on High-Performance Computer Architecture (HPCA). IEEE, 2021.

- Shalev-Shwartz, Shai, and Shai Ben-David. Understanding machine learning: From theory to algorithms. Cambridge university press, 2014.

Footnotes

- I’ve used the word “solved” in a very relative sense here. It simply refers to the rather wide jump in classification accuracy of AlexNet over earlier attempts.

- Relative to the largest that ANNs today can handle.

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI

Logo:

Logo:  Areas Served:

Areas Served: