Deep Reinforcement Learning is the combination of Reinforcement Learning and Deep Learning. This technology enables machines to solve a wide range of complex decision-making tasks. Hence, it opens up many new applications in industries such as healthcare, security and surveillance, robotics, smart grids, self-driving cars, and many more.

We will provide an introduction to deep reinforcement learning:

- What is Reinforcement Learning?

- Deep Learning with Reinforcement Learning

- Applications of Deep Reinforcement Learning

- Advantages and Challenges

About us: At viso, we provide the leading end-to-end platform for computer vision. Companies use it to implement custom, real-world computer vision applications. Read the whitepaper or get a demo for your organization!

What is Deep Reinforcement Learning?

Reinforcement Learning Concept

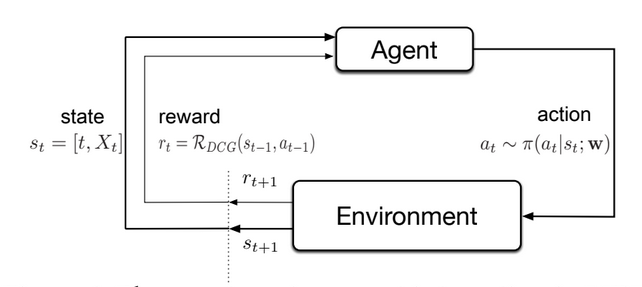

Reinforcement Learning (RL) is a subfield of Artificial Intelligence (AI) and machine learning. The Learning Method deals with learning from interactions with an environment in order to maximize a cumulative reward signal.

Reinforcement Learning relies on the concept of Trial and Error. An RL agent performs a sequence of actions in an uncertain environment to learn from experience by receiving feedback (rewards and penalties) in the form of a Reward Function to maximize reward.

With the experience gathered, the AI agent should be able to optimize some objectives given in the form of cumulative rewards. The objective of the agent is to learn the optimal policy, which is a mapping between states and actions that maximizes the expected cumulative reward.

The Reinforcement Learning Problem is inspired by behavioral psychology (Sutton, 1984). It led to the introduction of a formal framework to solve decision-making tasks. The concept is that an agent is able to learn by interacting with its environment, similar to a biological agent.

Reinforcement Learning Methods

Reinforcement Learning is different from other Learning Methods, such as Supervised Learning and Unsupervised Machine Learning. Other than those, it does not rely on a labeled dataset or a pre-defined set of rules. Instead, it uses trial and error to learn from experience and improve its policy over time.

Some of the common Reinforcement Learning methods are:

- Value-Based Methods: These RL methods estimate the value function, which is the expected cumulative reward for taking an action in a particular state. Q-Learning and SARSA are widely used Value-Based Methods.

- Policy-Based Methods: Policy-Based methods directly learn the policy, which is a mapping between states and actions that maximizes the expected cumulative reward. REINFORCE and Policy Gradient Methods are common Policy-Based Methods.

- Actor-Critic Methods: These methods combine both Value-Based and Policy-Based Methods by using two separate networks, the Actor and the Critic. The Actor selects actions based on the current state, while the Critic evaluates the goodness of the action taken by the Actor by estimating the value function. The Actor-Critic algorithm updates the policy using the TD (Temporal Difference) error.

- Model-Based Methods: Model-based methods learn the environment’s dynamics by building a model of the environment, including the state transition function and the reward function. The model allows the agent to simulate the environment and explore various actions before taking them.

- Model-Free Methods: These methods do not require the reinforcement learning agent to build a model of the environment. Instead, they learn directly from the environment by using trial and error to improve the policy. TD-Learning (Temporal difference learning), SARSA (State–action–reward–state–action), or Q-Learning are examples of a Model-Free Methods.

- Monte Carlo Methods: Monte Carlo methods follow a very simple concept where agents learn about the states and reward when they interact with the environment. Monte Carlo Methods can be used for both Value-Based and Policy-Based Methods.

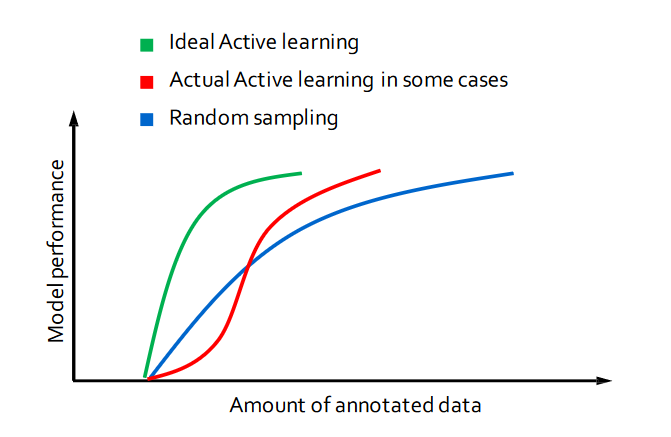

In reinforcement learning, Active Learning can be used to improve the learning efficiency and performance of the agent by selecting the most informative and relevant samples to learn from. This is particularly useful in situations where the state space is large or complex, and the agent may not be able to explore all possible states and actions in a reasonable amount of time.

Markov Decision Process (MDP)

The Markov Decision Process (MDP) is a mathematical framework used in Reinforcement Learning (RL) to model sequential decision-making problems. It is important because it provides a formal representation of the environment in terms of states, actions, transitions between states, and a reward function definition.

The MDP framework assumes that the current state depends only on the previous state and action, which simplifies the problem and makes it computationally tractable. Using the Markov Decision Process, reinforcement learning algorithms can compute the optimal policy that maximizes the expected cumulative reward.

Additionally, the MDP provides a framework for evaluating the performance of different RL algorithms and comparing them against each other.

Deep Reinforcement Learning

In the past few years, Deep Learning techniques have become very popular. Deep Reinforcement Learning is the combination of Reinforcement Learning with Deep Learning techniques to solve challenging sequential decision-making problems.

The use of deep learning is most useful in problems with high-dimensional state space. This means that with deep learning, Reinforcement Learning is able to solve more complicated tasks with lower prior knowledge because of its ability to learn different levels of abstractions from data.

To use reinforcement learning successfully in situations approaching real-world complexity, however, agents are confronted with a difficult task: they must derive efficient representations of the environment from high-dimensional sensory inputs, and use these to generalize past experience to new situations. This makes it possible for machines to mimic some human problem-solving capabilities, even in high-dimensional space, which only a few years ago was difficult to conceive.

Applications of Deep Reinforcement Learning

Some prominent projects used deep Reinforcement Learning in games with results that are far beyond what is humanly possible. Deep RL techniques have demonstrated their ability to tackle a wide range of problems that were previously unsolved.

Deep RL has achieved human-level or superhuman performance for many two-player or even multi-player games. Such achievements with popular games are significant because they show the potential of deep Reinforcement Learning in a variety of complex and diverse tasks that are based on high-dimensional inputs. With games, we have good or even perfect simulators, and can easily generate unlimited data.

- Atari 2600 games: Machines achieved superhuman-level performance in playing Atari games.

- Go: Mastering the game of Go with deep neural networks.

- Poker: AI is able to beat professional poker players in the game of heads-up no-limit Texas hold’em.

- Quake III: An agent achieved human-level performance in a 3D multiplayer first-person video game, using only pixels and game points as input.

- Dota 2: An AI agent learned to play Dota 2 by playing over 10,000 years of games against itself (OpenAI Five).

- StarCraft II: An agent was able to learn how to play StarCraft II a 99\% win-rate, using only 1.08 hours on a single commercial machine.

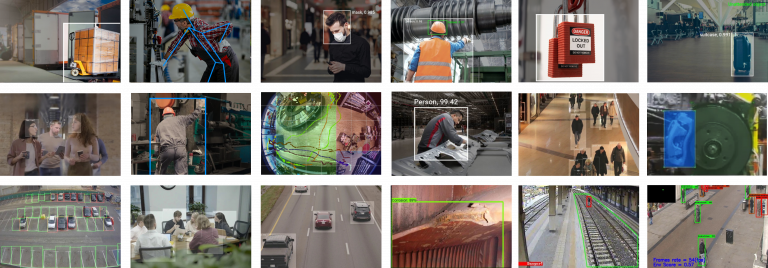

Those achievements set the basis for the development of real-world deep reinforcement learning applications:

- Robot control: Robotics is a classical application area for reinforcement learning. Robust adversarial reinforcement learning is applied as an agent operates in the presence of a destabilizing adversary that applies disturbance forces to the system. The machine is trained to learn an optimal destabilization policy. AI-powered robots have a wide range of applications, e.g. in manufacturing, supply chain automation, healthcare, and many more.

- Self-driving cars: Deep Reinforcement Learning is prominently used with autonomous driving. Autonomous driving scenarios involve interacting agents and require negotiation and dynamic decision-making, which suits Reinforcement Learning.

- Healthcare: In the medical field, Artificial Intelligence (AI) has enabled the development of advanced intelligent systems able to learn about clinical treatments, provide clinical decision support, and discover new medical knowledge from the huge amount of data collected. Reinforcement Learning enabled advances such as personalized medicine that is used to systematically optimize patient health care, in particular, for chronic conditions and cancers, using individual patient information.

- Other: In terms of applications, many areas are likely to be impacted by the possibilities brought by deep Reinforcement Learning, such as finance, business management, marketing, resource management, education, smart grids, transportation, science, engineering, or art. In fact, Deep RL systems are already in production environments. For example, Facebook uses Deep Reinforcement Learning for pushing notifications and for faster video loading with smart prefetching.

Challenges of Deep Reinforcement Learning

Multiple challenges arise in applying Deep Reinforcement Learning algorithms. In general, it is difficult to explore the environment efficiently or to generalize good behavior in a slightly different context. Therefore, multiple algorithms have been proposed for the Deep Reinforcement Learning framework, depending on a variety of settings of the sequential decision-making tasks.

Many challenges appear when moving from a simulated setting to solving real-world problems.

- Limited freedom of the agent: In practice, even in the case where the task is well-defined (with explicit reward functions), a fundamental difficulty lies in the fact that it is often not possible to let the agent interact freely and sufficiently in the actual environment, due to safety, cost or time constraints.

- Reality gap: There may be situations, where the agent is not able to interact with the true environment but only with an inaccurate simulation of it. The reality gap describes the difference between the learning simulation and the effective real-world domain.

- Limited observations: For some cases, the acquisition of new observations may not be possible anymore (e.g., the batch setting). Such scenarios occur, for example, in medical trials or tasks that depend on weather conditions or trading markets such as stock markets.

How those challenges can be addressed:

- Simulation: For many cases, a solution is the development of a simulator that is as accurate as possible.

- Algorithm Design: The design of the learning algorithms and their level of generalization have a great impact.

- Transfer Learning: Transfer learning is a crucial technique to utilize external expertise from other tasks to benefit the learning process of the target task.

Reinforcement Learning and Computer Vision

Computer Vision is about how computers gain understanding from digital images and video streams. Computer Vision has been making rapid progress recently, and deep learning plays an important role.

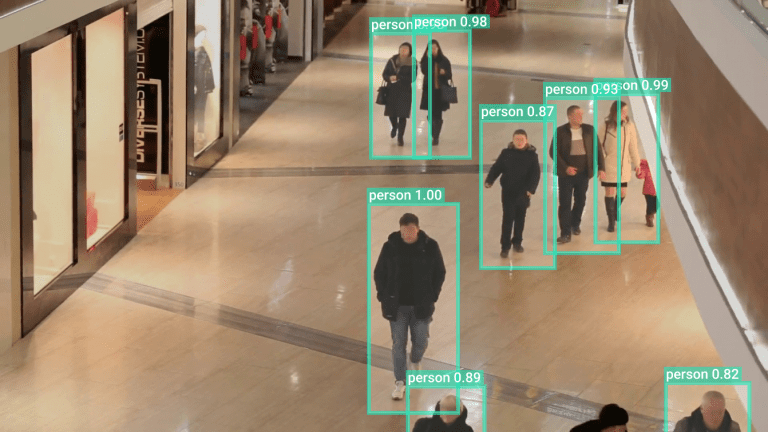

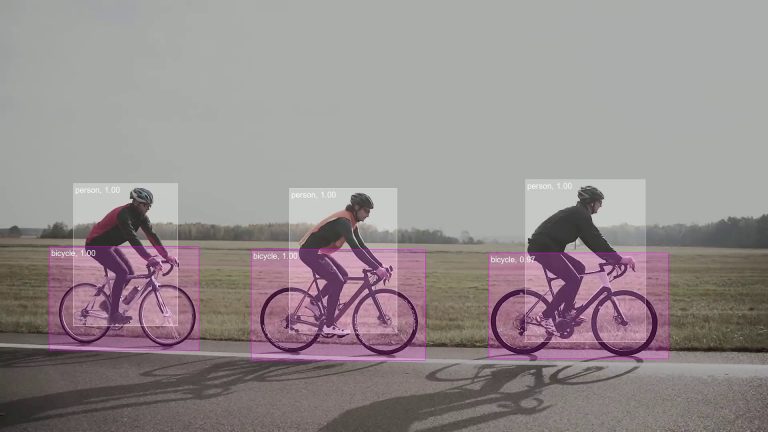

Reinforcement learning is an effective tool for many computer vision problems, like image classification, object detection, face detection, captioning, and more. Reinforcement Learning is an important ingredient for interactive perception, where perception and interaction with the environment would be helpful to each other. This includes tasks like object segmentation, articulation model estimation, object dynamics learning, haptic property estimation, object recognition or categorization, multimodal object model learning, object pose estimation, grasp planning, and manipulation skill learning.

More topics of applying Deep Reinforcement Learning to computer vision tasks, such as

- Semantic parsing of large-scale 3D point clouds for indoor scene understanding

- Teaching a machine to read maps with deep reinforcement learning

- Image-based data augmentation tasks deep reinforcement learning

- View Planning, to generate a sequence of viewpoints that are capable of sensing all accessible areas of a given object represented as a 3D model

- Face hallucination, to generate a high-resolution face image from a low-resolution input image

What’s next

In the future, we expect to see deep reinforcement algorithms going in the direction of meta-learning. Previous knowledge, for example, in the form of pre-trained Deep Neural Networks, can be embedded to increase performance and reduce training time. Advances in transfer learning capabilities will allow machines to learn complex decision-making problems in simulations (gathering samples in a flexible way) and then use the learned skills in real-world environments.

Check out our guide about supervised learning vs. unsupervised learning, or explore another related topic:

- Examples, methods, and applications of Self-Supervised Learning

- Explore an extensive list of Computer Vision Applications

- Learn about deep learning-based Mask R-CNN

- Read our easy-to-understand guide about Image Segmentation