Deploy Your Company’s Own Secure and Private ChatGPT with Azure OpenAI

Last Updated on November 6, 2023 by Editorial Team

Author(s): Stephen Bonifacio

Originally published on Towards AI.

By now, you are probably aware that it’s not a very good idea to use confidential company data with ChatGPT. The possible leakage of trade secrets and intellectual property is such a concern that even tech behemoths like Apple, Amazon, and Samsung have restricted or outright banned their employees from using the popular AI service.

When you use ChatGPT, you give OpenAI (the company behind ChatGPT) express consent to use data from your interactions, including the questions you enter and the responses the service returns, for its own purposes. Because this includes using your data to train future versions of ChatGPT, there is a risk that company secrets could be divulged in ChatGPT responses to other users. (The horror!) Read the detailed explanation of their data usage policy here.

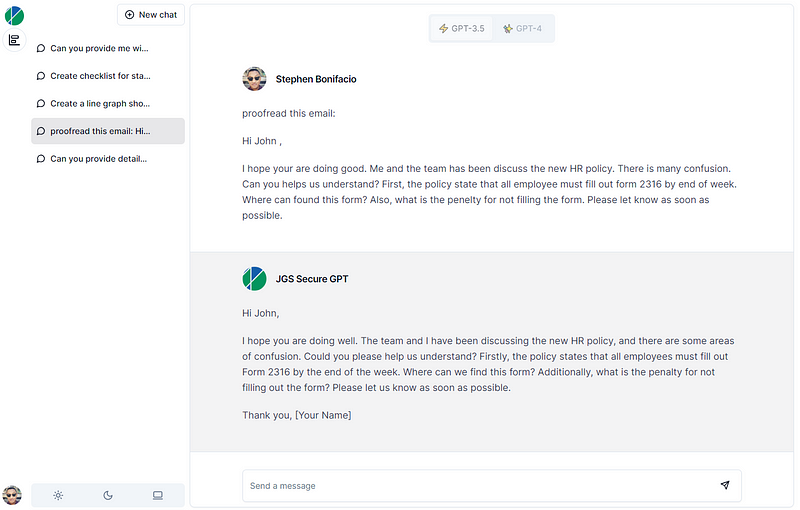

Microsoft Azure has released a solution for deploying a ChatGPT-like application hosted in customers’ own cloud environments. It gives employees access to a secure, data privacy-compliant chat assistant that they can actually use for work: generating, summarizing, or translating text that contains proprietary information, proofreading confidential e-mails, writing code or fixing errors in internal codebases, and various other tasks that can improve employee productivity.

This solution is a web app that makes API calls to large language models (LLMs) such as gpt-3.5 (the model behind the original ChatGPT) and gpt-4. It can be accessed from browsers on laptops and mobile devices. Microsoft released the app as open source under a very permissive license that allows commercial use. The GitHub repository can be found here. It offers one-click deployment to Azure App Service in the customer’s own Azure subscription.
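To make those API calls concrete, here is a minimal Python sketch (standard library only) of the kind of request a chat app sends to an Azure OpenAI chat completions endpoint. It only builds the request and does not send it. This is not code from the Azure ChatGPT repository: the resource name `contoso-openai`, the deployment name `gpt-35-turbo`, and the key are placeholders, and the `api-version` value is one that was valid at the time of writing, so check the Azure OpenAI REST reference for current versions.

```python
import json
import urllib.request


def build_chat_request(resource: str, deployment: str, api_key: str,
                       messages: list[dict],
                       api_version: str = "2023-05-15") -> urllib.request.Request:
    """Build (but do not send) a chat completions request for an Azure OpenAI deployment."""
    # The endpoint is your own Azure OpenAI resource, not api.openai.com.
    url = (f"https://{resource}.openai.azure.com/openai/deployments/"
           f"{deployment}/chat/completions?api-version={api_version}")
    body = json.dumps({"messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


req = build_chat_request(
    resource="contoso-openai",   # hypothetical resource name
    deployment="gpt-35-turbo",   # hypothetical deployment name
    api_key="<your-key>",        # placeholder; never hard-code real keys
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Summarize this confidential memo: ..."},
    ],
)
print(req.full_url)
```

The host in the URL is the customer’s own Azure OpenAI resource (`*.openai.azure.com`), which is what keeps prompts and responses inside Azure rather than routing them through OpenAI.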

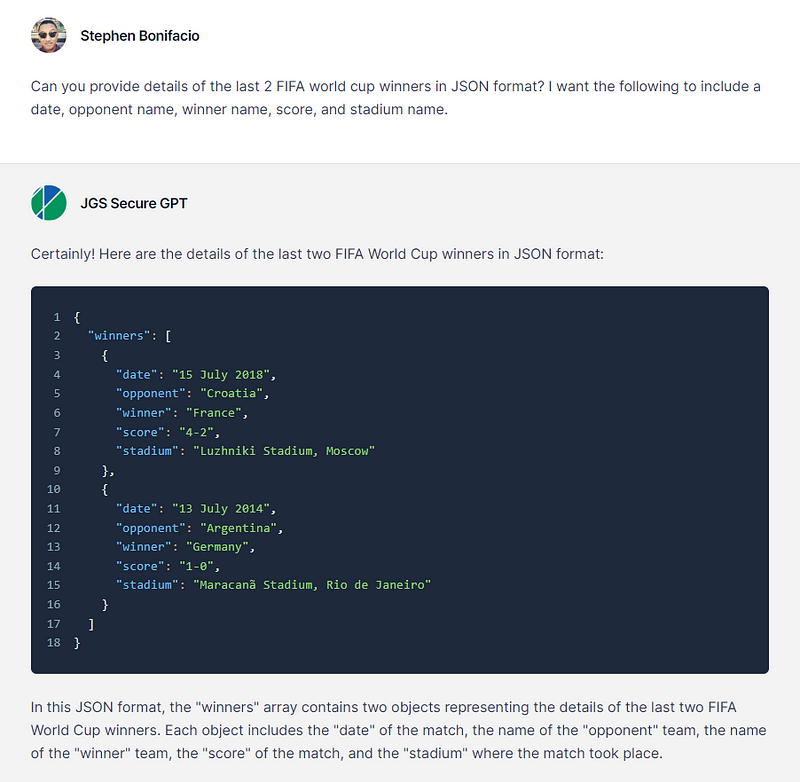

Azure ChatGPT is almost on par with ‘retail’ ChatGPT when it comes to features. It includes per-user chat history, dark mode, and responses formatted according to the context of the query, e.g., lists, tables, charts, code snippets, JSON, CSV, etc. It comes with Azure Active Directory (AD) integration as the primary method of user authentication, so it is a plug-and-play deployment for Microsoft customers whose users already use Office 365 with AD.

Security and Privacy

First, let’s talk about the security of user data, as this is the main value proposition of this solution.

The LLMs called by this application are the same models (gpt-3.5/gpt-4) used in ChatGPT, but they are hosted in Azure data centers and use Azure’s own compute resources (e.g., GPUs) for inference.

By contrast, the models that retail ChatGPT uses reside on OpenAI servers.

Thus, user data such as questions and the bot’s responses never passes through any OpenAI channel and resides solely in the Azure environment. The Azure OpenAI data privacy policy explicitly states that user data won’t be shared with OpenAI and will not be used to train future OpenAI models. (See below)

Each user’s chat history is stored in Azure Cosmos DB, a managed database service, within the customer’s own cloud environment, and is also covered by a strict data privacy policy. Cosmos DB supports redundancy options that can back up the data to multiple Azure data centers around the world.

Features

Chat history

Chat history is stored in Azure Cosmos DB for each unique user account.
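Conceptually, per-user history means every stored message is tagged with its owner and every read is scoped to that owner. The Python sketch below illustrates the idea with an in-memory stand-in for a Cosmos DB container partitioned by user ID. The `ChatMessage` fields and the `InMemoryChatHistory` class are hypothetical; they do not reflect the app’s actual schema or the Cosmos DB SDK.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ChatMessage:
    """Hypothetical message document; the real app's schema may differ."""
    user_id: str     # owner of the message (acts as the partition key here)
    thread_id: str   # conversation the message belongs to
    role: str        # "user" or "assistant"
    content: str
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class InMemoryChatHistory:
    """In-memory stand-in for a Cosmos DB container partitioned by user ID."""

    def __init__(self) -> None:
        self._by_user: dict[str, list[ChatMessage]] = defaultdict(list)

    def add(self, message: ChatMessage) -> None:
        # Writes go only to the owning user's partition.
        self._by_user[message.user_id].append(message)

    def thread(self, user_id: str, thread_id: str) -> list[ChatMessage]:
        # Reads are scoped to one user's partition, so a user can only
        # ever retrieve their own conversations.
        return [m for m in self._by_user.get(user_id, []) if m.thread_id == thread_id]

    def threads(self, user_id: str) -> set[str]:
        """IDs of all conversations belonging to one user (e.g., for a history sidebar)."""
        return {m.thread_id for m in self._by_user.get(user_id, [])}


history = InMemoryChatHistory()
history.add(ChatMessage("alice@contoso.com", "t1", "user", "Draft a reply to the audit team."))
history.add(ChatMessage("bob@contoso.com", "t1", "user", "Why does this build fail?"))
print(len(history.thread("alice@contoso.com", "t1")))  # 1: Bob's "t1" is invisible to Alice
```

Partitioning by user keeps each person’s lookups cheap and naturally isolated, which is the property that matters for a shared company deployment.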

Supported response formats

Here are the supported output formats that I have tried so far. (There might be more.)

1. Lists

2. Tables

3. Code

4. Scorecard

5. JSON

6. CSV

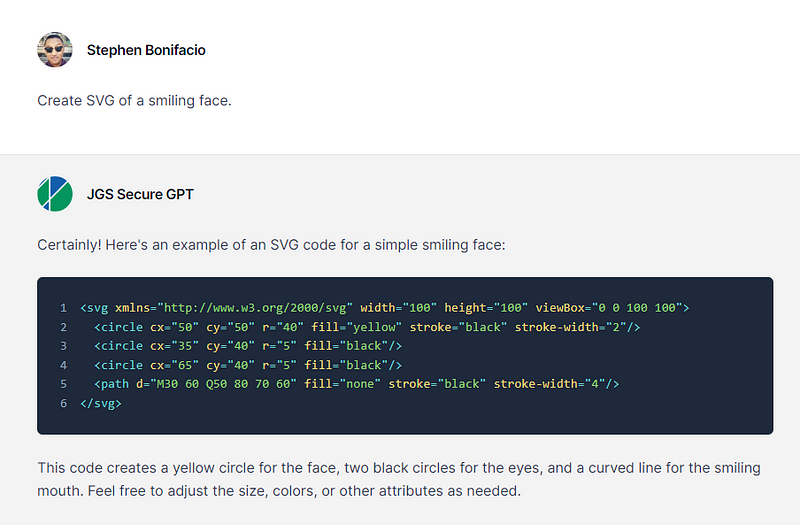

7. SVG

Dark mode

Comparison with ‘retail’ ChatGPT

‘Premium’ features such as plug-ins, custom instructions, and Code Interpreter are not (yet) available in this solution.

This application only makes API calls to Azure OpenAI models; it does not deploy self-hosted models to the customer’s own cloud environment. Customers are charged based on the models used and the number of tokens consumed.
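Because billing is per token, a rough cost estimate is simple arithmetic: tokens divided by 1,000, times the per-1K-token rate, summed over prompt and completion tokens (which may be billed at different rates depending on the model). The Python sketch below uses placeholder prices, not actual Azure OpenAI rates, and the usage figures (200 users, 20 requests a day, 21 workdays, average token counts) are hypothetical; look up current rates on the Azure OpenAI pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    """Per-1,000-token rates for one model deployment (placeholder values only)."""
    prompt_per_1k: float      # rate for input (prompt) tokens
    completion_per_1k: float  # rate for output (completion) tokens


def request_cost(pricing: ModelPricing, prompt_tokens: int, completion_tokens: int) -> float:
    """Cost of a single chat request, with each token type billed at its own rate."""
    return ((prompt_tokens / 1000) * pricing.prompt_per_1k
            + (completion_tokens / 1000) * pricing.completion_per_1k)


def monthly_cost(pricing: ModelPricing, users: int, requests_per_user_per_day: int,
                 workdays: int, avg_prompt_tokens: int, avg_completion_tokens: int) -> float:
    """Rough monthly estimate assuming every request has the average token counts."""
    per_request = request_cost(pricing, avg_prompt_tokens, avg_completion_tokens)
    return per_request * users * requests_per_user_per_day * workdays


# Hypothetical placeholder rates, NOT real Azure prices.
placeholder = ModelPricing(prompt_per_1k=0.002, completion_per_1k=0.004)
print(request_cost(placeholder, prompt_tokens=1500, completion_tokens=500))
print(monthly_cost(placeholder, users=200, requests_per_user_per_day=20, workdays=21,
                   avg_prompt_tokens=1500, avg_completion_tokens=500))
```

Keeping prompt and completion rates separate lets the same function cover models priced either uniformly or asymmetrically; swapping in gpt-4 rates, which are higher than gpt-3.5 rates, shows quickly how model choice drives the bill.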

