This AI newsletter is all you need #87

Author(s): Towards AI Editorial Team

Originally published on Towards AI.

What happened this week in AI by Louie

Last week saw arguably the two largest model updates since GPT-4, revealed within three hours of each other: OpenAI’s Sora text-to-video model and DeepMind’s Gemini Pro 1.5 model.

Events began with the surprise reveal of DeepMind’s Gemini Pro 1.5, just eight weeks after the reveal and release of Gemini Pro 1.0 and just one week after the release of Gemini Ultra 1.0. For the first time, a DeepMind LLM shows significant capability improvements over OpenAI’s GPT-4. The model has a context window of 1 million tokens, compared with GPT-4’s 128k and Anthropic Claude’s 200k, and up to 10 million tokens have been tested internally. Additionally, the new, smaller Pro 1.5 model beats the larger, older Ultra 1.0 and GPT-4 on many benchmarks, and it retrieves information accurately from within its long context. The improvements came in particular from a move to a Mixture of Experts architecture, along with many other changes across architecture and data. For Gemini Pro 1.0, input text tokens are approximately 20x cheaper than GPT-4 Turbo. So, if the price for v1.5 does not increase significantly, the combination of price and capability could enable many more use cases.

The Gemini Pro 1.5 release was quickly overshadowed by the viral release of many video examples from OpenAI’s new text-to-video model, “Sora.” The model is still at a relatively early stage and, unusually for OpenAI, far from public release. Perhaps the announcement had been prepared in advance, ready to be launched early to steal the limelight if needed. Sora is a diffusion model like DALL-E and Midjourney but uses a transformer architecture that operates on spacetime patches of video and image latent codes. The model sparked a heated debate on whether it has developed its own internal “world model” and real physics model as an “emergent property” of scale or is still just predicting pixels and does not understand what it generates.
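To make "spacetime patches" concrete, here is a minimal sketch of cutting a small video tensor into non-overlapping blocks that span both time and space, with each block flattened into one token for a transformer. The function name, the patch sizes, and the toy data are illustrative assumptions; this is not OpenAI's implementation, and Sora applies the idea to compressed latent codes rather than raw values.

```python
def spacetime_patches(video, pt, ph, pw):
    """Split a T x H x W nested list into flattened, non-overlapping patches.

    pt, ph, pw are hypothetical patch sizes along time, height and width.
    """
    T, H, W = len(video), len(video[0]), len(video[0][0])
    if T % pt or H % ph or W % pw:
        raise ValueError("patch sizes must divide the video dimensions")
    tokens = []
    for t0 in range(0, T, pt):
        for y0 in range(0, H, ph):
            for x0 in range(0, W, pw):
                # One token = every value inside this block of frames and pixels.
                tokens.append([
                    video[t][y][x]
                    for t in range(t0, t0 + pt)
                    for y in range(y0, y0 + ph)
                    for x in range(x0, x0 + pw)
                ])
    return tokens


# Toy "latent video": 4 frames of 4 x 4 values; each value encodes its (t, y, x).
video = [[[t * 100 + y * 10 + x for x in range(4)] for y in range(4)]
         for t in range(4)]
tokens = spacetime_patches(video, pt=2, ph=2, pw=2)
print(len(tokens), len(tokens[0]))
```

Because each token covers several frames as well as a spatial region, the transformer attends over motion and appearance together instead of treating frames independently.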

Why should you care?

Regardless of your view of the ultimate limitations of transformers and generative architectures in achieving intelligence and true understanding, both Gemini Pro 1.5 and Sora are clearly big steps forward in AI capability and can enable many new applications. However, they can also enable new kinds of misuse, in particular the potential use of an open-source Sora equivalent to generate one-minute deepfake videos from a photo prompt. Neither model is widely available yet, and pricing is still unknown, so we need to wait and see how impactful these models will be and when they will arrive at scale.

– Louie Peters — Towards AI Co-founder and CEO

This issue is brought to you by SuperAI:

SuperAI (Singapore, 5–6 June 2024) is the most anticipated AI conference that will define the future of artificial intelligence. SuperAI will be the central draw of Singapore AI Week (3–9 June 2024), which features a week of various independently organized side events around SuperAI. Expect a wide range of meet-ups, workshops, hackathons, networking drinks, parties, and more, transforming Singapore into a vibrant meeting of minds that will shape the future of artificial intelligence.

Hottest News

OpenAI has introduced Sora, its latest innovation designed to transform text prompts into detailed videos up to a minute long, marking a significant evolution from the existing text-to-image models. Sora is becoming available to red teamers to assess critical areas for harm or risks.

2. Google Gemini 1.5 Crushes ChatGPT and Claude With the Largest-Ever 1 Mn Token Context Window

Google released Gemini 1.5, a model that outperforms ChatGPT and Claude, with a 1 million token context window. It can process vast amounts of information in one go, including 1 hour of video, 11 hours of audio, codebases with over 30,000 lines of code, or over 700,000 words. In their research, Google also successfully tested up to 10 million tokens.

3. Meta’s New AI Model Learns by Watching Videos

Yann LeCun proposes a machine learning paradigm, V-JEPA, to enable systems to build internal world models and learn intuitively like humans. Unlike conventional methods, V-JEPA employs a non-generative technique for video understanding, prioritizing abstract interpretation over detailed reproduction.

4. OpenAI Is Reportedly Developing an AI Web Search To Compete With Google

OpenAI is developing a web search product that will compete directly with Google and may be based at least partly on Microsoft’s Bing search. It may incorporate an improved ChatGPT that utilizes Bing for web-based information summarization.

5. Stability AI Announces Stable Cascade

Stability AI has introduced Stable Cascade, a research preview of a new text-to-image model based on the Würstchen architecture. The model is distributed under a non-commercial license and, thanks to its three-stage approach, is easy to train and fine-tune on consumer-grade hardware.

Five 5-minute reads/videos to keep you learning

OpenAI’s Sora stands out as a significant milestone, promising to reshape our understanding and capabilities in video generation. This article covers the technology behind Sora and its potential to inspire a new generation of models in image, video, and 3D content creation.

2. Evaluating LLM Applications

This post provides thoughts on evaluating LLMs and discusses some emerging patterns that work well in practice. It reflects experience with thousands of teams deploying LLM applications in production.

3. Machine Learning in Chemistry

Machine learning and neural networks like CNNs and RNNs significantly advance chemical research by identifying complex data patterns, aiding drug development, predicting toxicity, and understanding structure-activity relationships.

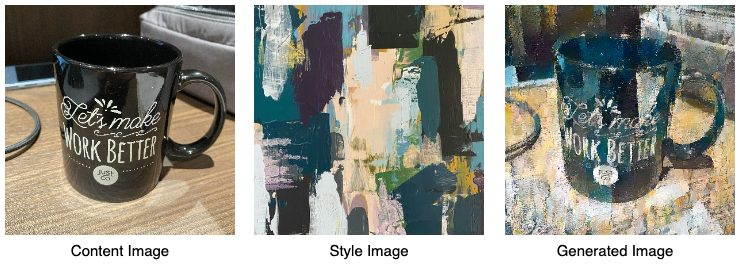

4. 🤗 PEFT Welcomes New Merging Methods

This post explores model merging, covering methods such as Concatenation and linear/Task Arithmetic to combine/merge LoRA adapters. The article also shares how to use these merging methods for text-to-image generation.

5. Neural Network Training Makes Beautiful Fractals

Neural network training can inadvertently generate intricate fractals, reflecting the dynamic interplay of hyperparameter settings, particularly the learning rate. As the learning rate setting is fine-tuned to avoid divergence and ensure efficient training, the boundary between effective training and failure manifests as a fractal pattern.
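For the LoRA adapter merging covered in item 4 above, the difference between concatenation and a linear combination can be sketched with tiny matrices. Each adapter contributes a low-rank update `B @ A`. Stacking the factors along the rank dimension reproduces the weighted sum of updates exactly, while a weighted sum of the factors themselves generally does not. The helper functions, toy adapters, and weights below are illustrative assumptions, and this is one simple reading of the two methods rather than the PEFT library's implementation.

```python
def matmul(X, Y):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def scale(X, w):
    return [[w * v for v in row] for row in X]

def add(X, Y):
    return [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(X, Y)]


# Two toy rank-1 adapters for a 2 x 2 weight (A: r x d_in, B: d_out x r).
A1, B1 = [[1.0, 2.0]], [[1.0], [0.0]]
A2, B2 = [[0.0, 1.0]], [[0.0], [2.0]]
w1, w2 = 0.5, 0.25  # hypothetical merge weights

# Reference: the weighted sum of the two full updates.
target = add(scale(matmul(B1, A1), w1), scale(matmul(B2, A2), w2))

# Concatenation: stack A along rows and B along columns (rank becomes r1 + r2).
A_cat = scale(A1, w1) + scale(A2, w2)
B_cat = [r1 + r2 for r1, r2 in zip(B1, B2)]
delta_cat = matmul(B_cat, A_cat)

# Linear: weighted sum of the factors (needs equal ranks, keeps rank r).
A_lin = add(scale(A1, w1), scale(A2, w2))
B_lin = add(scale(B1, w1), scale(B2, w2))
delta_lin = matmul(B_lin, A_lin)

print("target:", target)
print("concat:", delta_cat)
print("linear:", delta_lin)
```

The trade-off is that concatenation is exact but increases the adapter rank, while the linear variant keeps the rank fixed at the cost of cross terms between adapters.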

Repositories & Tools

- Reor is an AI note-taking app that runs models locally: it automatically links related ideas, answers questions, and provides semantic search.

- ShellGPT, powered by GPT-4, helps you generate shell commands, code snippets, and documentation directly from the command line.

- CrewAI is a framework for orchestrating role-playing autonomous AI agents. It empowers agents to work together, tackling complex tasks.

- TACO is a new benchmark for assessing a system’s ability to generate code. It is substantially larger than existing datasets and contains more challenging problems.

- MetaTree is a transformer-based decision tree algorithm. It learns from classical decision tree algorithms for better generalization capabilities.

Top Papers of The Week

This paper introduces BASE TTS, a text-to-speech system trained on 100K hours of speech data that sets a new bar for natural-sounding speech synthesis. It uses a 1-billion-parameter Transformer model to generate “speechcodes” from text, which are converted into waveforms by a convolutional decoder.

2. World Model on Million-Length Video and Language With RingAttention

This paper curates a large dataset of diverse videos and books, utilizing the RingAttention technique to scalably train on long sequences and gradually increase context size from 4K to 1M tokens. It paves the way for training on massive datasets of long videos and language to develop an understanding of both human knowledge and the multimodal world.

3. Training Language Models To Generate Text With Citations via Fine-Grained Rewards

Researchers have developed a method to train smaller language models (LMs) to generate responses with appropriate citations, using Llama 7B as a test case. Initially, they trained Llama 7B on ChatGPT outputs to answer questions with cited contexts and improved the model through rejection sampling and reinforcement learning. Results show that Llama 7B outperforms ChatGPT in providing cited answers.

4. Transformers Can Achieve Length Generalization but Not Robustly

The research demonstrates that standard Transformers can generalize integer addition to longer sequences through effective data representation and positional encoding methods. However, this generalization ability is sensitive to factors like weight initialization and training data sequence, resulting in considerable variability in the model’s performance.

5. GraphCast: Learning Skillful Medium-Range Global Weather Forecasting

The paper introduces a machine learning-based method called “GraphCast,” which can be trained directly from reanalysis data. It predicts hundreds of weather variables over ten days at 0.25-degree resolution globally in under one minute. GraphCast outperforms the most accurate operational deterministic systems on 90% of 1380 verification targets.
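To give a sense of scale for the GraphCast figures above, this back-of-the-envelope sketch counts the grid points implied by a 0.25-degree global resolution. It assumes a regular latitude-longitude grid that includes both poles, and it assumes 6-hour forecast steps, which the summary above does not state.

```python
# Assumption: a regular latitude-longitude grid that includes both poles.
resolution_deg = 0.25
n_lat = int(180 / resolution_deg) + 1  # -90 to +90 inclusive
n_lon = int(360 / resolution_deg)      # 0 up to, but not including, 360
grid_points = n_lat * n_lon

# Assumption: 6-hour forecast steps, so a ten-day forecast is a chain of steps.
step_hours = 6
n_steps = 10 * 24 // step_hours

print(n_lat, n_lon, grid_points, n_steps)
```

Under these assumptions, each forecast step predicts every variable at over a million locations, and a ten-day forecast repeats that step 40 times.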

Quick Links

1. Cohere For AI launches Aya, an LLM covering over 100 languages. It is an open-source multilingual LLM trained on the largest multilingual instruction fine-tuning dataset to date, with 513 million instances covering 114 languages.

2. Nvidia is releasing an early version of Chat with RTX, a demo app that runs locally on a PC. You only need an RTX 30- or 40-series GPU with at least 8GB of VRAM. You can feed it YouTube videos and your documents to create summaries and get relevant answers.

3. Lambda has raised a $320 million Series C led by the US Innovative Technology Fund. With this new financing, Lambda will ensure AI engineering teams can access NVIDIA GPUs with high-speed NVIDIA Quantum-2 InfiniBand networking.

4. AI cloud firm Together AI is set to raise more than $100 million in a new funding round. Since its launch, Together AI has raised $122.5 million across two previous funding rounds.

Who’s Hiring in AI

Our friends at Zoī are hiring their Chief AI Officer. Zoī is at the crossroads of 3 domains: Medical, Data Science, and BeSci. Their approach involves a fully integrated 360 check-up, both physical and digital, with the first center launched in Paris in 2023. In 2022, they successfully raised €20 million in the largest pre-seed round seen in European Healthtech, led by Stephane Bancel, CEO of Moderna. Zoī aims to create personalized user manuals for each member by gathering only the necessary data to provide tailored recommendations based on thousands of factors. If you’re interested in leading the effort to revolutionize Medicine X.0 towards Predictive Health, feel free to reach out to them at [email protected].

Senior Software Engineer, ChatGPT Model Optimization @OpenAI (San Francisco, CA, USA)

Senior Fullstack Developer @Text (Remote)

Actuary Platform Developer Associate @Global Atlantic Financial Group (Boston, USA/Hybrid)

Senior AI Engineer @Tatari (Remote)

Conversational AI Engineer — Google Dialogflow @Netomi (Toronto, Canada/Freelance)

Senior Machine Learning Engineer @Recursion (Salt Lake City, USA/Remote)

Senior Fullstack Engineer — AI @Sweep (Remote)

Interested in sharing a job opportunity here? Contact [email protected].

If you are preparing your next machine learning interview, don’t hesitate to check out our leading interview preparation website, confetti!

Think a friend would enjoy this too? Share the newsletter and let them join the conversation.

Join thousands of data leaders on the AI newsletter. Join over 80,000 subscribers and keep up to date with the latest developments in AI. From research to projects and ideas. If you are building an AI startup, an AI-related product, or a service, we invite you to consider becoming a sponsor.

Published via Towards AI
