
Across enterprises of all sizes, not every challenge can be tackled internally. This is where technology service providers step in to handle critical functions such as IT support, ticketing, HR policies, finance and more. With the advent of generative AI, these solutions have become markedly more capable. One standout player in this space is the “enterprise copilot” platform Moveworks.

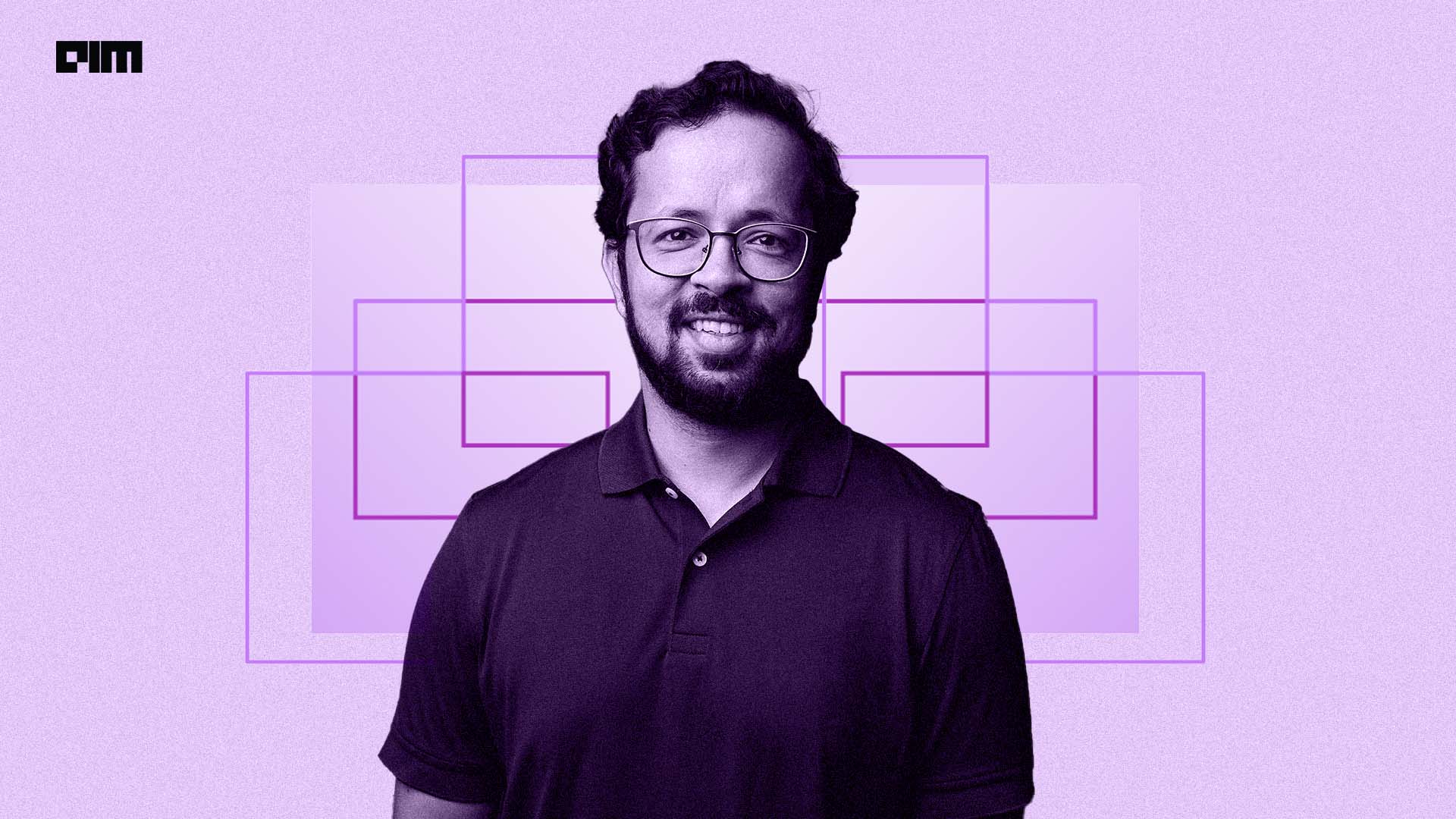

“We noticed that IT support for enterprises hadn’t seen much innovation for years. Employees faced delays and inefficiencies in getting IT issues resolved. This led us to develop a conversational AI service that could understand natural language and help employees troubleshoot IT problems seamlessly,” Vaibhav Nivargi, co-founder and CTO of California-based Moveworks.AI, told AIM in an exclusive interaction. The company aims to boost employee productivity by taking care of IT support, finance, facilities, HR and more.

Moveworks was founded in 2016 by Bhavin Shah (CEO), Vaibhav Nivargi, Varun Singh (President of Product) and Jiang Chen (CTO, AI) with the vision of strengthening individuals’ efficiency.

Over the years, their vision has expanded beyond IT support as similar issues exist in HR, finance, facilities, and various other domains. Additionally, they introduced a developer platform called Creator Studio designed to empower customers and partners to enhance the platform’s capabilities.

The emergence of generative AI models like ChatGPT has been the cherry on the cake. These models showcased the possibilities of generative AI, generating images, videos, and more with little effort. A debate followed over whether these models were evolving towards sentience or simply reaching the next level of predictive prowess. Either way, they remained undeniably fascinating.

“We have a diverse range of customers including Coca-Cola, Palo Alto, DocuSign, Broadcom, Toyota, Slack, Nutanix and others and we use various models based on specific use cases. This significantly reduces the time it takes to resolve issues and tickets for employees,” said Nivargi.

Employees often don’t even realise they’re interacting with a Moveworks bot, as it becomes integrated into the company’s identity. For example, Palo Alto’s chatbot is called Sheldon, Slack’s is AskBot and Pinterest’s is known as K9. All of these AI chatbots are actually the Moveworks bot. The platform also lets companies send targeted messages through the bots, which can be particularly useful for important announcements within the organisation.

“Additionally, we’ve added data analytics to our offerings, allowing CIOs, finance teams, and others to gain insights from natural language data. This data-driven approach helps us continuously improve our models and provide better support to our customers,” commented the Stanford alumnus.

Implementation of Generative AI

Long before generative AI entered the mainstream, Moveworks began its tryst with language models, starting with Google’s BERT in 2019.

The tech team, headed by both Nivargi and Chen, employs a combination of LLMs to provide precise conversational support. They have also forged partnerships with tech giants like Microsoft Azure and OpenAI to leverage generative AI capabilities. These models are particularly useful for generating synthetic data when a client has limited data, allowing users to specify the data’s characteristics to generate more data that matches their requirements.

“Depending on the problem, we fine-tune the larger models to suit the customers’ needs,” he added. Choosing the right model depends on various factors such as modality, accuracy, and pricing.
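To make the trade-off concrete, here is a minimal sketch of how such a selection could be expressed in code. It is not Moveworks’ actual logic: the `ModelOption` type, the `choose_model` function, the model names and all the numbers are hypothetical, made up purely for illustration. The idea is simply to filter candidates by modality and a minimum accuracy, then pick the cheapest one that qualifies.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class ModelOption:
    """A hypothetical catalogue entry; none of these fields come from Moveworks."""
    name: str
    modalities: frozenset
    accuracy: float             # illustrative score between 0 and 1
    price_per_1k_tokens: float  # illustrative price, arbitrary currency


def choose_model(options: Iterable[ModelOption],
                 modality: str,
                 min_accuracy: float) -> Optional[ModelOption]:
    """Return the cheapest model that supports the modality and meets the accuracy bar."""
    eligible = [
        m for m in options
        if modality in m.modalities and m.accuracy >= min_accuracy
    ]
    # default=None covers the case where no model qualifies.
    return min(eligible, key=lambda m: m.price_per_1k_tokens, default=None)


# Made-up catalogue for demonstration only.
CATALOG = [
    ModelOption("large-general", frozenset({"text", "image"}), 0.95, 0.030),
    ModelOption("small-task", frozenset({"text"}), 0.88, 0.002),
    ModelOption("mid-general", frozenset({"text"}), 0.91, 0.010),
]

if __name__ == "__main__":
    picked = choose_model(CATALOG, modality="text", min_accuracy=0.90)
    print(picked.name if picked else "no suitable model")
```

In this toy catalogue, raising the accuracy bar pushes the choice towards the larger and more expensive model, while a lower bar lets the cheaper task-specific option win.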

For example, OpenAI’s GPT-4 offers broad language understanding and generation capabilities, enabling nuanced responses to employee queries. Task-specific discriminative models enhance precision for specific support functions, ensuring accurate responses to common service needs. Moveworks has even developed its custom LLM, MoveLM, fine-tuned on enterprise data to handle industry-specific vocabulary and workflows.

“Moreover, there are other models employed for communication, which are trained using specific data sets to teach the model how to communicate effectively. These intermediate models are often used in one-shot and zero-shot settings,” he added.

The implementation of LLMs at Moveworks involves chaining the outputs of different models to deliver a cohesive conversational experience. This approach balances the versatility of foundation models with the precision of task-specific models, ensuring the accurate automation of various enterprise tasks.
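The chaining idea can be illustrated with a small sketch. This is not Moveworks’ implementation: the `Turn` type, the step functions and the keyword rules are all hypothetical stand-ins. A rule-based classifier plays the role of a task-specific discriminative model, and a template lookup plays the role of a generative model; the point is only that each step consumes the output of the previous one.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Turn:
    """State passed along the chain; a hypothetical structure for illustration."""
    text: str
    intent: str = ""
    reply: str = ""


def classify_intent(turn: Turn) -> Turn:
    # Stand-in for a task-specific discriminative model (simple keyword rules).
    lowered = turn.text.lower()
    if "password" in lowered or "vpn" in lowered:
        turn.intent = "it_support"
    elif "leave" in lowered or "payroll" in lowered:
        turn.intent = "hr"
    else:
        turn.intent = "general"
    return turn


def generate_reply(turn: Turn) -> Turn:
    # Stand-in for a generative model: the reply is conditioned on the
    # intent produced by the previous step in the chain.
    templates = {
        "it_support": "This looks like an IT issue. I can help with access or credentials.",
        "hr": "This looks like an HR question. I can look up the relevant policy.",
        "general": "I can help with that. Could you share a few more details?",
    }
    turn.reply = templates[turn.intent]
    return turn


def run_chain(text: str, steps: List[Callable[[Turn], Turn]]) -> Turn:
    """Feed the output of each step into the next one, in order."""
    turn = Turn(text=text)
    for step in steps:
        turn = step(turn)
    return turn


PIPELINE = [classify_intent, generate_reply]

if __name__ == "__main__":
    result = run_chain("I forgot my VPN password", PIPELINE)
    print(result.intent, "|", result.reply)
```

In a real system each step would call a trained model rather than a lookup table, but the structure stays the same: a precise, narrow model decides what the request is about, and a broader model shapes the response.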

Solving Customer Problems, One at a Time

When addressing customer pain points, it is a common notion that AI may replace human tasks; however, Nivargi and his team observed that AI augments human capabilities rather than replacing them. In essence, their technology improves employees’ functions, making the corporate environment more efficient.

“We integrate with a multitude of systems, including IT, HR, knowledge, identity, and space management systems. Whether you need to book a conference room or solve an IT issue, Moveworks is there to assist,” he added.

Companies typically maintain internal support teams to manage these issues, which can be costly. Moveworks aims to handle the same work at a lower cost.

“We address pain points that may seem infrequent on an individual basis but collectively add up to significant productivity challenges,” he said.

Back home, the company has partnerships with Indian IT giants like Infosys, TCS, Wipro, and HCL Tech. However, the problems are the same everywhere, regardless of a company’s demographics, size, or industry. Whether it’s an MNC brand like Databricks or a smaller company, the ticketing problem is consistent.

Nivargi commented, “While each company may have some unique aspects, the core issue is widespread. The company’s ability to integrate with various ticketing systems allows them to gather valuable feedback and continuously improve their service.” To grow even bigger and stronger, Moveworks is hiring across various verticals. Considering the tech talent India has to offer, Moveworks is in expansion mode in the country, with its second headquarters based in Bengaluru.
