For the last six months, we have been part of the 2021 JournalismAI Collab Challenges, a project connecting global newsrooms to understand how artificial intelligence can improve journalism. Our particular challenge was to answer this question:

“How might we use modular journalism and AI to assemble new storytelling formats and reach underserved audiences?”

Participating newsrooms were organised into teams to define the challenges they would work on, imagine potential solutions, and turn them into prototypes. Our team included newsrooms from across Europe, Africa and the Middle East. Although we all attract different audiences, produce different types of content and have different business models, we share some of the same fundamental challenges.

Modules were defined as fragments of a story that can live independently, be repurposed or even be replaced by another fragment. Based on this definition, quotes strongly qualify as modules.

There are a number of good reasons for using AI to identify quotes: creating new content from them, tracking how opinions on the same subject shift over time, and fact checking. Another interesting use case is revealing hidden insights about our journalism. Who are our sources? How diverse are they? How often do we quote the same people or organisations? Do we give the same exposure to different gender and ethnic groups?

What is a quote?

The Guardian joined forces with Agence France-Presse (AFP) to work on a machine learning solution to accurately extract quotes from news articles and match them with the right source.

Existing solutions did not work that well on our content. The models struggled to recognise quotes that did not match a classic pattern such as:

They admitted: “The model was trained on a limited number of quotation styles.”

Some models were returning too many false positives and identifying generic statements as quotes. For example:

The government announced on Thursday that the means-tested support families receive with their care would not be counted towards the £85,000 total, meaning those with relatively modest assets could still see themselves paying that amount in full.

Co-referencing, the process of establishing the source of a quote by finding the correct reference in the text, was also an issue, especially when the source’s name was mentioned several sentences or even paragraphs before the quote itself.
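
To see why co-referencing is hard, consider a deliberately naive heuristic (our own illustration, not a method the team used): attribute a pronoun-cued quote to whichever known person was mentioned most recently before it. The function name, the example text and the list of people are hypothetical, and the list is assumed to be already known, which a real system would first have to work out for itself.

```python
from typing import List, Optional


def naive_source(text: str, quote_start: int, people: List[str]) -> Optional[str]:
    """Return whichever of `people` is mentioned last before quote_start.

    A deliberately naive, hypothetical heuristic: it ignores pronoun gender,
    grammar and discourse structure entirely.
    """
    best_name, best_pos = None, -1
    for name in people:
        pos = text.rfind(name, 0, quote_start)  # last mention before the quote
        if pos > best_pos:
            best_name, best_pos = name, pos
    return best_name


# Hypothetical example: "she" refers back to Sarah Jones, two sentences earlier.
text = (
    "Sarah Jones, the council leader, criticised the plan. "
    "The minister, Tom Smith, had defended it on Monday. "
    "“It will not work,” she said."
)
quote_start = text.index("“It will not work")
print(naive_source(text, quote_start, ["Sarah Jones", "Tom Smith"]))  # Tom Smith (wrong)
```

The nearest name wins even though the intended speaker was introduced earlier, which is exactly the long-distance case described above.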

Our previous attempts to solve this problem using regular expressions (sequences of characters that specify a search pattern) stumbled over words that content creators had put in quotation marks to indicate non-standard English terms (such as “woke”). We wanted to see if we could teach a machine to understand the difference between a genuine quote and a term placed in quotation marks. The machine learning approach also promised an extra benefit: it could better cope with typos that leave quotation marks mismatched or missing, and with sources quoted inside another quote.
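
A minimal sketch of the problem, assuming curly quotation marks and a hand-picked list of speech verbs (both our assumptions, not the Guardian's actual patterns): a bare quotation-mark pattern also catches “woke”, and filtering on a cue verb right before the opening mark removes it, but also drops an ordinary quote whose cue comes afterwards.

```python
import re
from typing import List

# Anything between curly double quotation marks (an assumed, simplified rule).
QUOTED = re.compile(r"“([^”]*)”")

# A speech verb, optionally followed by a colon, immediately before the quote.
CUE_BEFORE = re.compile(r"\b(?:said|says|told|added|admitted)\s*:?\s*$")


def all_quoted(text: str) -> List[str]:
    """Every span in quotation marks, whether it is a real quote or not."""
    return QUOTED.findall(text)


def cue_filtered(text: str, window: int = 30) -> List[str]:
    """Keep only quoted spans that directly follow a cue verb.

    `window` limits how many preceding characters are inspected.
    """
    kept = []
    for match in QUOTED.finditer(text):
        preceding = text[max(0, match.start() - window):match.start()]
        if CUE_BEFORE.search(preceding):
            kept.append(match.group(1))
    return kept


text = (
    "Critics dismissed the campaign as “woke”. "
    "The minister said: “We will review the policy next year.” "
    "“It is a mistake,” she said."
)
print(all_quoted(text))    # ['woke', 'We will review the policy next year.', 'It is a mistake,']
print(cue_filtered(text))  # ['We will review the policy next year.']
```

Each rule fixes one failure and introduces another, which is why a long list of hand-written patterns grows quickly and still misses cases.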

First, we needed a clear definition of a quote. We decided to use the Wikipedia definition as our starting point:

“A quotation is the repetition of a sentence, phrase, or passage from speech or text that someone has said or written. In oral speech, it is the representation of an utterance (ie of something that a speaker actually said) that is introduced by a quotative marker, such as a verb of saying. For example: John said: ‘I saw Mary today.’ Quotations in oral speech are also signalled by special prosody in addition to quotative markers. In written text, quotations are signalled by quotation marks.”

Following this definition we made a design decision to clearly separate paraphrases and quotes and focus our efforts on identifying text in quotation marks only.

Deep learning to the rescue

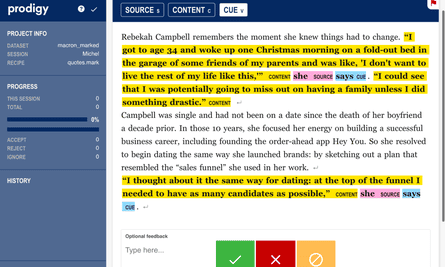

To train a model to identify quotes in text we used two tools created by Explosion. spaCy is one of the leading open-source libraries for advanced natural language processing using deep neural networks. Prodigy is an annotation tool that provides an easy-to-use web interface for quick and efficient labelling of training data.

Together with our AFP colleagues we manually annotated more than 800 news articles with three entities: content (the quote, in quotation marks), source (the speaker, which might be a person, an organisation, etc), and cue (usually a verb phrase, indicating the act of speech or expression).
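
For readers unfamiliar with span annotation, here is a minimal sketch of what one annotated sentence might look like. The record stores character offsets into the text, similar in spirit to the span-based JSONL that Prodigy works with; the helper `make_span`, the exact field names, the upper-case labels and the choice to include the quotation marks inside the content span are our assumptions.

```python
import json
from typing import Dict


def make_span(text: str, fragment: str, label: str) -> Dict[str, object]:
    """Locate `fragment` in `text` and return its character offsets and label."""
    start = text.index(fragment)  # raises ValueError if the fragment is absent
    return {"start": start, "end": start + len(fragment), "label": label}


text = "They admitted: “The model was trained on a limited number of quotation styles.”"
record = {
    "text": text,
    "spans": [
        make_span(text, "They", "SOURCE"),   # who is speaking
        make_span(text, "admitted", "CUE"),  # the act of speech
        make_span(  # the quoted words (marks included, by assumption)
            text,
            "“The model was trained on a limited number of quotation styles.”",
            "CONTENT",
        ),
    ],
}

# One JSON object per line is a convenient way to store many annotated examples.
print(json.dumps(record, ensure_ascii=False))
```

Because spans are plain offsets, two annotators' versions of the same sentence can be compared directly, which helps when checking how consistent the annotations are.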

Stick to the style guide, please!

Before rolling up our sleeves we needed to create a very clear and concise guide for annotating our data. To minimise noise and uncertainty in the training dataset we had to make sure that multiple annotators would understand the task in the same way.

The Guardian’s style guide offers an overview of how writers should quote sources. It was a good starting point and we found it very useful. However, we discovered that many quotes in our content deviated significantly from the suggested rules in this guide.

From the first model based on regular expressions we inherited a long list of different quotation styles and constructs. Initially, we counted 12 different ways journalists include quotes in their writing but we added many more during the annotation process.

“If only all quotes were like this,” we moaned.

The last item in this long list of different constructs was this one:

The annotator got annoyed and said: “When we thought we had listed all the quote styles we found this …” she said. 🙇

You can find the complete list in our public GitHub repository.

Michaëla Cancela-Kieffer, AFP deputy news editor for editorial projects, says: “I like the idea that AI forces us to deconstruct our habits and understand how we do things, and what steps we take before telling the model what the rules are. By doing that we can sometimes identify necessary changes and improve our original ‘real life’ processes. That is why this type of experiment could also lead to changes in our style guide.”

Human learning and machine learning

The main challenge in building the training dataset was navigating the ambiguity of different journalistic styles. For several days, we discussed dozens of cases where it was difficult to make the right choice.

How should we treat song lyrics or poems? What about messages on placards? What if someone quotes their thoughts, something that has not been said aloud?

The first batch of our annotations turned out to be quite noisy and inconsistent but we were getting better and better with each iteration.

Collectively we experienced the same teaching process we were putting our model through. The more examples we looked at, the better we became at recognising different cases. Yet the question remained – if it is difficult for a human to make these decisions, can we teach a machine to cope with this task?

The results looked promising, especially for the content entity. Across all three entities (content, source, cue), the model scored 89% overall. Considering each entity separately, content scored the highest (93%), followed by cue (86%) and source (84%).

Interestingly, we achieved these results after discarding the very first annotations we made, which suggests that we became much better, and better aligned with each other, as we annotated more examples.

The differences between the three entities are not surprising. The content entity is enclosed in quotation marks, so punctuation is a strong signal for matching this entity type. However, not every phrase in quotation marks is a quote: quotation marks are also used for other stylistic choices, which adds noise to the entity extraction task. Our preliminary analysis suggests that the model has learned to distinguish between genuine quotes and words in quotation marks that indicate non-standard terms or other stylistic choices.

To evaluate our model, we used the strictest way of measuring named entity recognition performance: each predicted entity must match the annotated entity exactly, from start to end. Even when the model gets an entity wrong, we often find that it has matched part of it. This is especially true for source entities.
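
The gap between strict and partial matching is easier to see in code. Below is a minimal sketch in plain Python (not spaCy's built-in scorer) that scores exact span matches per label, pools the counts into a single micro-averaged "ALL" figure, and separately counts predictions that merely overlap an annotation. The function names and the offsets in the example are hypothetical.

```python
from typing import Dict, List, Tuple

Entity = Tuple[int, int, str]  # (start offset, end offset, label)


def prf(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 from raw counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def strict_scores(gold: List[Entity], pred: List[Entity]) -> Dict[str, Tuple[float, float, float]]:
    """Exact-match scores per label, plus an 'ALL' row pooling the counts (micro average)."""
    gold_set, pred_set = set(gold), set(pred)
    labels = sorted({e[2] for e in gold_set | pred_set})
    scores = {}
    totals = [0, 0, 0]  # tp, fp, fn summed across all labels
    for label in labels:
        g = {e for e in gold_set if e[2] == label}
        p = {e for e in pred_set if e[2] == label}
        counts = (len(g & p), len(p - g), len(g - p))  # tp, fp, fn
        scores[label] = prf(*counts)
        totals = [t + c for t, c in zip(totals, counts)]
    scores["ALL"] = prf(*totals)
    return scores


def partial_matches(gold: List[Entity], pred: List[Entity]) -> int:
    """Predictions that overlap a same-label gold entity without matching it exactly."""
    gold_set = set(gold)
    return sum(
        1
        for start, end, label in pred
        if (start, end, label) not in gold_set
        and any(label == g_label and start < g_end and g_start < end
                for g_start, g_end, g_label in gold_set)
    )


# Hypothetical example: the model finds "Moran" instead of "Chris Moran".
gold = [(0, 11, "SOURCE"), (12, 16, "CUE"), (18, 40, "CONTENT")]
pred = [(6, 11, "SOURCE"), (12, 16, "CUE"), (18, 40, "CONTENT")]
print(strict_scores(gold, pred))
print(partial_matches(gold, pred))  # 1
```

Under strict scoring the truncated source counts as both a false positive and a false negative, even though it overlaps the annotated name.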

What’s next?

Moving forward we need to build a robust coreference resolution system. We would like to explore deep learning options to help us with this mission.

Another challenge will be to identify meaningful quotes: content that is worth storing for future reference. We are confident that a combination of machine learning, existing metadata about articles, and additional information extracted from sources and content could give us a strong signal for classifying quotes.

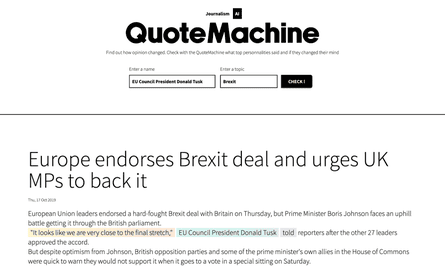

Another application would be a user interface for discovering quotes. This would enable journalists to surface previous quotes quickly in order to check them against current statements or to enrich their articles.

“This could lead to a user-facing tool with multiple applications. The data generated by that search could in return inform the newsroom about users’ interests,” says Cancela-Kieffer.

Chris Moran, the head of editorial innovation at the Guardian, says: “We’re committed to thinking about AI and automation through a journalistic lens, and will be experimenting as much as we can to find the really positive ways we can apply it and avoid the pitfalls.”

Attempting to identify and extract quotes from news articles using machine learning may seem arcane to some. But the potential benefits to readers, journalists and editors could be considerable: from making sure we are giving a platform to those who are often under-represented, to building products and formats that tell the whole story, rather than defaulting to a simple “he said, she said” formula.

Watch this space: we hope to update you on our progress soon.

This project is part of the 2021 JournalismAI Collab Challenges, a global initiative that brings together media organisations to explore innovative solutions to improve journalism via the use of AI technologies. It was developed as part of the EMEA cohort of the Collab Challenges that focused on modular journalism with the support of BBC News Labs and Clwstwr.

JournalismAI is a project of Polis – the journalism thinktank at the London School of Economics and Political Science – and it’s sponsored by the Google News Initiative. If you want to know more about the Collab Challenges and other JournalismAI activities, sign up for the newsletter or get in touch with the team via hello@journalismai.info