spaCy meets Transformers: Fine-tune BERT, XLNet and GPT-2

Explosion

AUGUST 1, 2019

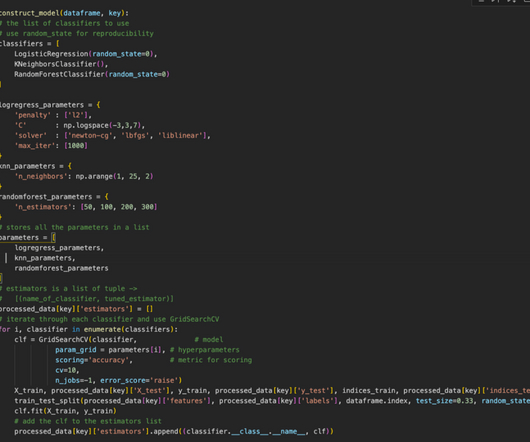

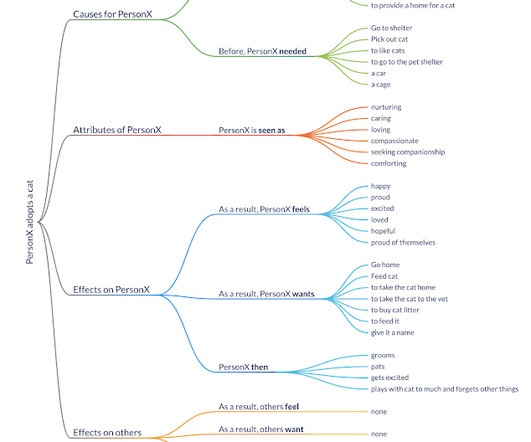

Huge transformer models like BERT, GPT-2 and XLNet have set a new standard for accuracy on almost every NLP leaderboard. In a recent talk at Google Berlin, Jacob Devlin described how Google are using his BERT architectures internally. We provide an example component for text categorization.
