How to Run LLM Locally Using LM Studio?

Analytics Vidhya

JULY 23, 2024

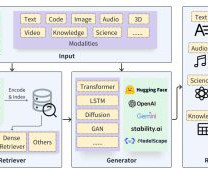

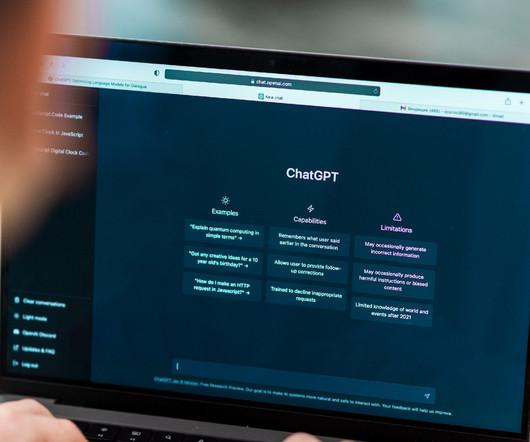

In this article, we’ll dive into how to run an LLM locally using LM Studio, a tool that makes the process easier. We’ll walk through the essential steps, explore potential challenges, […] The post “How to Run LLM Locally Using LM Studio?” appeared first on Analytics Vidhya.
