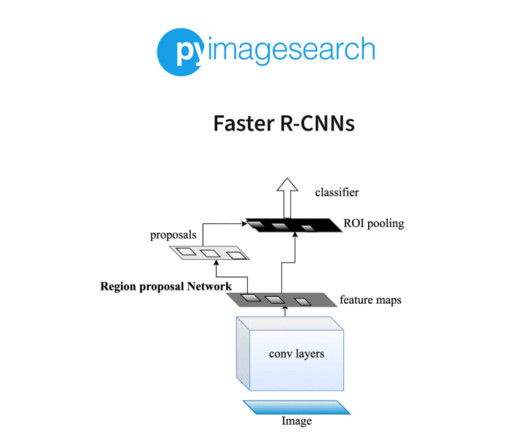

Faster R-CNNs

PyImageSearch

NOVEMBER 13, 2023

You’ll typically find Intersection over Union (IoU) and mean Average Precision (mAP) used to evaluate the performance of HOG + Linear SVM detectors (Dalal and Triggs, 2005), Convolutional Neural Network methods such as Faster R-CNN (Girshick et al., 2015; Redmon and Farhadi, 2016), SSD (Fei-Fei et al., 2015; He et al.), MobileNets, and others.
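To make IoU concrete, here is a minimal Python sketch for two axis-aligned bounding boxes. It assumes each box is given as `(x1, y1, x2, y2)` corner coordinates in continuous units (no "+1 pixel" convention); the function name `intersection_over_union` and the sample boxes are illustrative only, not taken from the original article or from any library.

```python
def intersection_over_union(box_a, box_b):
    """Return the IoU of two axis-aligned boxes given as (x1, y1, x2, y2).

    Assumption: x2 > x1 and y2 > y1, with continuous coordinates.
    """
    # Corners of the intersection rectangle
    x_left = max(box_a[0], box_b[0])
    y_top = max(box_a[1], box_b[1])
    x_right = min(box_a[2], box_b[2])
    y_bottom = min(box_a[3], box_b[3])

    # Clamp width and height to zero when the boxes do not overlap
    inter_w = max(0.0, x_right - x_left)
    inter_h = max(0.0, y_bottom - y_top)
    inter_area = inter_w * inter_h

    # Union = area(A) + area(B) - area(A ∩ B)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union_area = area_a + area_b - inter_area

    # Guard against degenerate (zero-area) boxes
    return inter_area / union_area if union_area > 0 else 0.0


if __name__ == "__main__":
    ground_truth = (50, 50, 150, 150)  # hypothetical ground-truth box
    predicted = (100, 100, 200, 200)   # hypothetical detector output
    # Overlap is 50 x 50 = 2500; union is 10000 + 10000 - 2500 = 17500
    print(f"IoU: {intersection_over_union(ground_truth, predicted):.4f}")  # IoU: 0.1429
```

A common convention (for example, in PASCAL VOC evaluation) counts a detection as a true positive when its IoU with a ground-truth box is at least 0.5; mAP is then derived from the precision/recall curve that these matches produce.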
