Commonsense Reasoning for Natural Language Processing

Probably Approximately a Scientific Blog

JANUARY 12, 2021

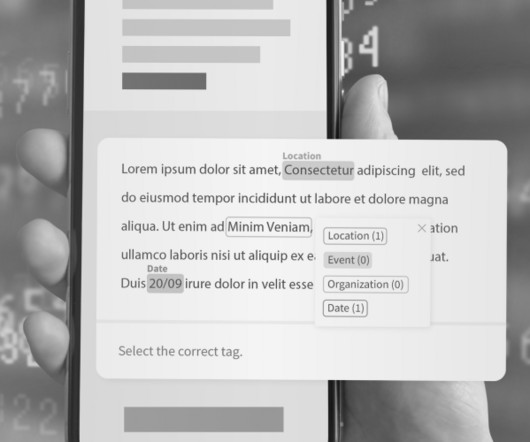

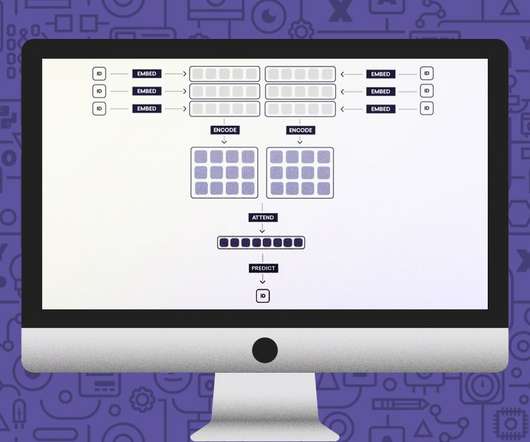

The 2016 release of Google Translate’s neural models came with reports of large performance improvements: “60% reduction in translation errors on several popular language pairs”.

Figure 1: Adversarial examples in computer vision (left) and natural language processing tasks (right).
