Top Computer Vision Tools/Platforms in 2023

Marktechpost

JULY 17, 2023

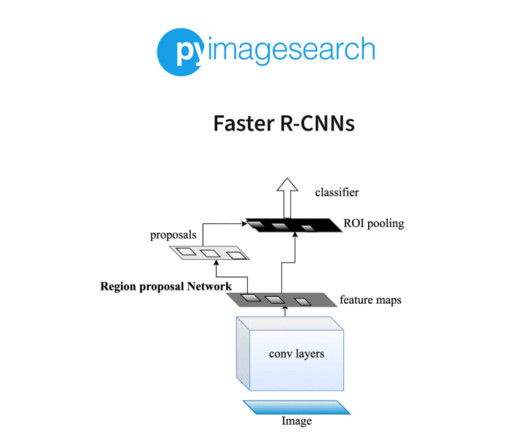

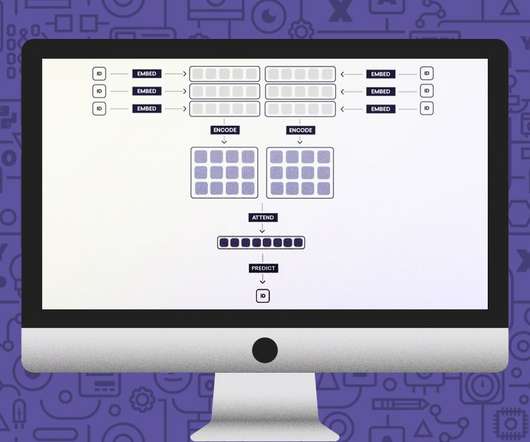

A computer vision system analyzes visual data and then assigns each object in a photo or video to one of a set of predefined categories. TensorFlow, one of the most straightforward computer vision tools, lets users build machine learning models for computer vision tasks such as facial recognition, image classification, object detection, and more.
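To make the categorization step concrete, here is a minimal sketch in plain Python of how raw model scores might be mapped onto predefined categories. It is not TensorFlow code: the label list, the scores, and the `categorize` helper are all hypothetical, and in practice the scores would come from a trained model rather than being typed in by hand.

```python
import math

# Hypothetical predefined categories; these are not from the article.
LABELS = ["cat", "dog", "car"]


def softmax(scores):
    """Convert raw model scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def categorize(scores, labels=LABELS):
    """Return the most probable label and its probability."""
    if len(scores) != len(labels):
        raise ValueError("expected one score per label")
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


# Made-up scores standing in for the output of a trained vision model.
label, confidence = categorize([0.5, 2.0, -1.0])
print(label, round(confidence, 3))
```

The highest score wins, so the second category is selected here. A real pipeline would add image loading, preprocessing, and the model itself in front of this step.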
