Commonsense Reasoning for Natural Language Processing

Probably Approximately a Scientific Blog

JANUARY 12, 2021

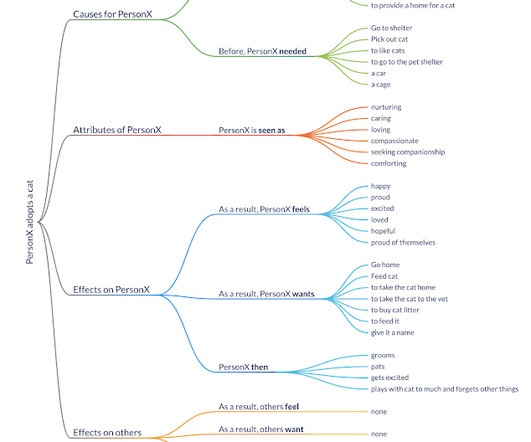

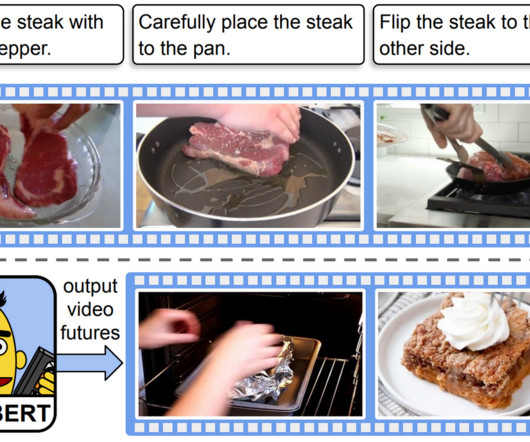

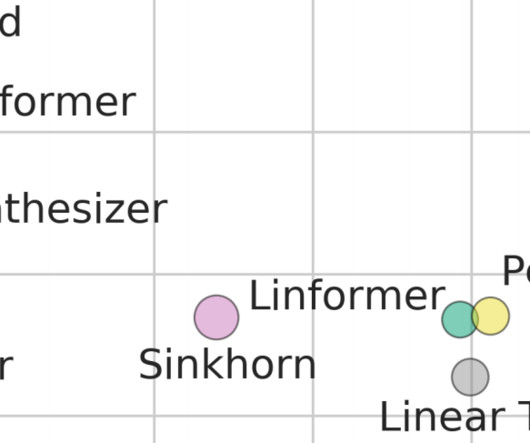

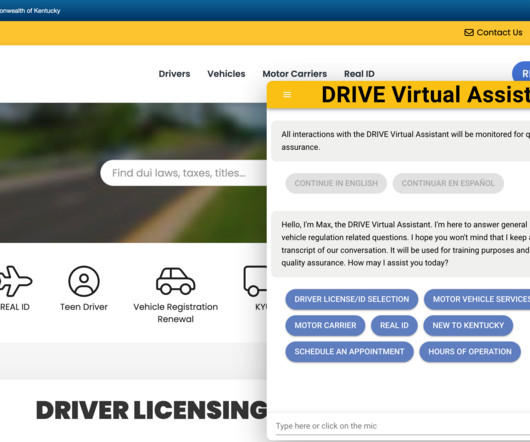

This long-overdue blog post is based on the Commonsense Tutorial taught by Maarten Sap, Antoine Bosselut, Yejin Choi, Dan Roth, and myself at ACL 2020.

Figure 1: Adversarial examples in computer vision (left) and natural language processing tasks (right). Image credit: Lin et al.
