ACL 2021 Highlights

Sebastian Ruder

AUGUST 15, 2021

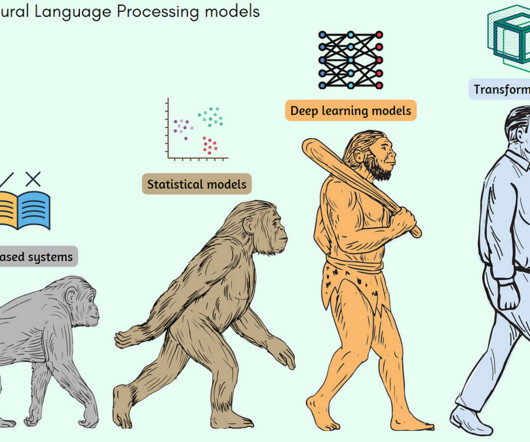

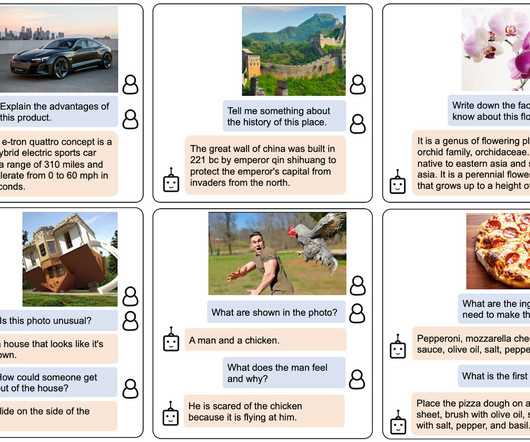

ACL 2021 took place virtually from 1–6 August 2021. These models are essentially all variants of the same Transformer architecture.

Marktechpost

FEBRUARY 17, 2024

2021), Izacard et al. Initially, a Masked Language Modeling Pretraining phase utilized resources like BooksCorpus and a Wikipedia dump from 2023, employing the bert-base-uncased tokenizer to create data chunks suited for long-context training. Recent advancements, as highlighted by Lewis et al. 2022), and Ram et al.

AWS Machine Learning Blog

FEBRUARY 6, 2024

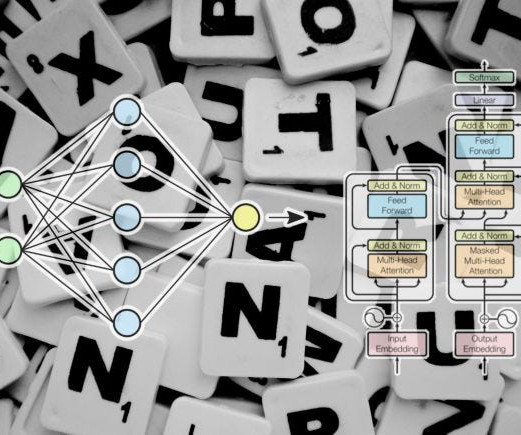

In 2021, the pharmaceutical industry generated $550 billion in US revenue. Transformers, BERT, and GPT: The transformer architecture is a neural network architecture that is used for natural language processing (NLP) tasks. The other data challenge for healthcare customers is HIPAA compliance requirements.

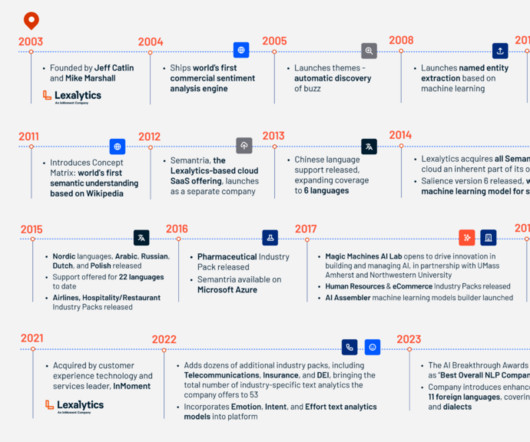

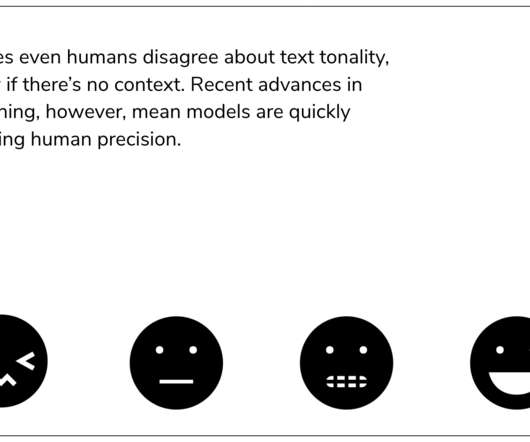

Lexalytics

JULY 12, 2023

And in 2021, we were acquired by leading CX provider InMoment, signaling an acknowledgement in the industry of the growing importance of AI and NLP in understanding and combining all forms of feedback and data.

Mlearning.ai

JUNE 14, 2023

— YouTube Introduction to Sequence Learning and Attention Mechanisms Stanford CS224N: NLP with Deep Learning | Winter 2019 | Lecture 8 — Translation, Seq2Seq, Attention — YouTube Stanford CS224N NLP with Deep Learning | Winter 2021 | Lecture 7 — Translation, Seq2Seq, Attention — YouTube 2. YouTube BERT Research — Ep.

Topbots

APRIL 11, 2023

We’ll start with a seminal BERT model from 2018 and finish with this year’s latest breakthroughs like LLaMA by Meta AI and GPT-4 by OpenAI. BERT by Google Summary In 2018, the Google AI team introduced a new cutting-edge model for Natural Language Processing (NLP) – BERT, or Bidirectional Encoder Representations from Transformers.

Unite.AI

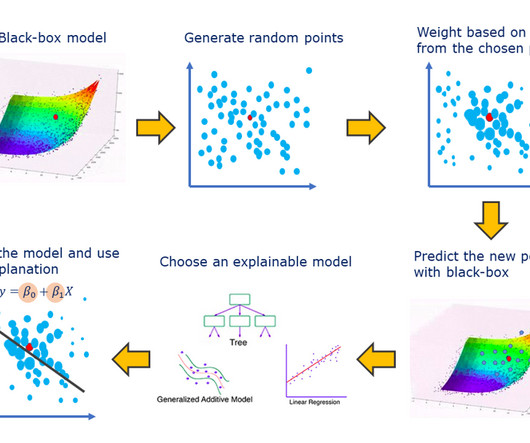

DECEMBER 1, 2023

Flawed Decision Making The opaqueness in the decision-making process of LLMs like GPT-3 or BERT can lead to undetected biases and errors. This presents an inherent tradeoff between scale, capability, and interpretability. Impact of the LLM Black Box Problem 1.

ML @ CMU

FEBRUARY 24, 2023

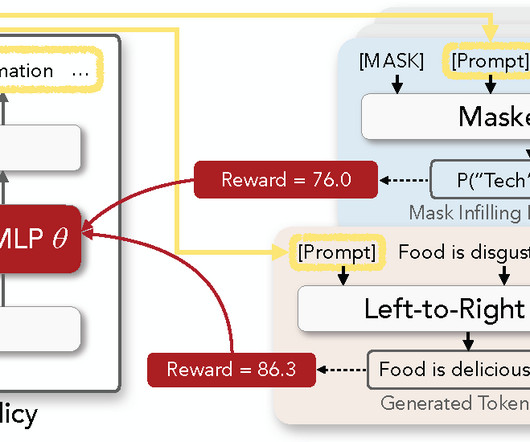

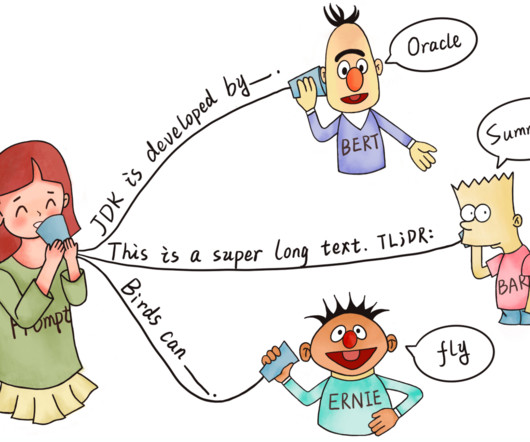

Prompting has emerged as a promising approach to solving a wide range of NLP problems using large pre-trained language models (LMs), including left-to-right models such as GPTs and masked LMs such as BERT, RoBERTa, etc. BERT and GPTs) for both classification and generation tasks. Webson and Pavlick (2021), Zhao et al.,

Mlearning.ai

MARCH 2, 2023

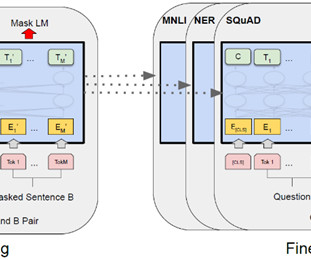

Pre-training of Deep Bidirectional Transformers for Language Understanding BERT is a language model that can be fine-tuned for various NLP tasks and at the time of publication achieved several state-of-the-art results. Finally, the impact of the paper and applications of BERT are evaluated from today’s perspective. 1 Architecture III.2

Mlearning.ai

APRIL 8, 2023

Popular examples include the Bidirectional Encoder Representations from Transformers (BERT) model and the Generative Pre-trained Transformer 3 (GPT-3) model. 2017) “BERT: Pre-training of deep bidirectional transformers for language understanding” by Devlin et al. 2018) “Language models are few-shot learners” by Brown et al.

Towards AI

JULY 20, 2023

BioBERT and similar BERT-based NER models are trained and fine-tuned using a biomedical corpus (or dataset) such as NCBI Disease, BC5CDR, or Species-800. Additionally, data extraction can be more difficult to automate than other SLR elements. a text file with one word per line).

Mlearning.ai

OCTOBER 2, 2023

Famous models like BERT and others begin their journey with initial training on massive datasets encompassing vast swaths of internet text. ArXiv, 2021, /abs/2106.10199. So, fine-tuning is a way to improve model performance by training on specific examples of prompts and desired responses. Attention Is All You Need.”

Sebastian Ruder

JANUARY 24, 2022

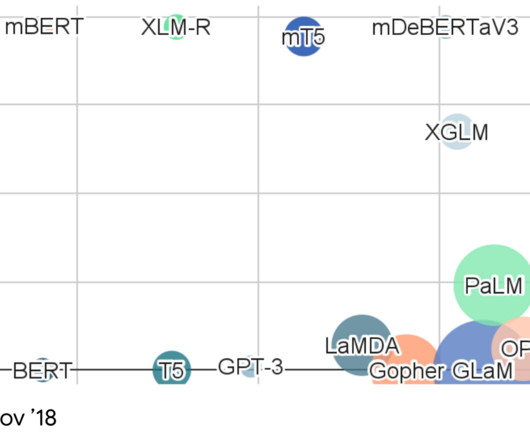

2021) 2021 saw many exciting advances in machine learning (ML) and natural language processing (NLP). 2021 saw the continuation of the development of ever larger pre-trained models. 6] such as W2v-BERT [7] as well as more powerful multilingual models such as XLS-R [8]. Credit for the title image: Liu et al.

Mlearning.ai

FEBRUARY 15, 2023

transformer.ipynb” uses the BERT architecture to classify the behaviour type for a conversation uttered by therapist and client, i.e, The fourth model which is also used for multi-class classification is built using the famous BERT architecture. The architecture of BERT is represented in Figure 14. 438 therapist_input 0.60

NVIDIA Developer

JUNE 2, 2021

Transformer-based models, such as Bidirectional Encoder Representations from Transformers (BERT), have revolutionized NLP by offering accuracy comparable to human baselines on benchmarks like SQuAD for question answering, entity recognition, intent recognition, sentiment analysis, and more.

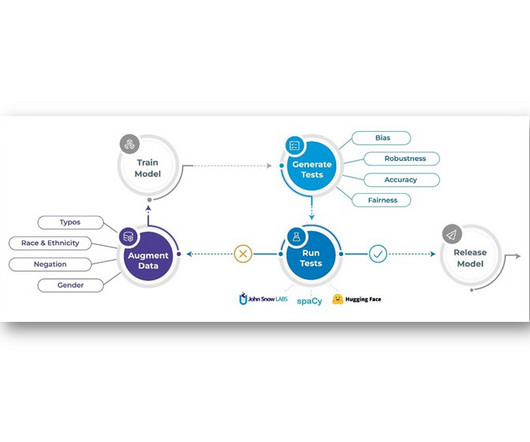

John Snow Labs

MAY 15, 2023

2021 ] – sometimes changing the likely answer more than 80% of the time. 2021 ] is the mislabeling of training examples. In addition, state-of-the-art question answering models have been shown to exhibit biases around race, gender, physical appearance, disability, and religion [ Parrish et. Finally, [ van Aken et.

Bugra Akyildiz

DECEMBER 18, 2022

Dragon is a new foundation model (improvement of BERT) that is pre-trained jointly from text and knowledge graphs for improved language, knowledge and reasoning capabilities. Dragon can be used as a drop-in replacement for BERT. The following is my hypothesis- 🧵⬇

Heartbeat

AUGUST 2, 2023

Large language models, such as GPT-3 (Generative Pre-trained Transformer 3), BERT, XLNet, and Transformer-XL, etc., It has become the backbone of many successful language models, like GPT-3, BERT, and their variants. They are usually trained on a massive amount of text data. Benefits of Using Language Models 1.

Sebastian Ruder

NOVEMBER 14, 2022

Research models such as BERT and T5 have become much more accessible while the latest generation of language and multi-modal models are demonstrating increasingly powerful capabilities. For a recent study [3] , we similarly reviewed papers from ACL 2021 and found that almost 70% of papers only evaluate on English. 2020) (Ahia et al.,

Applied Data Science

JANUARY 4, 2022

Hiding your 2021 resolution list under a glass of champagne? To write this post we shook the internet upside down for industry news and research breakthroughs and settled on the following 5 themes, to wrap up 2021 in a neat bow: ? In 2021, the following were added to the ever growing list of Transformer applications.

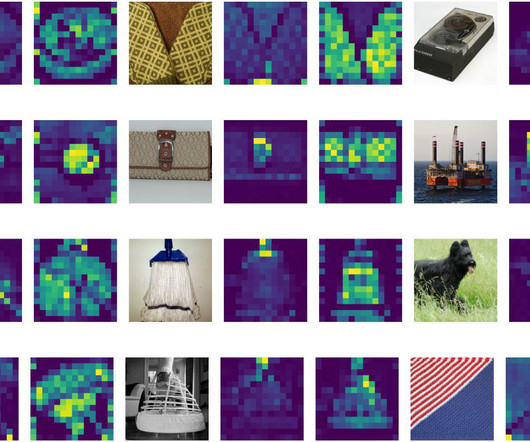

Jay Alammar

JANUARY 18, 2021

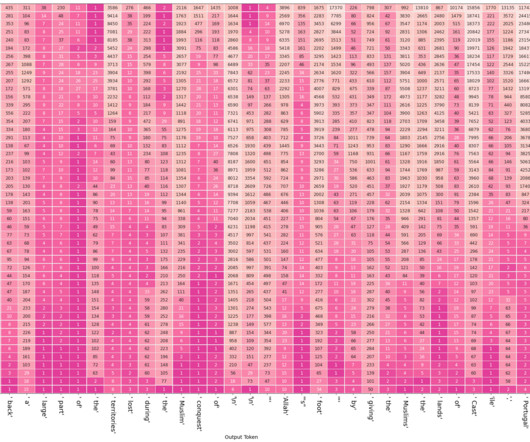

This is likely a similar effect to that observed in BERT of the final layer being the most task-specific. Retrieved from [link] BibTex: @misc{alammar2021hiddenstates, title={Finding the Words to Say: Hidden State Visualizations for Language Models}, author={Alammar, J}, year={2021}, url={[link] }

AWS Machine Learning Blog

DECEMBER 4, 2023

An important aspect of our strategy has been the use of SageMaker and AWS Batch to refine pre-trained BERT models for seven different languages. Fine-tuning multilingual BERT models with AWS Batch GPU jobs We sought a solution to support multiple languages for our diverse user base.

IBM Journey to AI blog

AUGUST 1, 2023

The term “foundation model” was coined by the Stanford Institute for Human-Centered Artificial Intelligence in 2021. BERT (Bi-directional Encoder Representations from Transformers) is one of the earliest LLM foundation models developed. Google created BERT, an open-source model, in 2018.

Applied Data Science

DECEMBER 23, 2022

Then came the Transformer architecture, which solved the issue of long-range dependencies, and, along with it, the family of BERT models, GPT-2 and its larger successor, GPT-3. This trend started in 2021, with OpenAI Codex, a GPT-3 based tool. The debate was on again: maybe language generation is really just a prediction task?

Snorkel AI

MARCH 1, 2023

BERT BERT, an acronym that stands for “Bidirectional Encoder Representations from Transformers,” was one of the first foundation models and pre-dated the term by several years. BERT proved useful in several ways, including quantifying sentiment and predicting the words likely to follow in unfinished sentences.

AssemblyAI

NOVEMBER 8, 2023

We’ve used the DistilBertTokenizer , which inherits from the BERT WordPiece tokenization scheme. 2021 (Google Research) propose an approach to Truecasing that hierarchically exploits word and character-level encoding. 2021 shared a pretty comprehensive survey on punctuation and casing restoration. billion words).

Sebastian Ruder

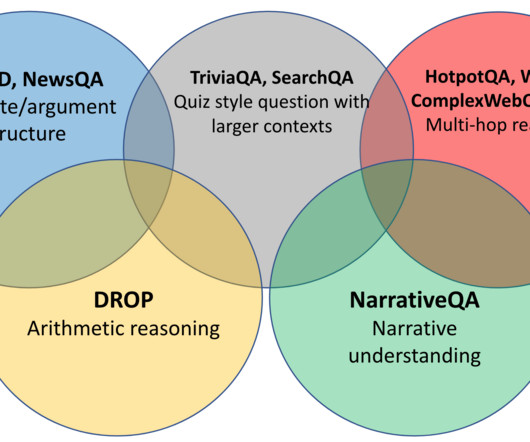

DECEMBER 6, 2021

This post expands on the EMNLP 2021 tutorial on Multi-domain Multilingual Question Answering. Reading Comprehension assumes a gold paragraph is provided Standard approaches for reading comprehension build on pre-trained models such as BERT. 2021 ), among others. The tutorial was organised by Avi Sil and me.

The Stanford AI Lab Blog

MAY 31, 2022

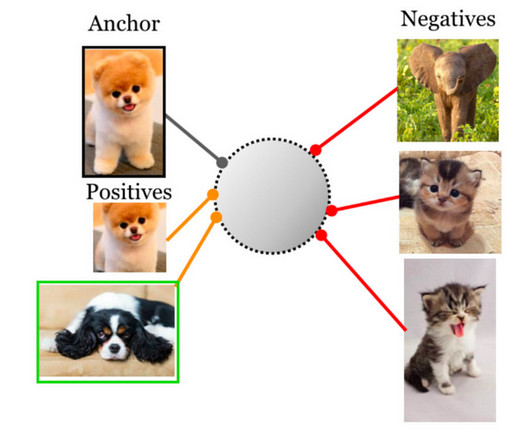

Language Model Pretraining Language models (LMs), like BERT 1 and the GPT series 2 , achieve remarkable performance on many natural language processing (NLP) tasks. To achieve this, we first chunk each document into segments of roughly 256 tokens, which is half of the maximum BERT LM input length.
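The chunking step described above can be sketched as follows. This is a minimal illustration under stated assumptions: whitespace-split words stand in for the subword tokens a BERT tokenizer would produce, and the helper name `chunk_tokens` is hypothetical rather than taken from the original post, which does not show how segment boundaries are handled.

```python
from typing import List


def chunk_tokens(tokens: List[str], segment_len: int = 256) -> List[List[str]]:
    """Split a token list into consecutive segments of at most segment_len tokens."""
    if segment_len <= 0:
        raise ValueError("segment_len must be positive")
    return [tokens[i:i + segment_len] for i in range(0, len(tokens), segment_len)]


# Toy document of 600 "tokens" (assumption: whitespace tokens, not WordPiece).
document = ("word " * 600).split()
segments = chunk_tokens(document)
print([len(s) for s in segments])  # [256, 256, 88]
```

With 256-token segments, two segments fit into a 512-token input, which matches the "half of the maximum BERT LM input length" remark in the excerpt.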

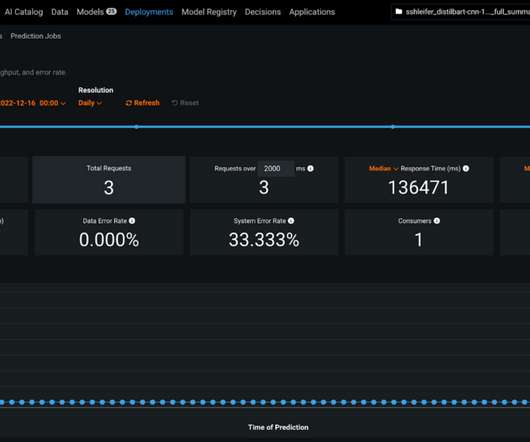

DataRobot Blog

FEBRUARY 2, 2023

As an example, getting started with a BERT model for question answering (bert-large-uncased-whole-word-masking-finetuned-squad) is as easy as executing these lines: !pip writefile $BASE_PATH/custom.py """ Copyright 2021 DataRobot, Inc. Let’s create a JSON to ask a question to our question-answering BERT model.

The Stanford AI Lab Blog

JANUARY 21, 2022

2021 with natural language descriptions for each video. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. RoBERTa: A Robustly Optimized BERT Pretraining Approach. DistilBERT, a distilled version of BERT: smaller, faster, cheaper and lighter. Chen, Suraj Nair, Chelsea Finn. Neumann, M.,

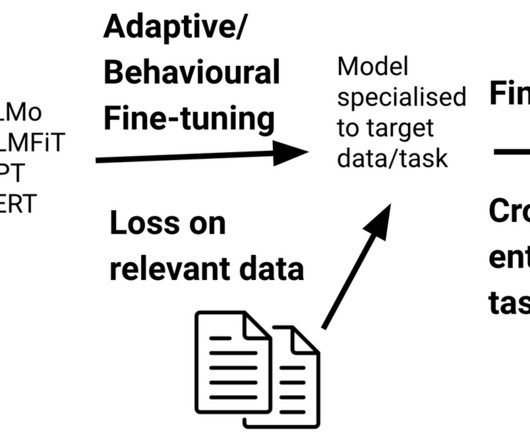

Sebastian Ruder

FEBRUARY 24, 2021

2021) fine-tune on around 50 labelled datasets in a massively multi-task setting and observe that a large, diverse collection of tasks is important for good transfer performance. For instance, Dou and Neubig (2021) fine-tune a model for word alignment with an objective that teaches it to identify parallel sentences, among others.

NVIDIA Developer

MAY 20, 2021

Chung , posted May 21 2021 at 12:03AM Businesses worldwide are using artificial intelligence to solve their greatest challenges. Languages: English Overview Title: Fundamentals of Deep Learning Date: Thursday, June 10, 2021, 09:00 AM +05 Status: Open Businesses worldwide are using artificial intelligence to solve their greatest challenges.

Towards AI

AUGUST 10, 2023

And in 2021, they unveiled LaMDA — an LLM rivaling the capabilities of GPT-3. Google also has open-source models like BERT, T5, ViT, and EfficientNet for easy deployment on GCP. Access to Google’s AI models is provided through Model Garden, a managed service on the Google Cloud Platform (GCP).

Topbots

JULY 6, 2023

Like other large language models, including BERT and GPT-3, LaMDA is trained on terabytes of text data to learn how words relate to one another and then predict what words are likely to come next. How is the problem approached?

Viso.ai

FEBRUARY 25, 2023

The Vision Transformer (ViT) model architecture was introduced in a paper published at ICLR 2021 titled “An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale”. It was developed and published by Neil Houlsby, Alexey Dosovitskiy, and 10 more authors of the Google Research Brain Team.

John Snow Labs

JUNE 5, 2023

Let's just peek into the pre-BERT world… To create models, we need words to be represented in a form understood by the training network, i.e., numbers. This is called a pre-trained model. This pre-trained model is then fine-tuned for each NLP task as needed.
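As a rough illustration of representing words as numbers, the sketch below maps each word to an integer ID through a vocabulary. The function names (`build_vocab`, `encode`) and the `[UNK]` fallback are assumptions made for this example. Real pipelines typically use subword tokenizers and learned embeddings rather than a plain word-to-ID table.

```python
from typing import Dict, List

UNK = "[UNK]"  # placeholder ID for words never seen when the vocabulary was built


def build_vocab(sentences: List[str]) -> Dict[str, int]:
    """Assign each distinct lowercased word an integer ID in order of first appearance."""
    vocab = {UNK: 0}
    for sentence in sentences:
        for word in sentence.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab


def encode(sentence: str, vocab: Dict[str, int]) -> List[int]:
    """Convert a sentence into a list of IDs, mapping unknown words to UNK."""
    return [vocab.get(word, vocab[UNK]) for word in sentence.lower().split()]


vocab = build_vocab(["the cat sat", "the dog sat"])
print(encode("the dog ran", vocab))  # [1, 4, 0]
```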

Snorkel AI

MARCH 8, 2023

The agenda today is to first learn how to build a unified foundation model, which is the UniT paper from ICCV (International Conference on Computer Vision) 2021. BERT shares this common domain across all of the NLP tasks. As you might know, in the NLP domain, BERT has been the starting foundation model.

Sebastian Ruder

JANUARY 19, 2021

A plethora of language-specific BERT models have been trained for languages beyond English such as AraBERT (Antoun et al., This is similar to findings for distilling an inductive bias into BERT (Kuncoro et al., Powerful multilingual models that cover around 100 languages emerged including XLM-R (Conneau et al., 2020; Rust et al.,

DataRobot Blog

MARCH 9, 2022

The company was acquired by DataRobot in 2021. These embeddings are sometimes trained jointly with the model, but usually additional accuracy can be attained by using pre-trained embeddings such as Word2Vec, GloVe, BERT, or FastText. This article was originally published at Algorithmia’s website. and discern what’s behind it.

Mlearning.ai

JANUARY 17, 2024

Major milestones in the last few years comprised BERT (Google, 2018), GPT-3 (OpenAI, 2020), Dall-E (OpenAI, 2021), Stable Diffusion (Stability AI, LMU Munich, 2022), ChatGPT (OpenAI, 2022).

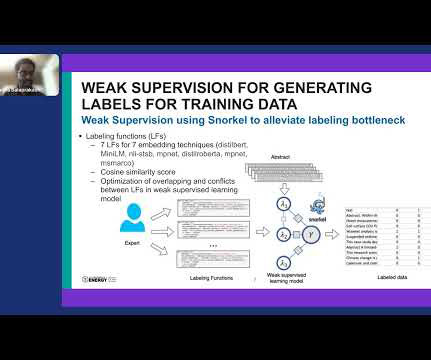

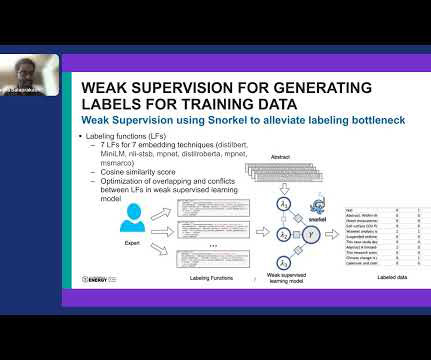

Snorkel AI

APRIL 18, 2023

For that, the teams actually looked into the 2021 IPCC, NASEM, and USGCRP reports. So for each of these high-level climate change hazards, some of these hazards are already reported in the 2021 IPCC report. These are all different reports that talk about various types of climate change and climate change hazards.

Chatbots Life

MAY 12, 2023

There are many approaches to language modelling. For example, we can ask the model to fill in the words in the middle of a sentence (as in the BERT model) or predict which words have been swapped for fake ones (as in the ELECTRA model). ChatGPT's most recent training data is from September 2021.
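The fill-in-the-words objective mentioned above can be illustrated by how its training examples are prepared. The sketch below is a simplification with assumed names (`mask_tokens`, `MASK`): it replaces each selected token with a mask symbol and records the original as the label. BERT's actual recipe additionally leaves some selected tokens unchanged or swaps them for random tokens, which is omitted here.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"


def mask_tokens(
    tokens: List[str], mask_prob: float = 0.15, seed: int = 0
) -> Tuple[List[str], List[Optional[str]]]:
    """Hide each token with probability mask_prob; labels keep the hidden originals."""
    rng = random.Random(seed)  # seeded so the example is reproducible
    masked: List[str] = []
    labels: List[Optional[str]] = []
    for token in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            labels.append(token)  # the model is trained to recover this token
        else:
            masked.append(token)
            labels.append(None)  # position is not scored
    return masked, labels


tokens = "the quick brown fox jumps over the lazy dog".split()
masked, labels = mask_tokens(tokens, mask_prob=0.3)
print(masked)
print(labels)
```

The model only receives `masked` as input and is scored on the positions where `labels` is not `None`.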
