Calibration Techniques in Deep Neural Networks

Heartbeat

JUNE 14, 2023

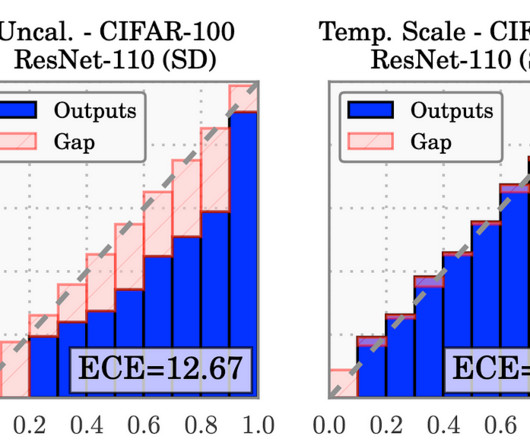

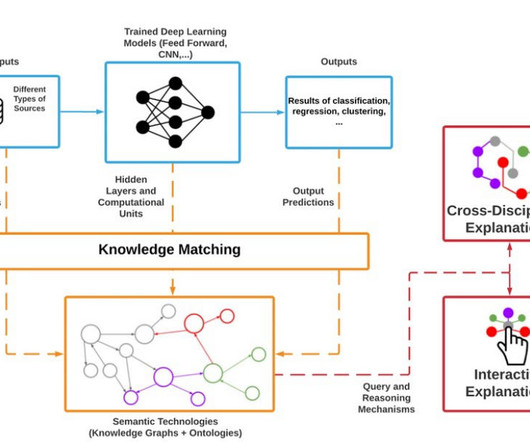

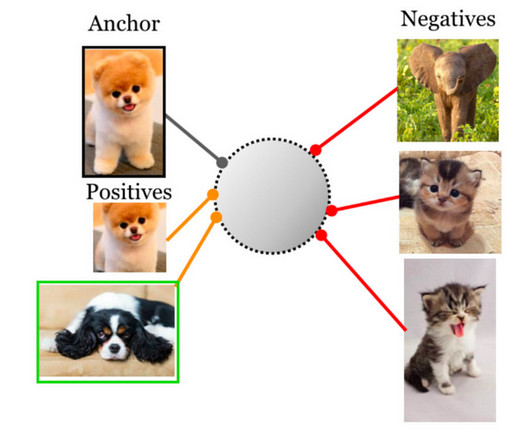

Introduction

Deep neural network classifiers have been shown to be miscalibrated [1], i.e., their predicted probabilities are not reliable confidence estimates. For example, if a neural network classifies an image as a “dog” with probability p, then p cannot be taken at face value as the network’s confidence in that prediction: among all images that receive probability p, the fraction that are actually classified correctly can differ substantially from p.
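To make the idea of miscalibration concrete, the sketch below measures the gap between predicted confidence and observed accuracy using a binned estimate often called the expected calibration error (ECE). The text above does not name this metric, so its use here is an assumption. The function name `expected_calibration_error`, the choice of ten equal-width bins, and the toy data are all hypothetical and chosen only for illustration.

```python
import numpy as np


def expected_calibration_error(confidences, correct, n_bins=10):
    """Binned estimate of the gap between confidence and accuracy.

    confidences: predicted probability of the predicted class, one per sample.
    correct: 1 if the prediction was right, 0 otherwise, one per sample.
    n_bins: number of equal-width confidence bins (an illustrative default).
    """
    confidences = np.asarray(confidences, dtype=float)
    correct = np.asarray(correct, dtype=float)
    bin_edges = np.linspace(0.0, 1.0, n_bins + 1)

    ece = 0.0
    for lower, upper in zip(bin_edges[:-1], bin_edges[1:]):
        # Samples whose confidence falls in the half-open bin (lower, upper].
        in_bin = (confidences > lower) & (confidences <= upper)
        if not in_bin.any():
            continue
        bin_accuracy = correct[in_bin].mean()
        bin_confidence = confidences[in_bin].mean()
        # Weight each bin's gap by the fraction of samples it contains.
        ece += in_bin.mean() * abs(bin_accuracy - bin_confidence)
    return ece


# Toy data (made up): ten predictions, each made with probability 0.95,
# of which only six are correct. The model is overconfident.
toy_confidences = [0.95] * 10
toy_correct = [1] * 6 + [0] * 4
print(expected_calibration_error(toy_confidences, toy_correct))
```

In this toy case every prediction lands in the same bin, so the result is simply the difference between the stated confidence (0.95) and the observed accuracy (0.6). A well-calibrated classifier would give a value close to zero.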
