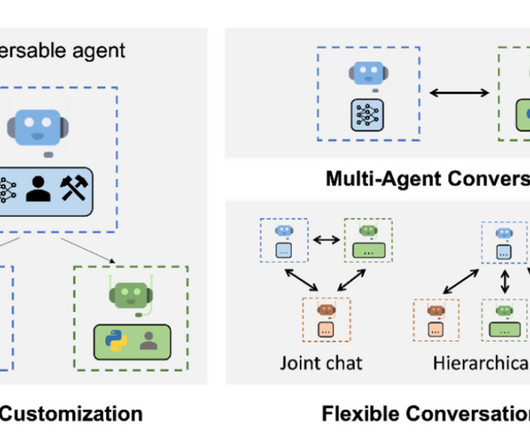

AutoGen: Powering Next Generation Large Language Model Applications

Unite.AI

OCTOBER 18, 2023

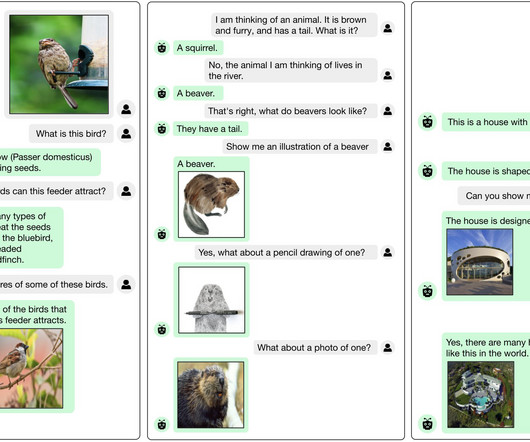

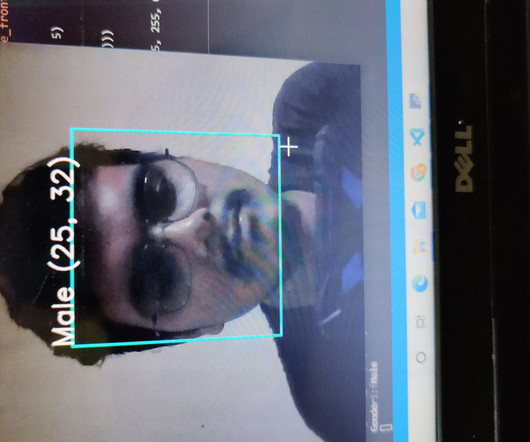

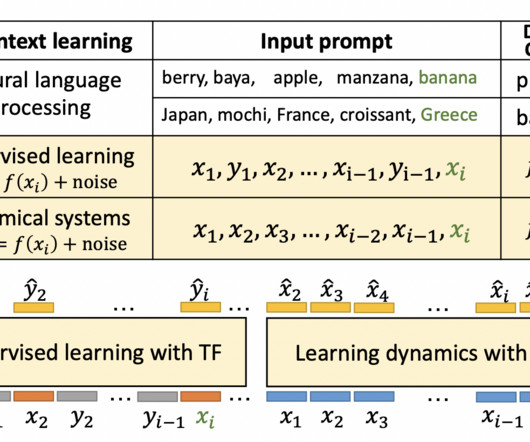

Large Language Models (LLMs) are currently one of the most discussed topics in mainstream AI. Developers worldwide are exploring the potential applications of LLMs. Large language models are intricate AI algorithms.

Let's personalize your content