Can We Optimize Large Language Models More Efficiently? Check Out this Comprehensive Survey of Algorithmic Advancements in LLM Efficiency

Marktechpost

DECEMBER 7, 2023

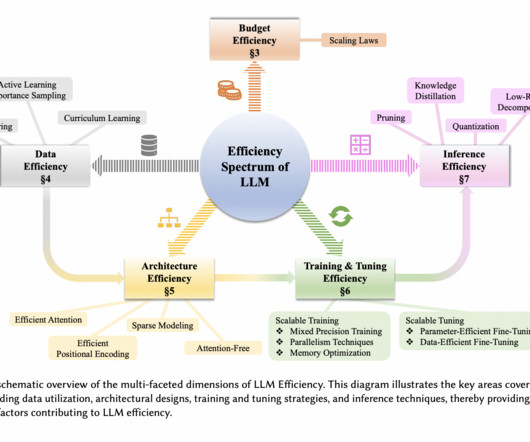

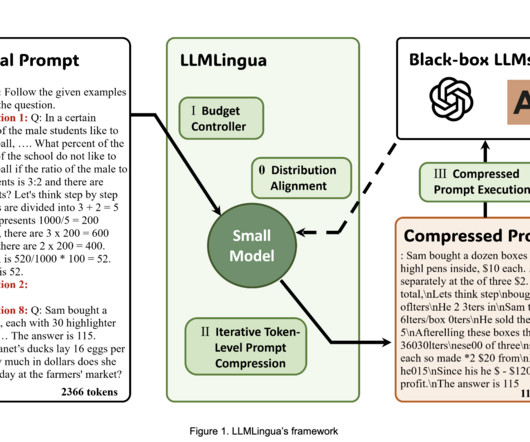

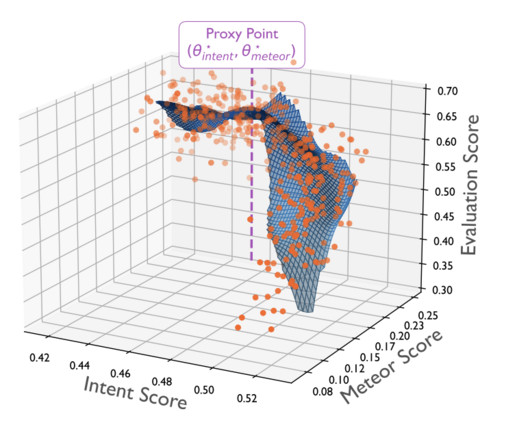

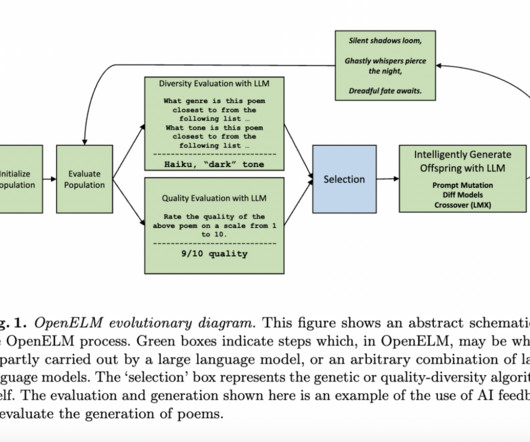

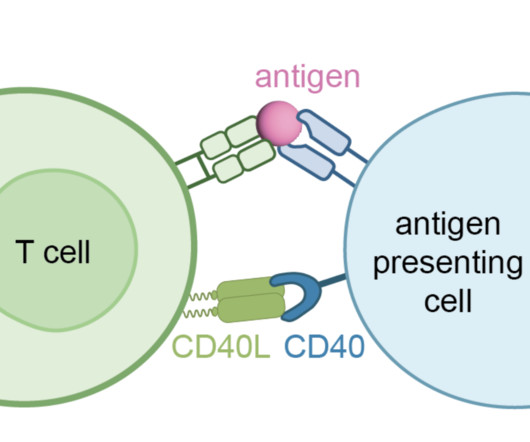

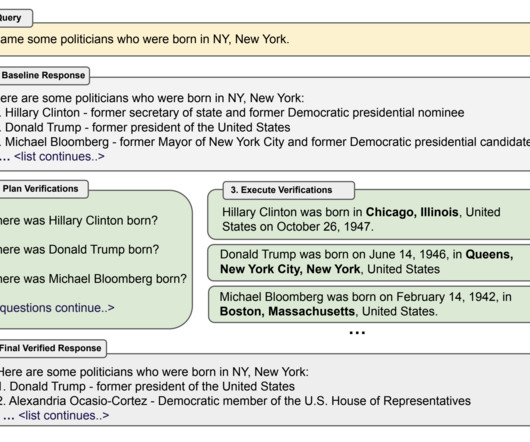

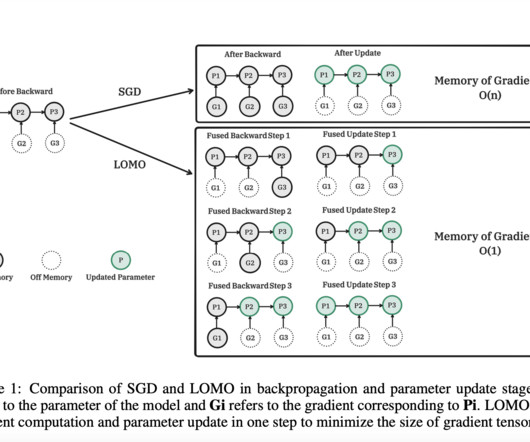

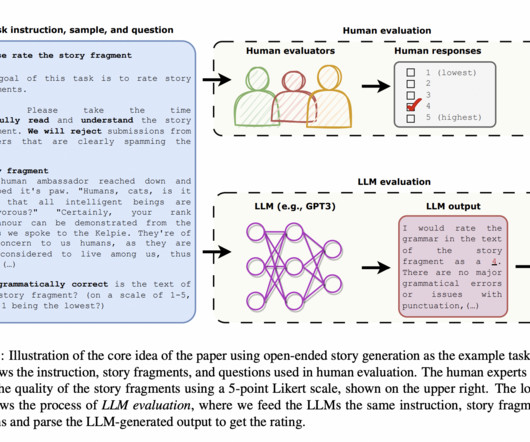

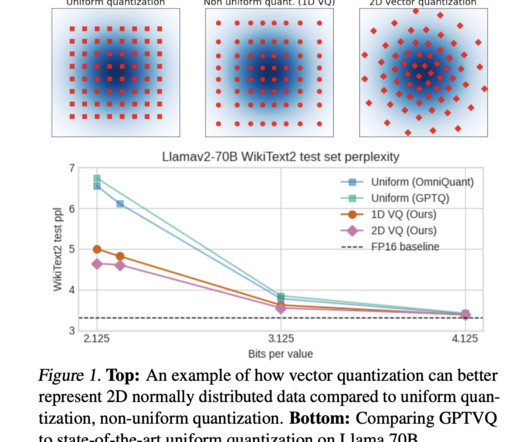

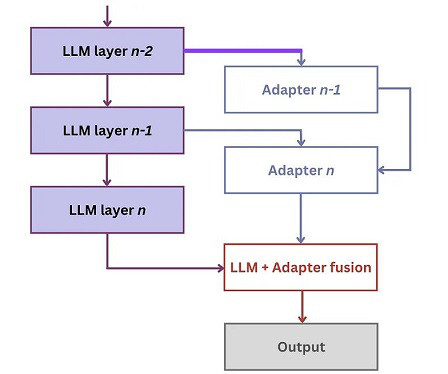

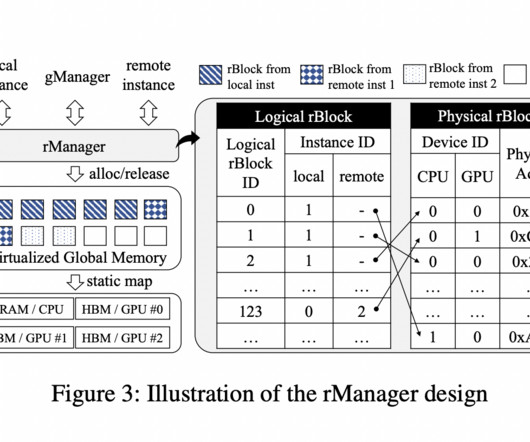

Can we optimize large language models (LLMs) more efficiently? Researchers continue to make algorithmic advances that improve the efficiency of LLMs and make them more accessible. This study surveys those advances.
