A New Study from the University of Wisconsin Investigates How Small Transformers Trained from Random Initialization can Efficiently Learn Arithmetic Operations Using the Next Token Prediction Objective

Marktechpost

JULY 13, 2023

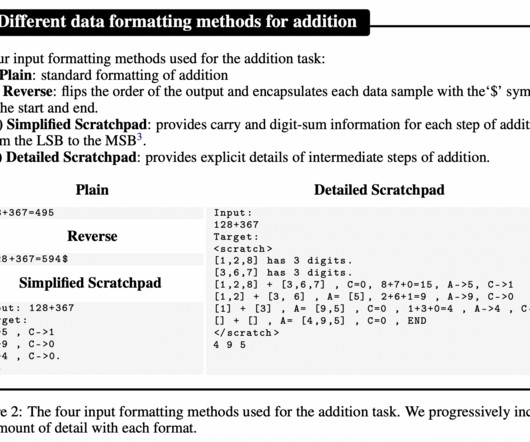

Perhaps surprisingly, the model's training objective, typically an autoregressive loss on next-token prediction, does not directly encode skills such as arithmetic. Earlier studies have explored these skills in depth, along with how they change with training compute, data type, and model size.
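To make the next-token objective concrete, here is a minimal Python sketch of how a single arithmetic string could be turned into (context, target) training pairs. It assumes character-level tokenization and uses a made-up sample, `12+34=46`; neither is confirmed as the study's setup. The helper names `next_token_pairs` and `uniform_next_token_loss` are hypothetical. The loss is computed for a placeholder model that guesses uniformly over the vocabulary, simply to show what the objective measures before any learning.

```python
import math

def next_token_pairs(text):
    """Return (context, target) pairs for character-level next-token prediction."""
    return [(text[:i], text[i]) for i in range(1, len(text))]

def uniform_next_token_loss(text, vocab):
    """Average cross-entropy (in nats) of a model that assigns equal
    probability to every token in vocab at every position."""
    pairs = next_token_pairs(text)
    per_token = -math.log(1.0 / len(vocab))  # same loss at each position
    return sum(per_token for _ in pairs) / len(pairs)

# Hypothetical training sample and vocabulary (assumptions, not from the study).
sample = "12+34=46"
vocab = sorted(set("0123456789+="))

pairs = next_token_pairs(sample)
loss = uniform_next_token_loss(sample, vocab)

for context, target in pairs:
    print(f"{context!r} -> {target!r}")
print(f"uniform-guess loss: {loss:.4f} nats")
```

Nothing in the pairs tells the model that `+` means addition. The objective only rewards predicting the next character, which is why arithmetic ability has to arise indirectly from the data.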
