Supercharging Graph Neural Networks with Large Language Models: The Ultimate Guide

Unite.AI

MAY 8, 2024

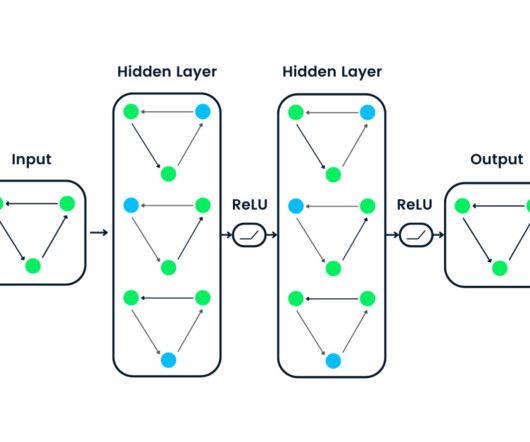

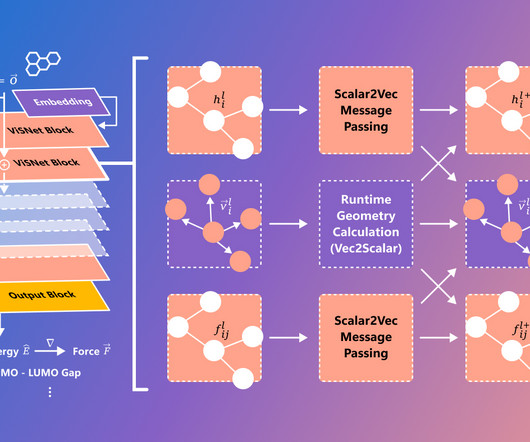

The ability to effectively represent and reason about these intricate relational structures is crucial for enabling advancements in fields like network science, cheminformatics, and recommender systems. Graph Neural Networks (GNNs) have emerged as a powerful deep learning framework for graph machine learning tasks.

Let's personalize your content