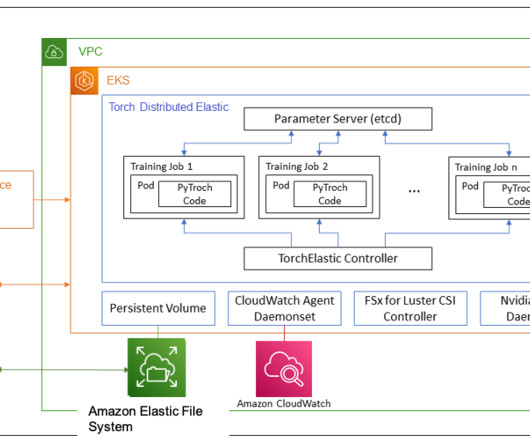

Accelerate hyperparameter grid search for sentiment analysis with BERT models using Weights & Biases, Amazon EKS, and TorchElastic

AWS Machine Learning Blog

MARCH 2, 2023

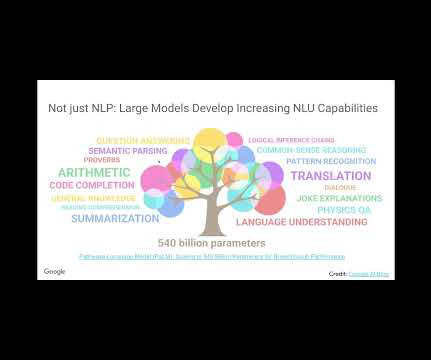

Transformer-based language models such as BERT ( Bidirectional Transformers for Language Understanding ) have the ability to capture words or sentences within a bigger context of data, and allow for the classification of the news sentiment given the current state of the world. W&B Sweeps will automate this kind of exploration.

Let's personalize your content