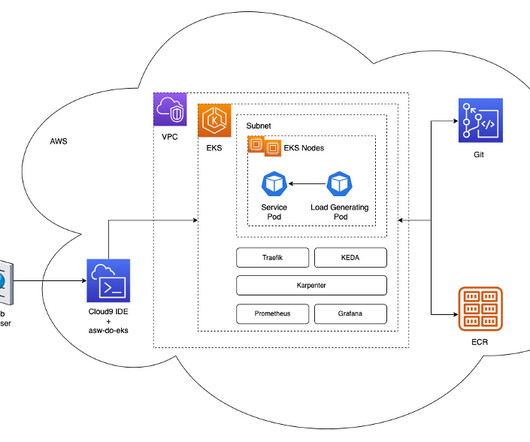

Scale AI training and inference for drug discovery through Amazon EKS and Karpenter

AWS Machine Learning Blog

APRIL 19, 2024

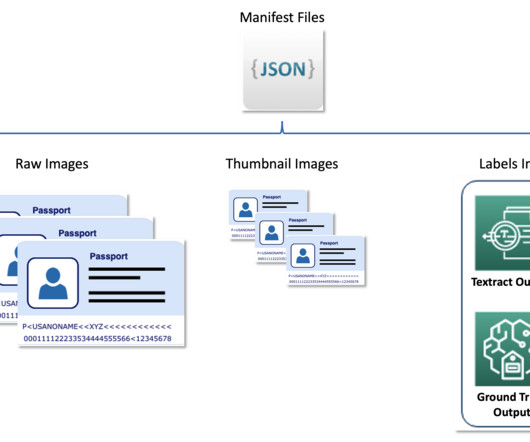

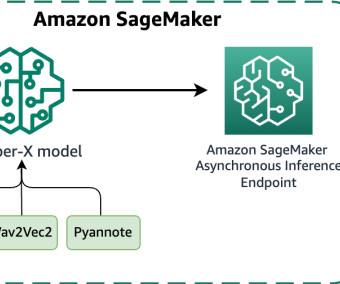

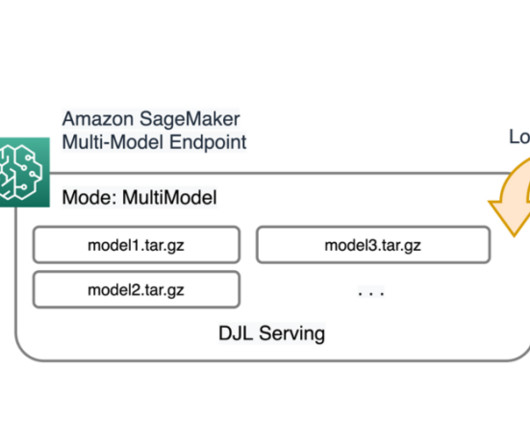

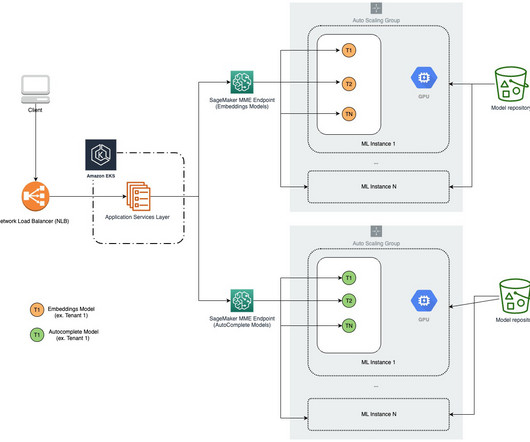

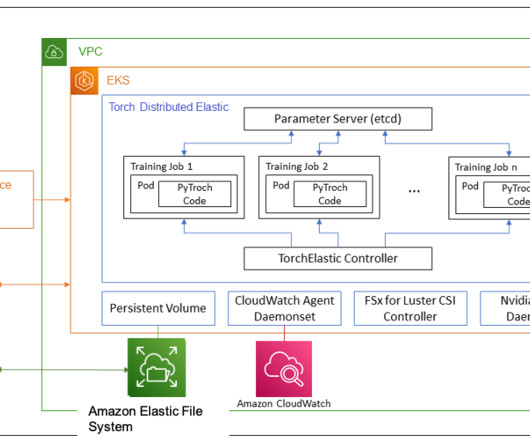

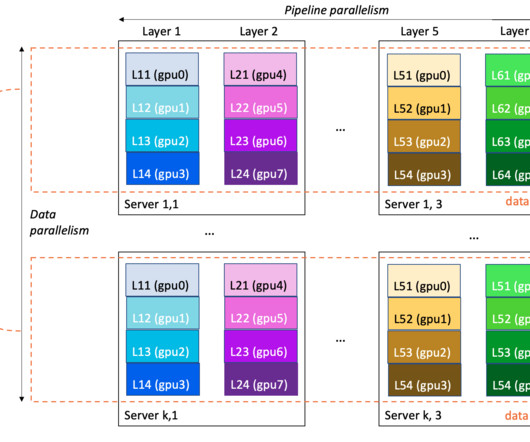

The platform both enables our AI—by supplying data to refine our models—and is enabled by it, capitalizing on opportunities for automated decision-making and data processing. Our deep learning models have non-trivial requirements: they are gigabytes in size, are numerous and heterogeneous, and require GPUs for fast inference and fine-tuning.
