Create a document lake using large-scale text extraction from documents with Amazon Textract

AWS Machine Learning Blog

JANUARY 8, 2024

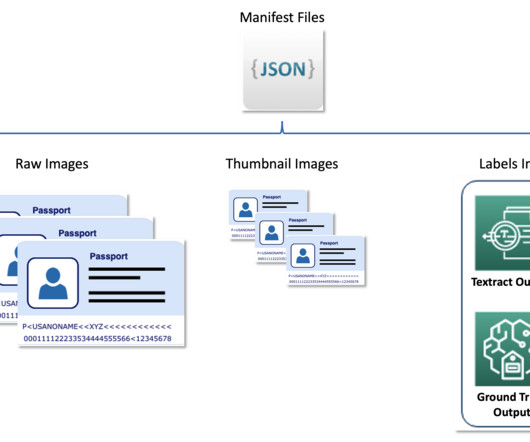

When the script finishes, it returns a completion status and the elapsed time to the SageMaker Studio console. The resulting JSON files contain all of the Amazon Textract metadata, including the text extracted from the documents.
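The post does not show the layout of these JSON files. The following is a minimal sketch of how the extracted text could be pulled back out of them. It assumes that each file stores a raw Textract response (a top-level `Blocks` list in which `LINE` blocks carry a `Text` field and, for multi-page documents, a `Page` number), or a list of such responses from paginated calls. The helper names `iter_blocks`, `extract_lines`, `pages_to_text`, and `load_text` are hypothetical and are not part of the original script.

```python
import json
from collections import defaultdict


def iter_blocks(data):
    """Yield Textract blocks from one response dict or a list of paginated responses.

    Assumption: the saved file holds either a single response or a list of them.
    """
    responses = data if isinstance(data, list) else [data]
    for response in responses:
        yield from response.get("Blocks", [])


def extract_lines(data):
    """Group the Text of LINE blocks by page number, preserving response order."""
    pages = defaultdict(list)
    for block in iter_blocks(data):
        if block.get("BlockType") == "LINE":
            # Default to page 1 in case a response omits the Page field.
            pages[block.get("Page", 1)].append(block.get("Text", ""))
    return dict(pages)


def pages_to_text(pages):
    """Join lines with newlines and separate pages with a blank line."""
    return "\n\n".join("\n".join(pages[p]) for p in sorted(pages))


def load_text(path):
    """Read one saved Textract JSON file and return its plain text."""
    with open(path, encoding="utf-8") as f:
        return pages_to_text(extract_lines(json.load(f)))
```

For example, `load_text("output/example.json")` (a hypothetical path) would return the document's lines in the order Textract reported them, with a blank line between pages. Filtering on `LINE` rather than `WORD` blocks keeps Textract's own line grouping and avoids emitting every word twice.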
