Host ML models on Amazon SageMaker using Triton: TensorRT models

AWS Machine Learning Blog

MAY 8, 2023

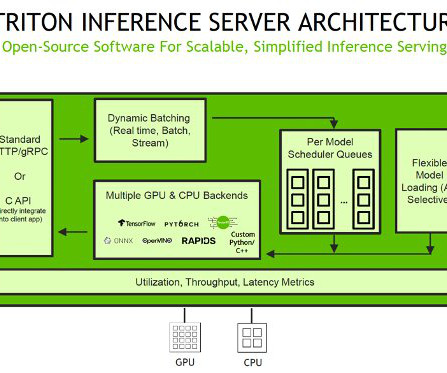

TensorRT is an SDK developed by NVIDIA that provides a high-performance deep learning inference library. It’s optimized for NVIDIA GPUs and accelerates deep learning inference in production environments. Triton Inference Server supports TensorRT as one of its model formats.
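To make the model-format point concrete, here is a minimal sketch of how a Triton model repository for a TensorRT engine is typically laid out. It only writes the directory structure and a `config.pbtxt`; it does not build or load an engine. The model name, tensor names, shapes, and batch size are hypothetical placeholders, not values from this post, and the serialized engine (conventionally `model.plan`) would still need to be copied into the version directory.

```python
from pathlib import Path
import tempfile


def write_tensorrt_model_repo(root, model_name="my_trt_model", max_batch_size=8):
    """Create <root>/<model_name>/1/ and a config.pbtxt for a TensorRT model.

    All names and shapes below are illustrative assumptions; replace them
    with the real bindings of your serialized TensorRT engine.
    """
    model_dir = Path(root) / model_name
    version_dir = model_dir / "1"  # Triton expects numeric version subdirectories
    version_dir.mkdir(parents=True, exist_ok=True)

    # "tensorrt_plan" is the platform string Triton uses for TensorRT engines.
    config = f'''name: "{model_name}"
platform: "tensorrt_plan"
max_batch_size: {max_batch_size}
input [
  {{
    name: "input"
    data_type: TYPE_FP32
    dims: [ 3, 224, 224 ]
  }}
]
output [
  {{
    name: "output"
    data_type: TYPE_FP32
    dims: [ 1000 ]
  }}
]
'''
    config_path = model_dir / "config.pbtxt"
    config_path.write_text(config)
    return config_path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = write_tensorrt_model_repo(tmp)
        print(path.read_text())
```

The engine file itself is specific to the GPU and TensorRT version it was built with, so in practice it is usually built on the same GPU type as the target instance and then placed at `<model_name>/1/model.plan`.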
