Basil Faruqui, BMC: Why DataOps needs orchestration to make it work

AI News

AUGUST 29, 2023

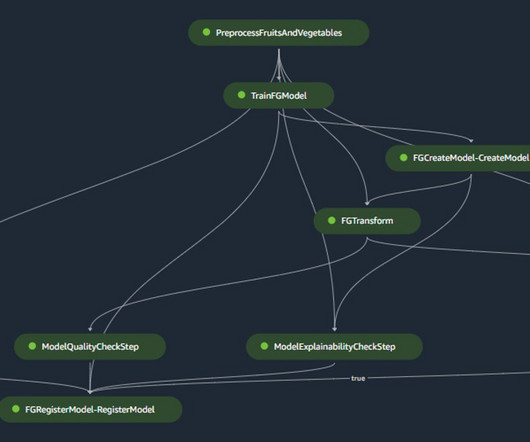

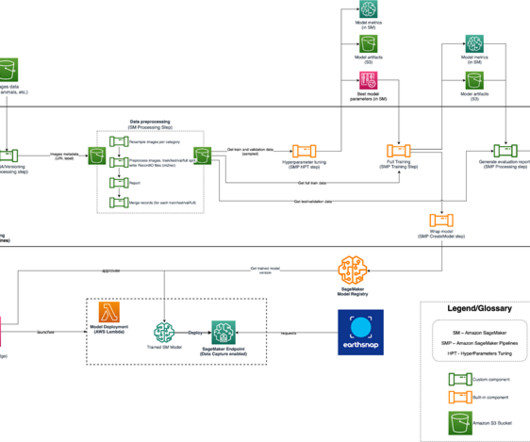

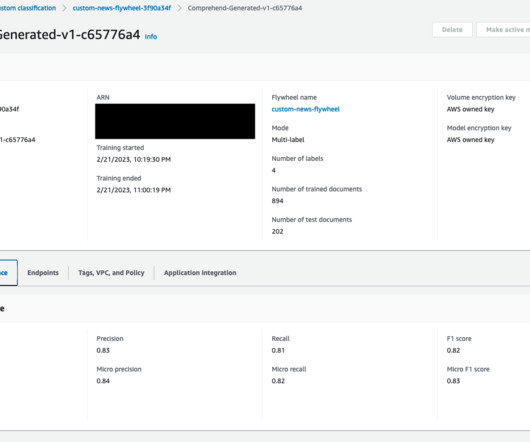

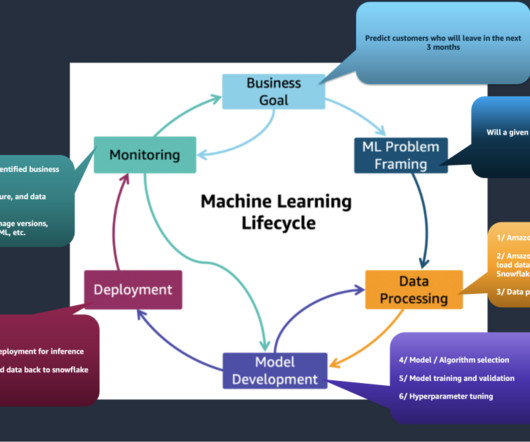

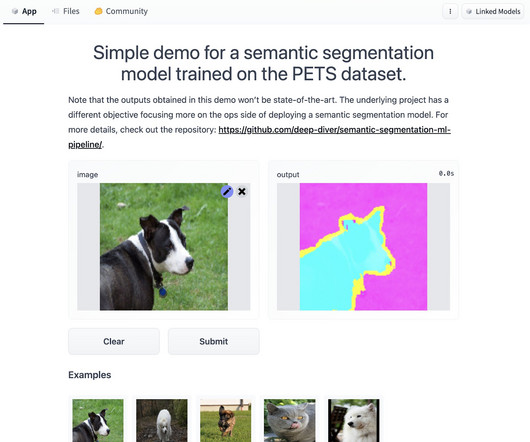

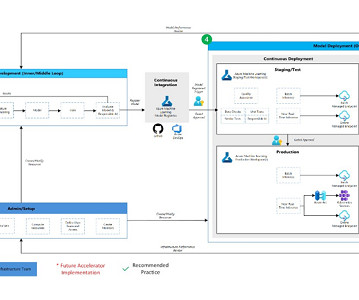

The operationalisation of data projects has been a key factor in helping organisations turn a data deluge into a workable digital transformation strategy, and DataOps carries on from where DevOps started. “And everybody agrees that in production, this should be automated.” “It’s all data driven,” Faruqui explains.
