Most Frequently Asked Azure Data Factory Interview Questions

Analytics Vidhya

FEBRUARY 20, 2023

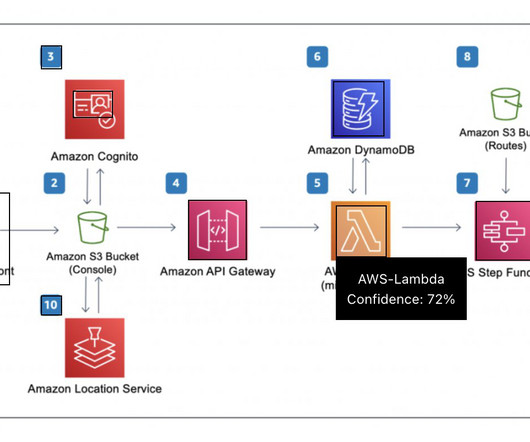

Introduction

Azure Data Factory (ADF) is a cloud-based data ingestion and ETL (Extract, Transform, Load) tool. Its data-driven workflows, called pipelines, orchestrate and automate data movement and data transformation.
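To make the idea of a data-driven workflow concrete, here is a minimal sketch in Python that builds a dictionary shaped like an ADF pipeline definition containing a single Copy activity. The pipeline, activity, and dataset names (`DemoPipeline`, `CopyBlobToSql`, `InputBlobDataset`, `OutputSqlDataset`) and the helper `build_copy_pipeline` are hypothetical and chosen only for illustration; the sketch prints JSON and does not call any Azure service.

```python
import json


def build_copy_pipeline(name, source_dataset, sink_dataset):
    """Return a dict shaped like an ADF pipeline definition with one Copy activity.

    All names passed in are illustrative; the datasets they refer to would
    have to exist in a real data factory.
    """
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "CopyBlobToSql",  # hypothetical activity name
                    "type": "Copy",           # data movement activity
                    "inputs": [
                        {"referenceName": source_dataset, "type": "DatasetReference"}
                    ],
                    "outputs": [
                        {"referenceName": sink_dataset, "type": "DatasetReference"}
                    ],
                    "typeProperties": {
                        "source": {"type": "BlobSource"},
                        "sink": {"type": "SqlSink"},
                    },
                }
            ]
        },
    }


pipeline = build_copy_pipeline("DemoPipeline", "InputBlobDataset", "OutputSqlDataset")
print(json.dumps(pipeline, indent=2))
```

The point of the sketch is that a pipeline is declarative: it lists activities and the datasets they read from and write to, and the service takes care of running them.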
