A General Introduction to Large Language Models (LLMs)

Artificial Corner

JULY 30, 2023

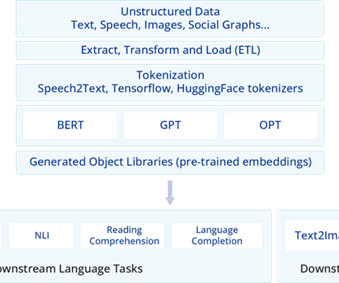

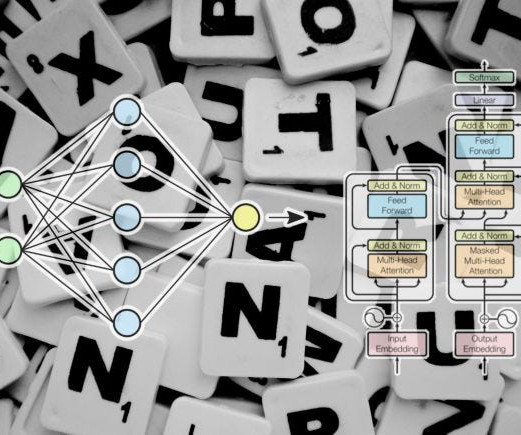

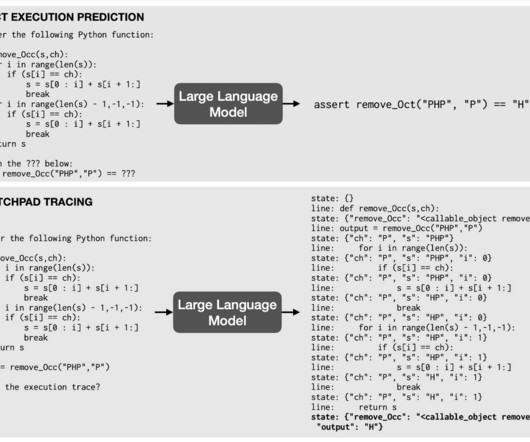

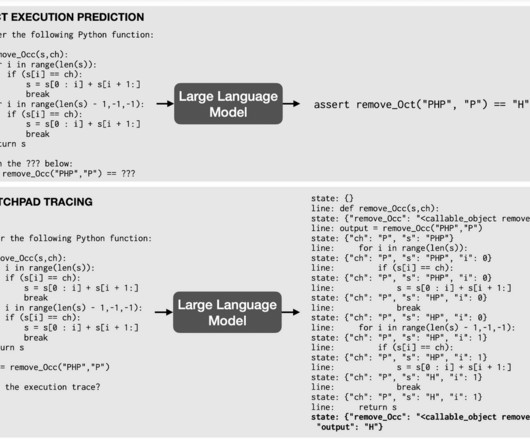

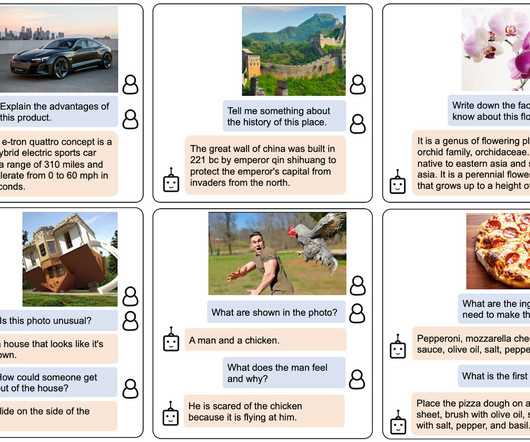

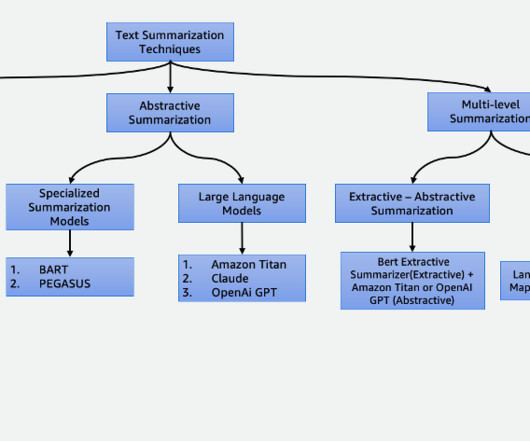

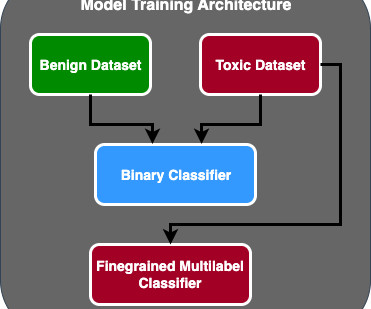

In a world of complex terminology, explaining Large Language Models (LLMs) to a non-technical person is a difficult task. That is why, in this article, I try to explain LLMs in simple, general language. One difference to keep in mind from the start: LLM development does not need training examples, whereas traditional development does.
