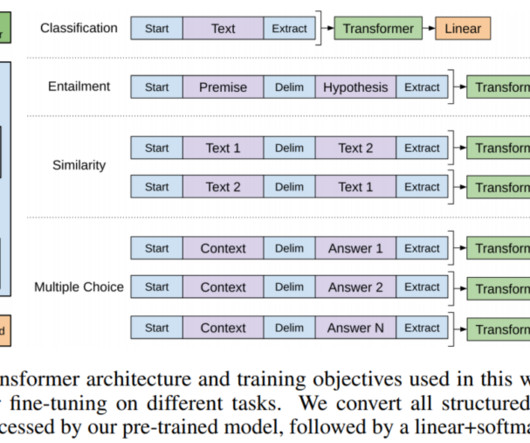

Transfer Learning for NLP: Fine-Tuning BERT for Text Classification

Analytics Vidhya

JULY 20, 2020

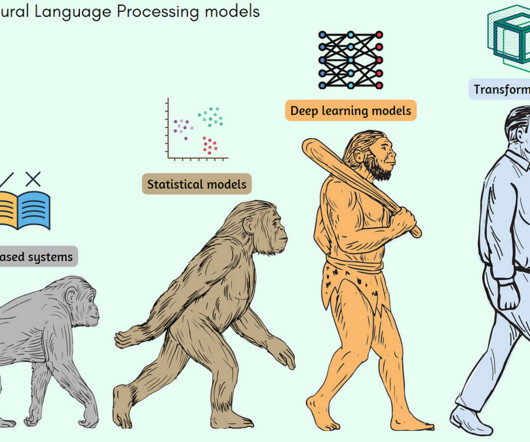

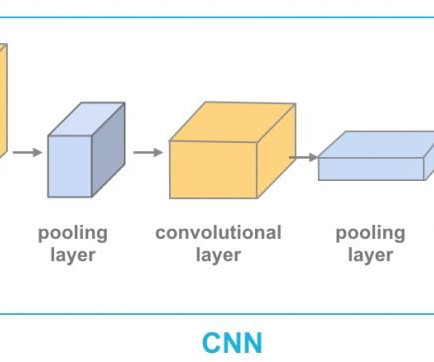

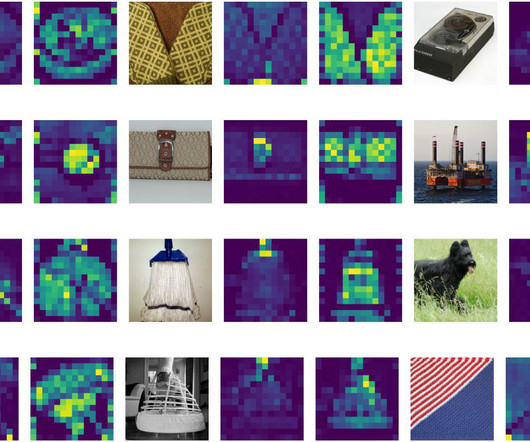

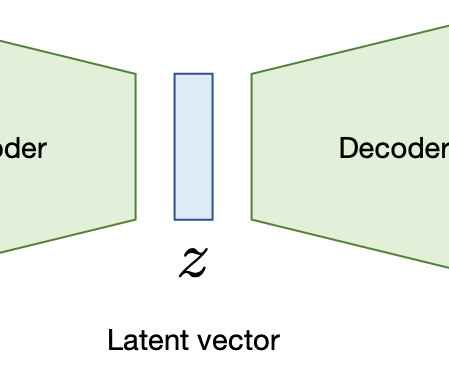

Introduction: With the advancement in deep learning, neural network architectures like recurrent neural networks (RNN and LSTM) and convolutional neural networks (CNN) have shown [...] The post Transfer Learning for NLP: Fine-Tuning BERT for Text Classification appeared first on Analytics Vidhya.
