A Quick Recap of Natural Language Processing

Mlearning.ai

JUNE 7, 2023

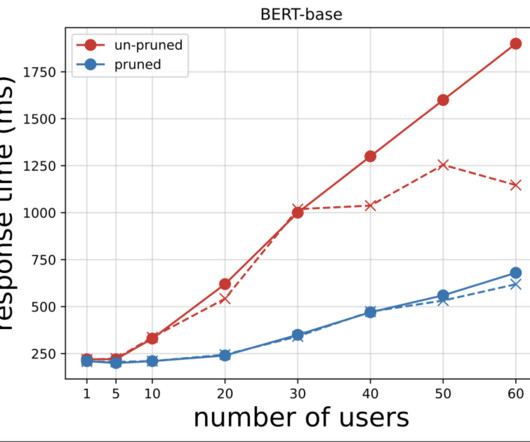

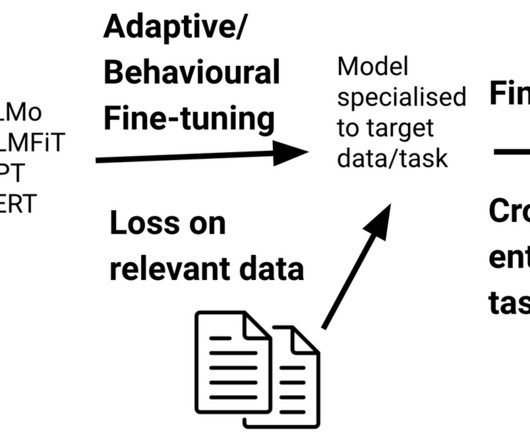

When Google introduced BERT in 2018, I cannot emphasize enough how much it changed the game within the NLP community. BERT is built on the transformer architecture, and transformers' ability to capture long-range dependencies helps them better understand the context of words and achieve superior performance in natural language processing tasks.
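To make the idea of long-range dependencies a bit more concrete, here is a minimal sketch of scaled dot-product self-attention, the mechanism transformers use to let every position look at every other position directly. It is an illustration under simplifying assumptions, not BERT's actual implementation: the toy two-dimensional embeddings are made up, the function names are hypothetical, and the learned query/key/value projections of a real transformer layer are left out.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values.

    Assumption: learned projections are omitted to keep the sketch short.
    Returns the mixed output vectors and the attention weights per position.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score the query against every position, near or far.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all input vectors.
        mixed = [
            sum(w * vec[i] for w, vec in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Made-up embeddings: positions 0 and 4 are similar, the ones between are not.
tokens = [
    [1.0, 0.0],  # position 0
    [0.0, 1.0],  # position 1
    [0.0, 1.0],  # position 2
    [0.0, 1.0],  # position 3
    [0.9, 0.1],  # position 4
]

outputs, weights = self_attention(tokens)
print([round(w, 3) for w in weights[4]])
```

In this toy example the last position gives more weight to the first position than to its immediate neighbours, because the score depends on how similar two vectors are, not on how far apart they sit in the sequence.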
