Hyperparameter Tuning With Bayesian Optimization

Heartbeat

MARCH 21, 2024

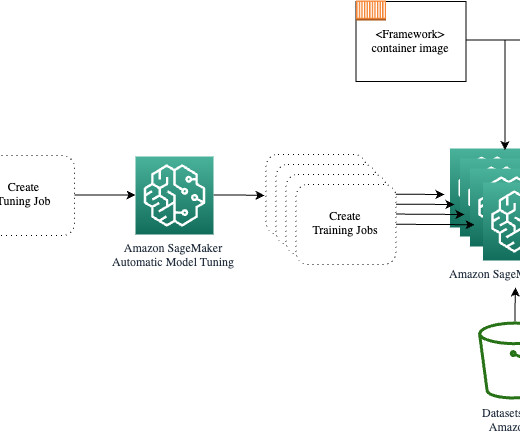

What is Bayesian Optimization used for in hyperparameter tuning? Photo by Abbas Tehrani on Unsplash Hyperparameter tuning, the process of systematically searching for the best combination of hyperparameters that optimize a model's performance, is critical in machine learning model development.
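As a baseline for what a systematic search looks like, here is a minimal Python sketch of the simplest strategy, exhaustive grid search. The objective `validation_score`, the hyperparameter names, and the candidate values are hypothetical stand-ins. In a real project the objective would train a model with the given settings and return a validation metric. Grid search scores every combination. Bayesian optimization instead fits a probabilistic model to the scores observed so far and uses it to choose the next combination to try, which pays off when each evaluation is expensive.

```python
from itertools import product

# Hypothetical stand-in for "train a model and return its validation score".
# This toy surface peaks at learning_rate=0.1, max_depth=4.
def validation_score(learning_rate: float, max_depth: int) -> float:
    return 1.0 - (100 * (learning_rate - 0.1) ** 2 + (max_depth - 4) ** 2)

# Illustrative candidate values for each (hypothetical) hyperparameter.
search_space = {
    "learning_rate": [0.01, 0.05, 0.1, 0.2],
    "max_depth": [2, 3, 4, 5, 6],
}

def grid_search(space, objective):
    """Evaluate every combination in `space` and return the best one."""
    names = list(space)
    best_params, best_score = None, float("-inf")
    # product() yields every combination of candidate values (4 x 5 = 20 here).
    for values in product(*(space[name] for name in names)):
        params = dict(zip(names, values))
        score = objective(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

best_params, best_score = grid_search(search_space, validation_score)
print(best_params, best_score)
```

Even this small space needs 20 objective calls, and the count grows multiplicatively with every hyperparameter added, which is the cost that smarter strategies try to reduce.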
