Building and Customizing GenAI with Databricks: LLMs and Beyond

databricks

JANUARY 22, 2024

Generative AI has opened new worlds of possibilities for businesses and is being emphatically embraced across organizations. According to a recent MIT Tech.

Blog Related Topics

IBM Journey to AI blog

JANUARY 11, 2024

To achieve business agility and keep up with competitive challenges and customer demand, companies must absolutely modernize these applications. While many cool possibilities are emerging in this space, there’s a nagging “hallucination factor” of LLMs when applied to critical business workflows.

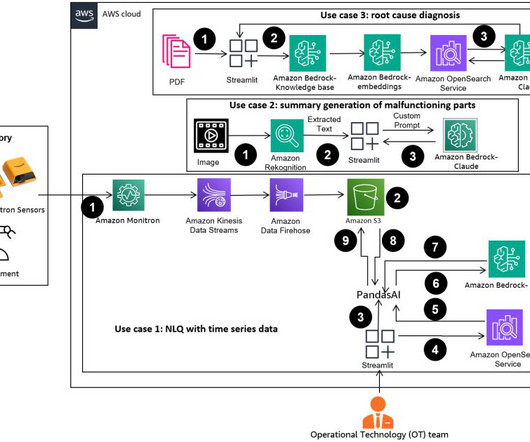

AWS Machine Learning Blog

MARCH 14, 2024

Generative artificial intelligence (generative AI) has enabled new possibilities for building intelligent systems. Recent improvements in generative AI-based large language models (LLMs) have enabled their use in a variety of applications surrounding information retrieval. The SQL is run by Amazon Athena to return the relevant data.

AWS Machine Learning Blog

MARCH 19, 2024

Real-time data is critical for applications like predictive maintenance and anomaly detection, yet developing custom ML models for each industrial use case with such time series data demands considerable time and resources from data scientists, hindering widespread adoption.

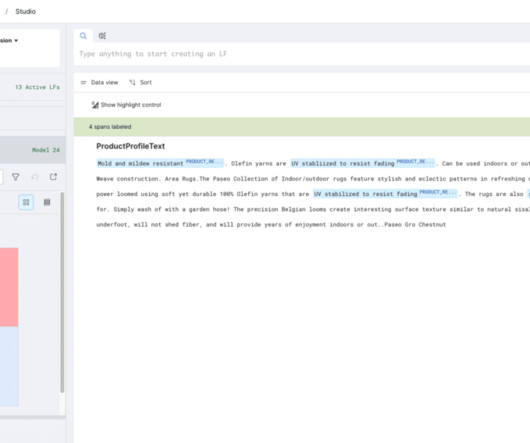

Snorkel AI

AUGUST 9, 2023

Large Language Models (LLMs) have grown increasingly capable in recent years across a wide variety of tasks. Building upon these foundations to create production-quality applications requires further development. In this blog post, we’ll look specifically at the task of extracting “product resistances” for rugs.

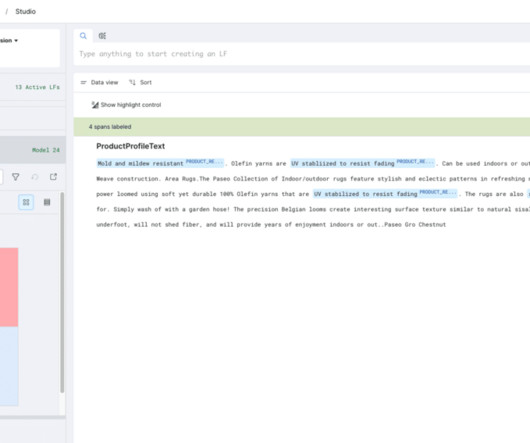

AWS Machine Learning Blog

DECEMBER 6, 2023

Conversational AI has come a long way in recent years thanks to the rapid developments in generative AI, especially the performance improvements of large language models (LLMs) introduced by training techniques such as instruction fine-tuning and reinforcement learning from human feedback.
