Automate caption creation and search for images at enterprise scale using generative AI and Amazon Kendra

AWS Machine Learning Blog

AUGUST 2, 2023

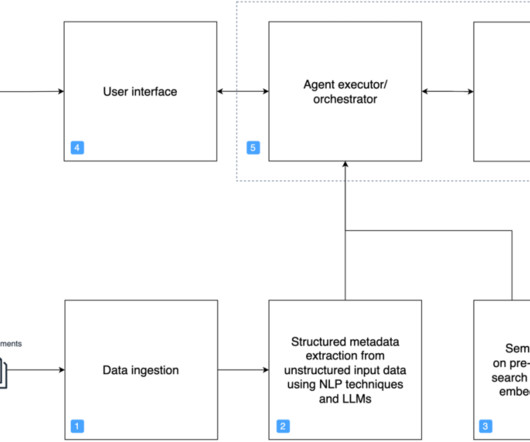

Amazon Kendra supports a variety of document formats, such as Microsoft Word, PDF, and plain text, from various data sources. To make images searchable as well, the Amazon Kendra index can be enriched during document ingestion with metadata generated for each image, such as a caption. Users can then search the images without anyone tagging them by hand.
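The excerpt does not show the ingestion code itself, so here is a minimal sketch of one possible route, assuming a caption has already been generated for each image by some model. It wraps each caption as a Kendra document in the shape accepted by the `BatchPutDocument` API, with the caption as the searchable text body and the image URL stored in the built-in `_source_uri` attribute. The helper names, the `image_caption` custom attribute (which would have to be declared in the index first), and the batch size of 10 are illustrative assumptions, not taken from the post. Other routes, such as a data source connector combined with Custom Document Enrichment, are also possible.

```python
import hashlib
from typing import Any, Iterator


def build_image_document(image_url: str, caption: str) -> dict[str, Any]:
    """Wrap a generated caption as a Kendra document (hypothetical helper).

    The caption is the text Kendra indexes; the image URL is kept in the
    reserved _source_uri attribute so results can link back to the image.
    "image_caption" is an assumed custom attribute that must exist in the
    index before ingestion.
    """
    # A stable ID derived from the URL means re-ingesting the same image
    # overwrites its earlier entry instead of adding a duplicate.
    doc_id = hashlib.sha256(image_url.encode("utf-8")).hexdigest()
    return {
        "Id": doc_id,
        "Title": image_url.rsplit("/", 1)[-1],
        "ContentType": "PLAIN_TEXT",
        "Blob": caption.encode("utf-8"),
        "Attributes": [
            {"Key": "_source_uri", "Value": {"StringValue": image_url}},
            {"Key": "image_caption", "Value": {"StringValue": caption}},
        ],
    }


def chunk_documents(
    docs: list[dict[str, Any]], batch_size: int = 10
) -> Iterator[list[dict[str, Any]]]:
    """Split documents into batches; 10 is an assumed per-call limit,
    verify it against the current Amazon Kendra quotas."""
    for start in range(0, len(docs), batch_size):
        yield docs[start:start + batch_size]


if __name__ == "__main__":
    doc = build_image_document(
        "https://example.com/images/cat.png", "a cat sitting on a sofa"
    )
    print(doc["Title"], doc["Blob"])
    # With AWS credentials configured, each batch could be sent with:
    #   boto3.client("kendra").batch_put_document(IndexId=INDEX_ID, Documents=batch)
```

Storing the caption both as the document body and as an attribute is a design choice: the body makes it full-text searchable, while the attribute can be shown in results or used for filtering if the index is configured for it.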
