Unleashing the potential: 7 ways to optimize infrastructure for AI workloads

IBM Journey to AI blog

MARCH 21, 2024

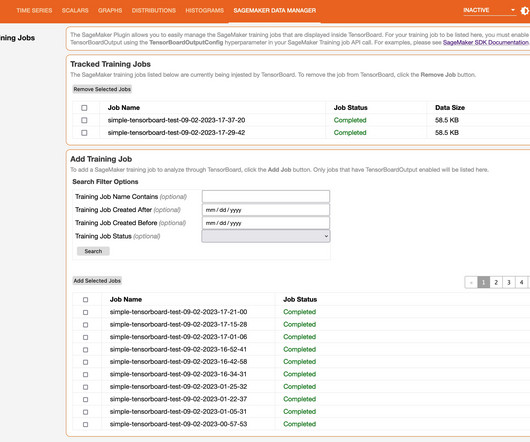

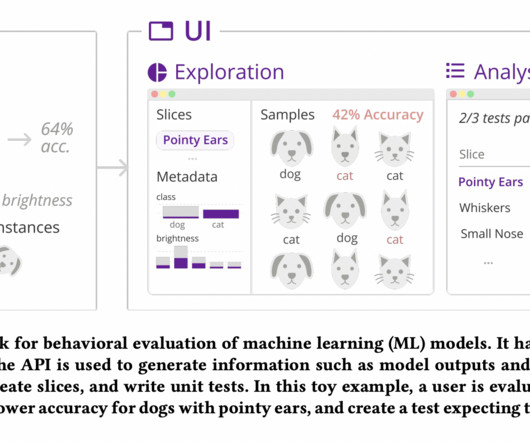

In this blog, we’ll explore seven key strategies to optimize infrastructure for AI workloads, empowering organizations to harness the full potential of AI technologies.

High-performance computing systems

Investing in high-performance computing systems tailored for AI accelerates model training and inference tasks.
