Explainability in AI and Machine Learning Systems: An Overview

Heartbeat

SEPTEMBER 13, 2023

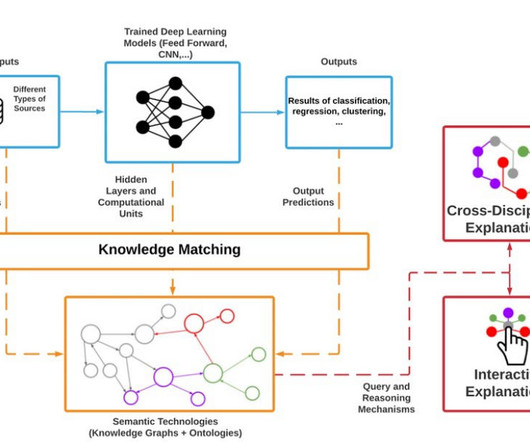

Source: ResearchGate

What is Explainability?

Explainability refers to the ability to understand and evaluate the decisions and reasoning behind the predictions of AI models (Castillo, 2021). Explainability techniques aim to reveal the inner workings of AI systems by offering insights into how they arrive at their predictions.
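To make "offering insights into predictions" concrete, here is a minimal sketch of one simple, model-agnostic technique: occlusion (feature-ablation) attribution. It treats the model as a black box, replaces one input feature at a time with a baseline value, and records how much the prediction changes. The function `occlusion_attributions`, the toy linear "credit score" model, its weights, and its feature names are all hypothetical illustrations, not something described in the source. The all-zero baseline is also an assumption; in practice the choice of baseline strongly affects the resulting scores.

```python
from typing import Callable, Sequence


def occlusion_attributions(
    predict: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Attribute one prediction to each input feature by occlusion.

    For feature i, x[i] is replaced with baseline[i] and the drop in the
    prediction is recorded. A positive score means the feature pushed the
    prediction up for this particular input; a negative score means it
    pushed the prediction down.
    """
    if len(x) != len(baseline):
        raise ValueError("x and baseline must have the same length")
    full = predict(x)
    scores = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline[i]  # "remove" feature i by swapping in its baseline value
        scores.append(full - predict(occluded))
    return scores


# Hypothetical toy model: a hand-written linear score over three features
# (income, debt_ratio, years_employed). Not taken from the article.
WEIGHTS = [0.5, -2.0, 1.0]
BIAS = 1.0


def toy_model(features: Sequence[float]) -> float:
    return BIAS + sum(w * f for w, f in zip(WEIGHTS, features))


if __name__ == "__main__":
    applicant = [4.0, 0.5, 3.0]
    baseline = [0.0, 0.0, 0.0]
    print(toy_model(applicant))  # 5.0
    print(occlusion_attributions(toy_model, applicant, baseline))  # [2.0, -1.0, 3.0]
```

Reading the output: years employed (+3.0) and income (+2.0) raised this applicant's score, while the debt ratio (-1.0) lowered it. For a linear model like this one, the occlusion scores sum exactly to the difference between the prediction and the baseline prediction (5.0 - 1.0 = 4.0). For nonlinear models with interacting features that additivity no longer holds, which is one reason more elaborate attribution methods exist.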
