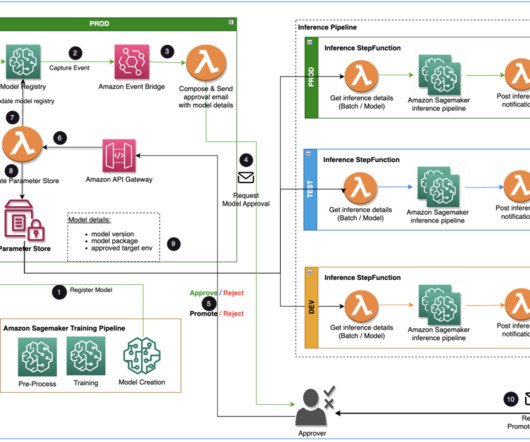

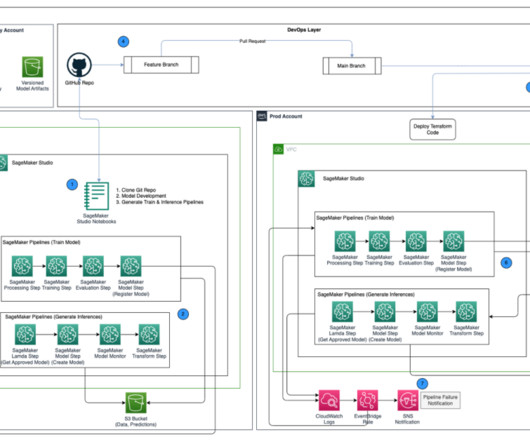

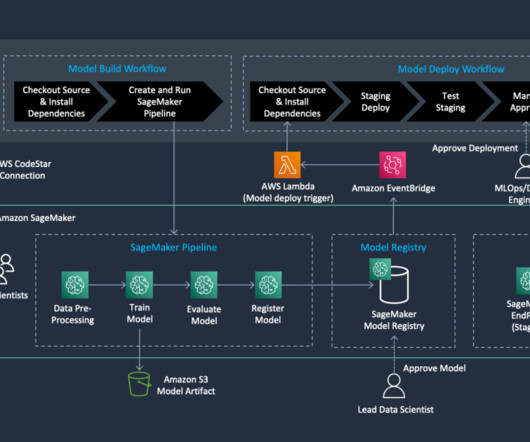

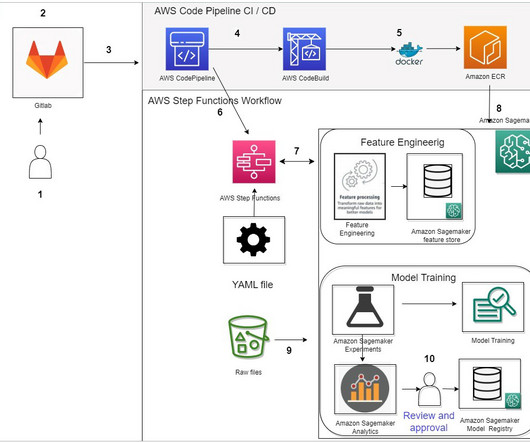

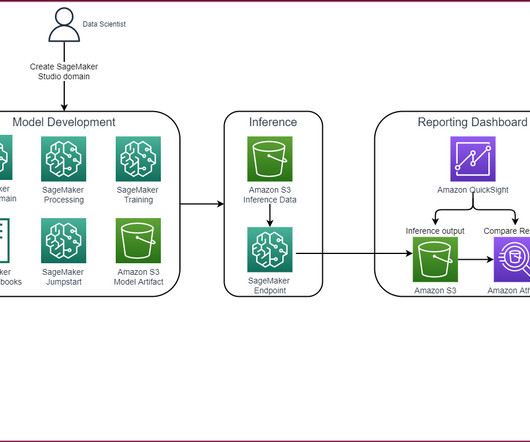

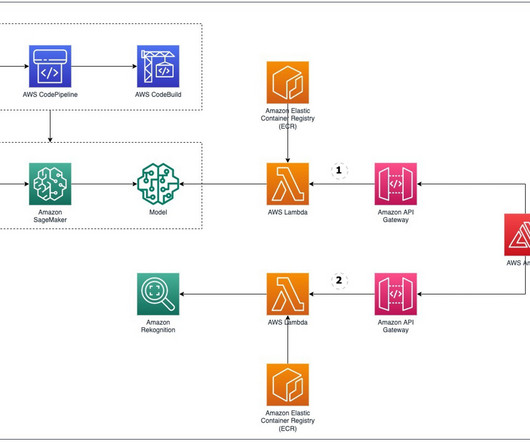

Build an Amazon SageMaker Model Registry approval and promotion workflow with human intervention

AWS Machine Learning Blog

JANUARY 10, 2024

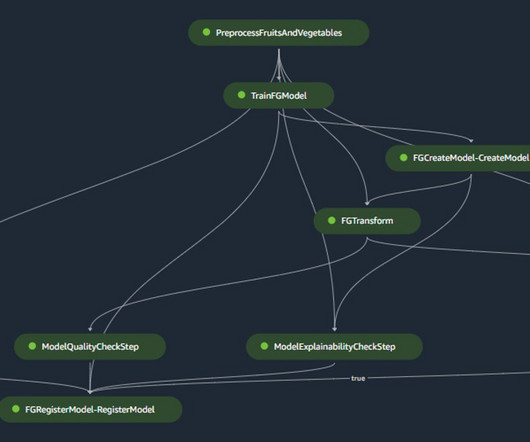

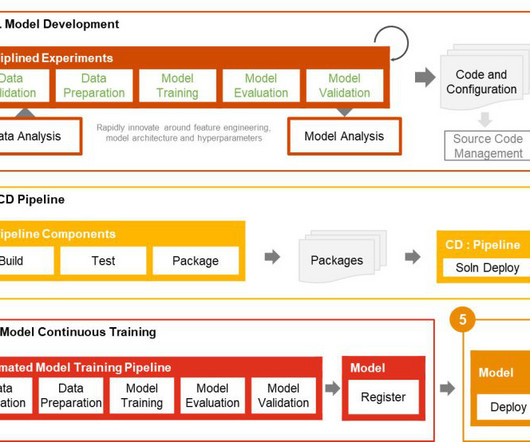

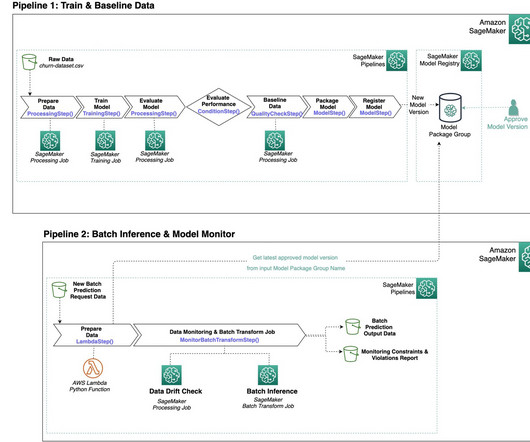

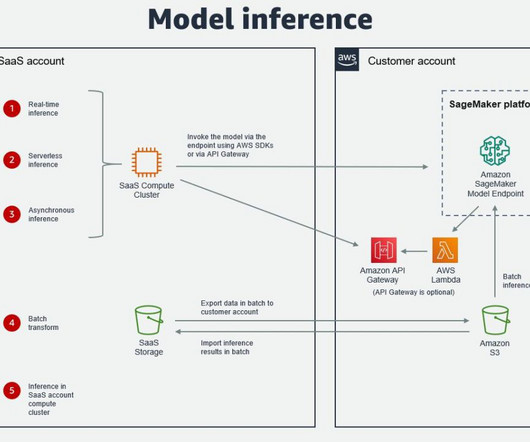

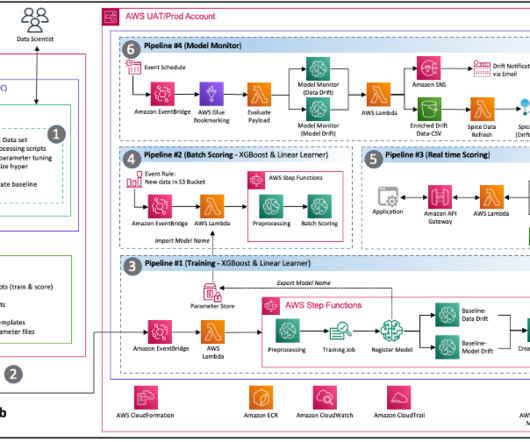

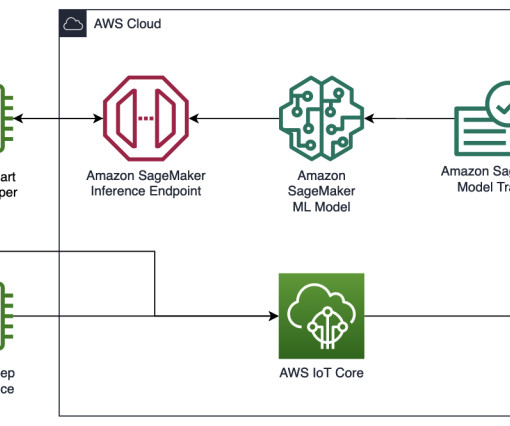

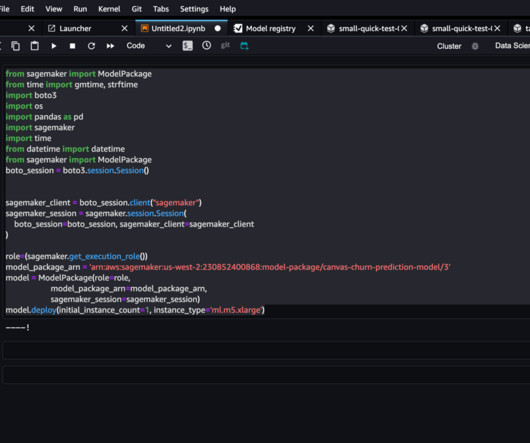

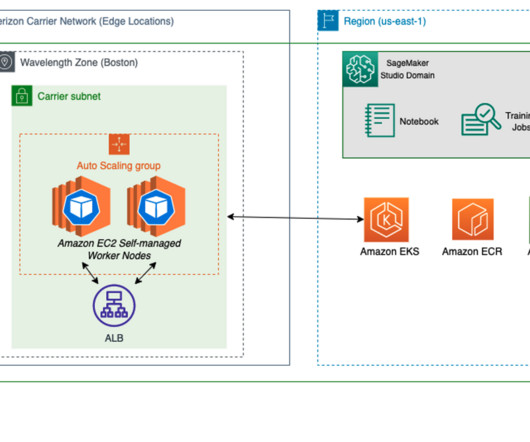

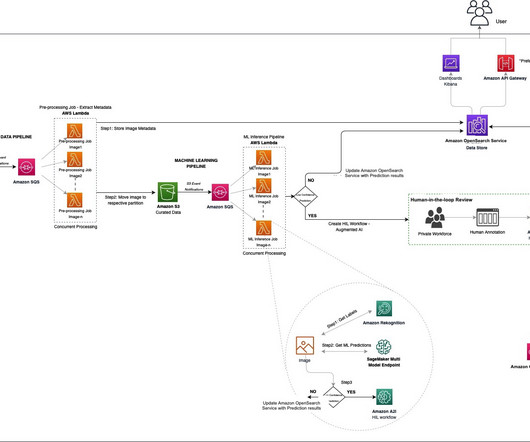

ML Engineer at Tiger Analytics. The large machine learning (ML) model development lifecycle requires a scalable model release process similar to that of software development. Model developers often work together in developing ML models and require a robust MLOps platform to work in.

Let's personalize your content