How Axfood enables accelerated machine learning throughout the organization using Amazon SageMaker

AWS Machine Learning Blog

FEBRUARY 27, 2024

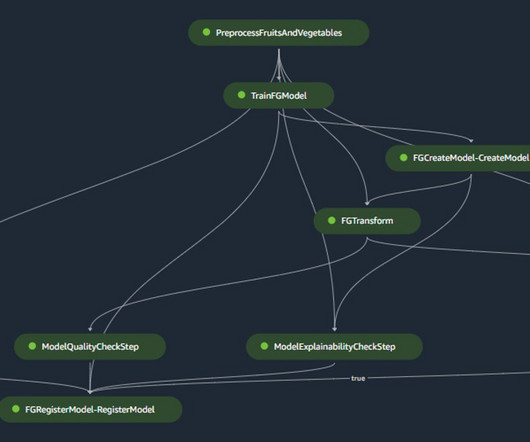

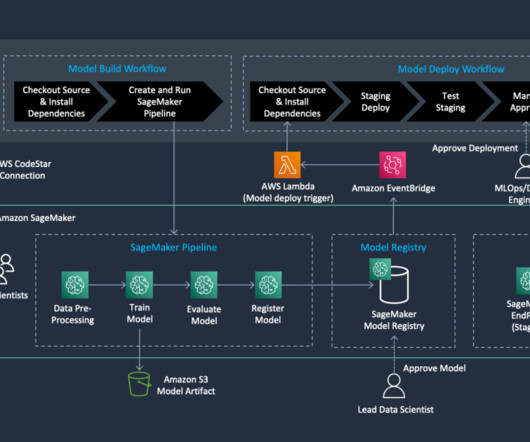

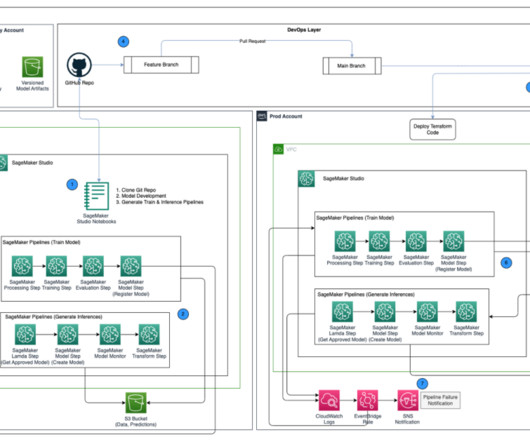

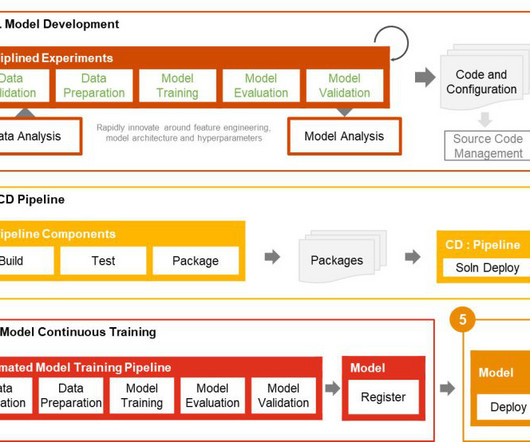

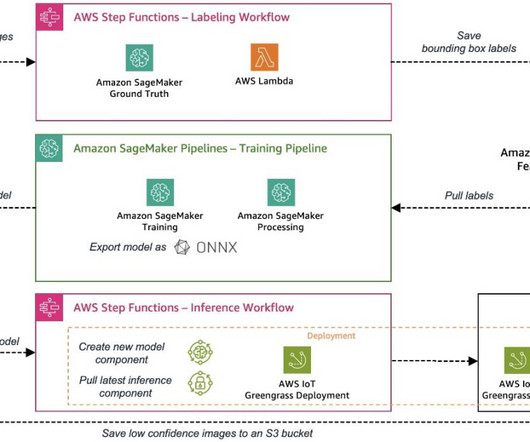

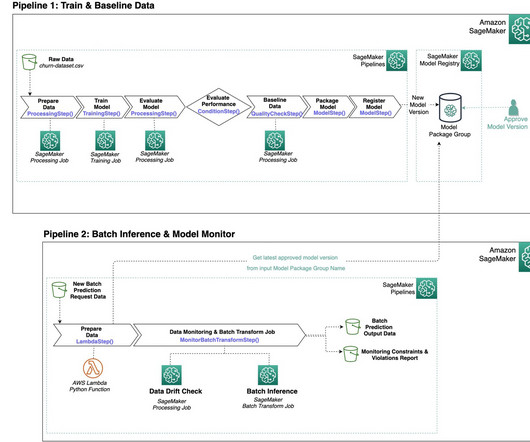

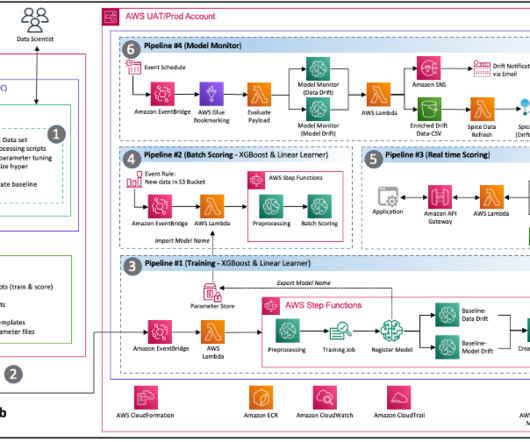

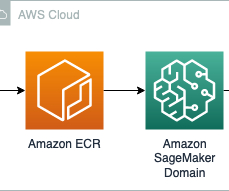

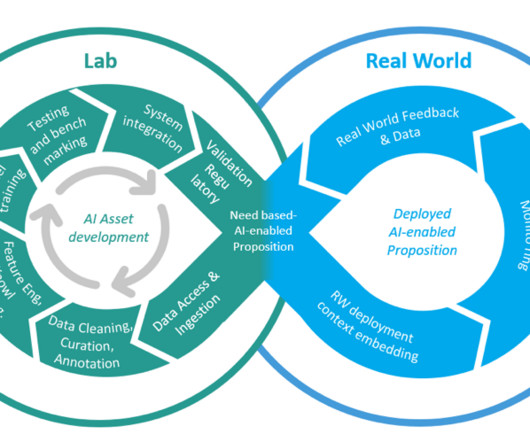

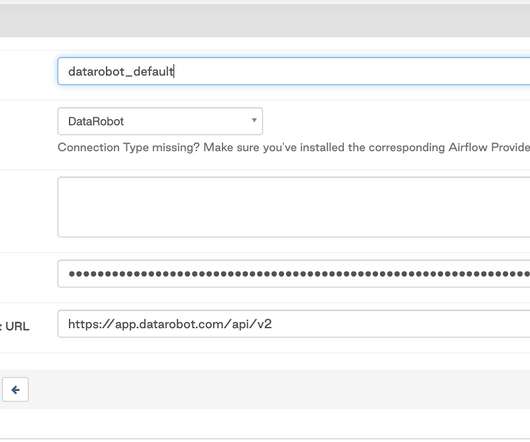

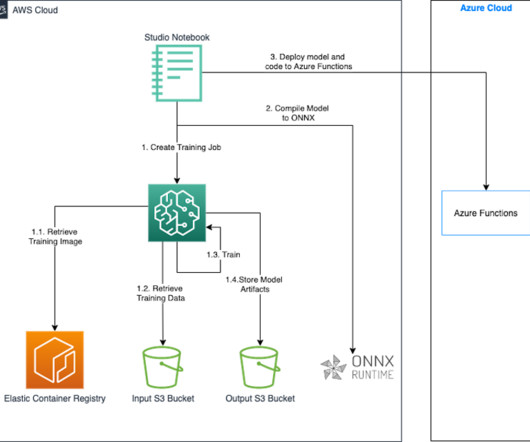

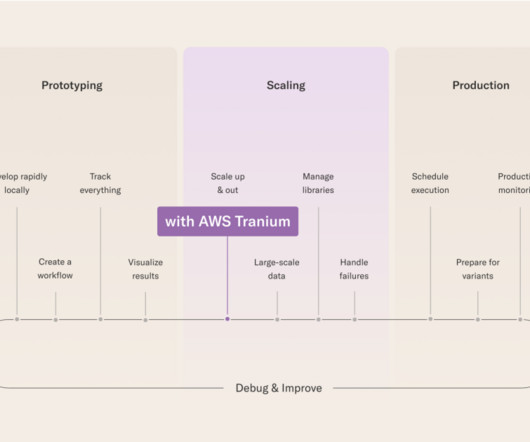

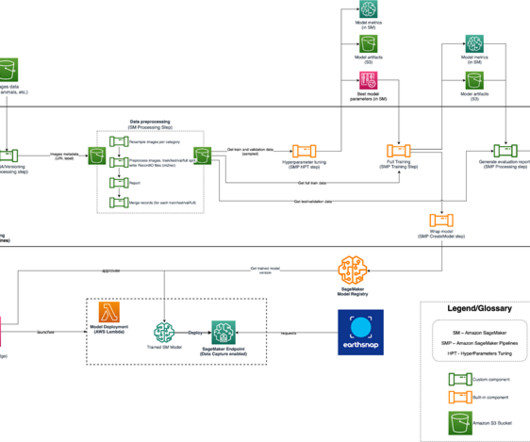

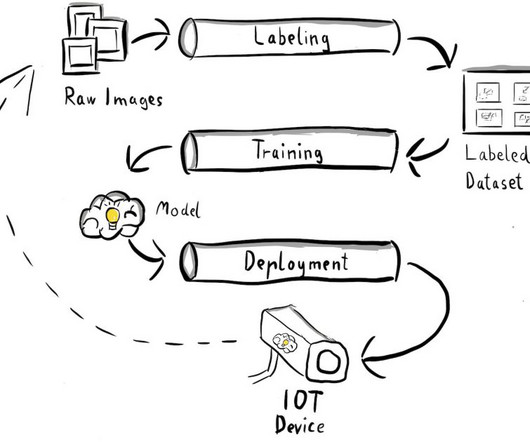

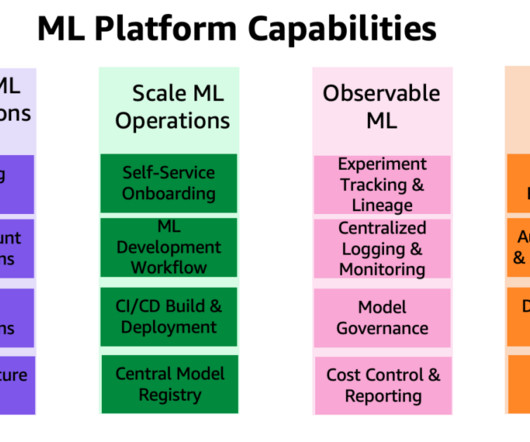

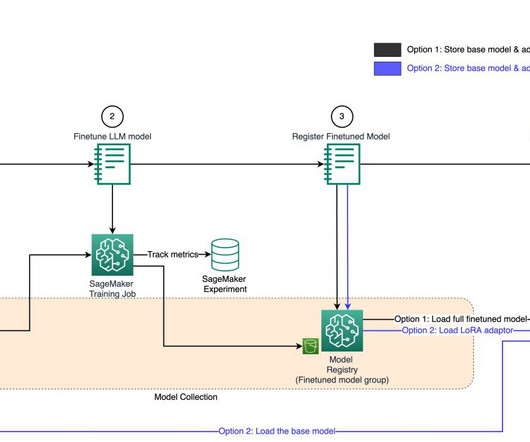

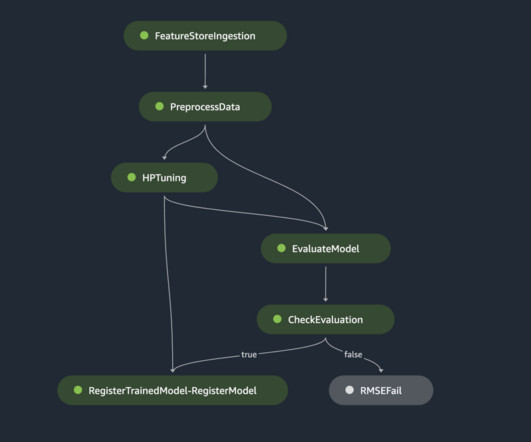

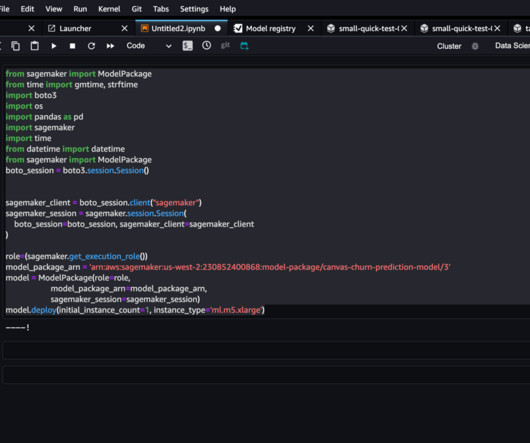

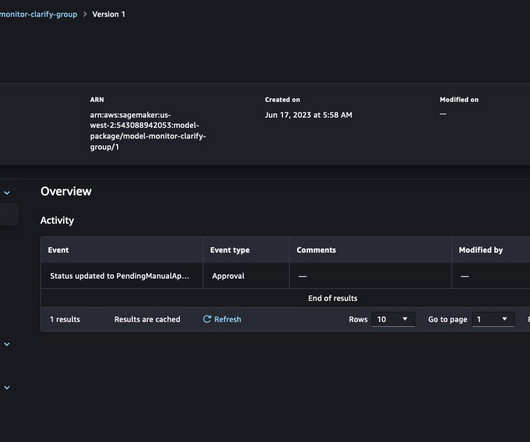

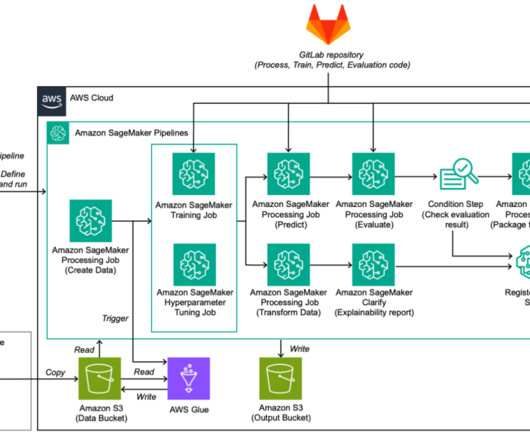

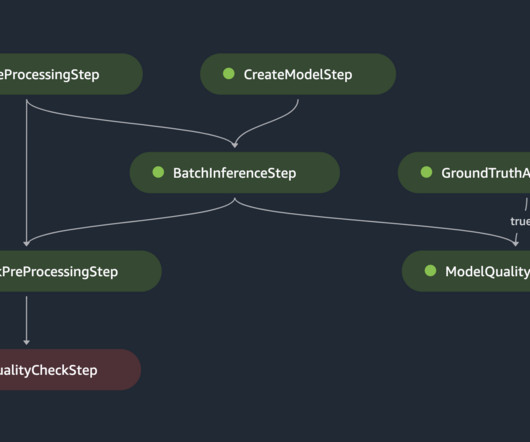

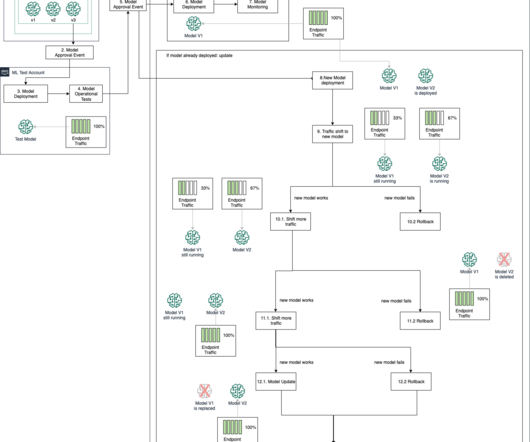

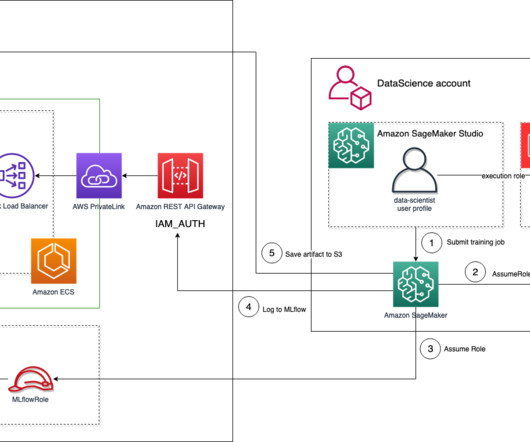

Axfood has been using Amazon SageMaker to get value from its data with ML and has had models in production for many years. Lately, both the sophistication and the sheer number of models in production have been growing rapidly. We therefore decided to make a joint effort to build a prototype based on MLOps best practices.