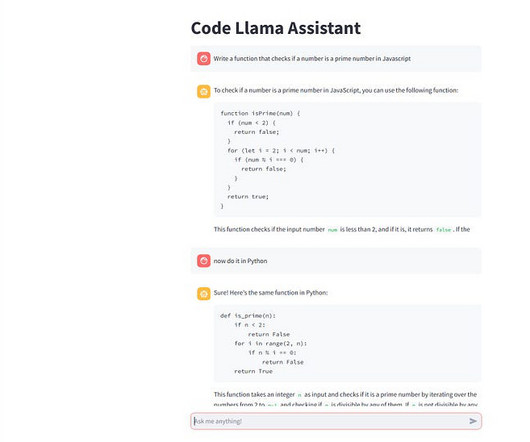

How to Build Your Own LLM Coding Assistant With Code Llama

Towards AI

FEBRUARY 13, 2024

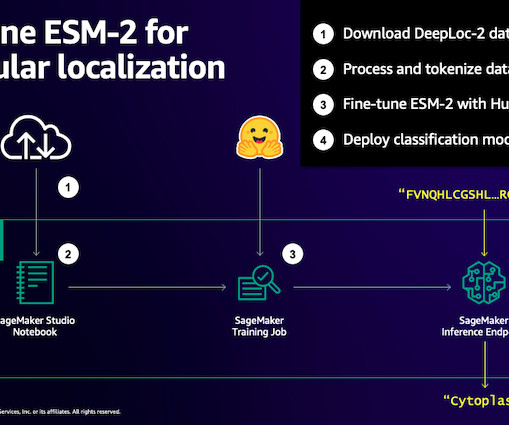

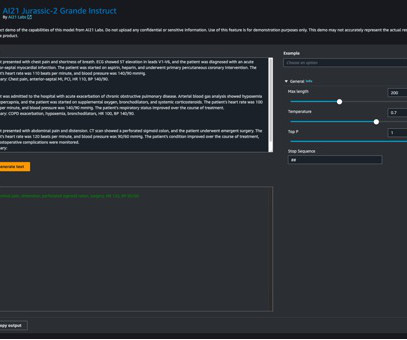

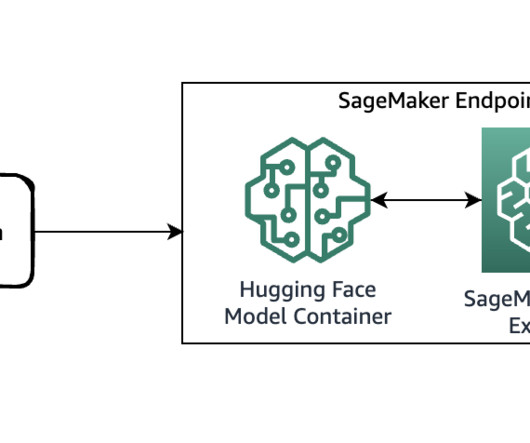

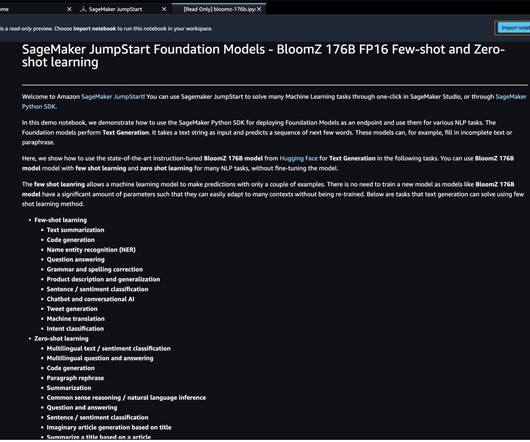

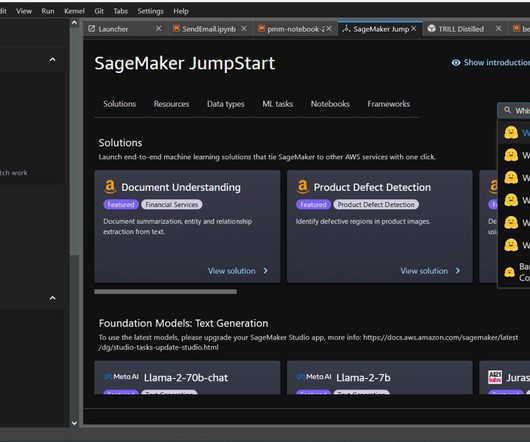

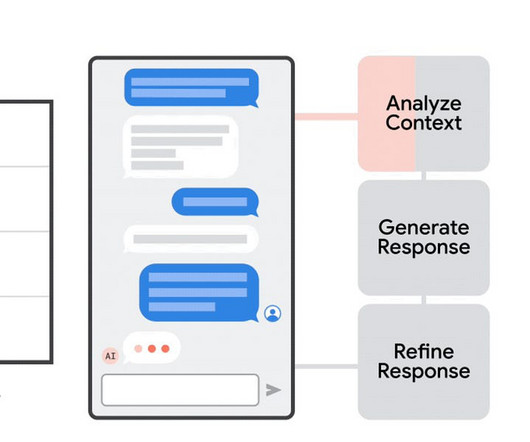

You can ask the chatbot questions, and it will answer in natural language and with code in multiple programming languages. We will use the Hugging Face Transformers library to implement the LLM and Streamlit for the chatbot front end.

Large language models (LLMs) are very good at text generation. Not this Llama.
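As a rough illustration of the LLM side, here is a minimal sketch assuming the `codellama/CodeLlama-7b-Instruct-hf` checkpoint from the Hugging Face Hub and a simplified Llama 2-style `[INST] ... [/INST]` prompt. The helper names (`build_prompt`, `load_generator`, `generate_reply`) and the history format are hypothetical, not taken from the article. The real Code Llama chat template also places BOS/EOS tokens between turns, so in practice `tokenizer.apply_chat_template` is the safer way to format prompts.

```python
from typing import Any, Callable, Dict, List

# Assumed checkpoint: the Code Llama - Instruct 7B model on the Hugging Face Hub.
MODEL_ID = "codellama/CodeLlama-7b-Instruct-hf"


def build_prompt(history: List[Dict[str, str]], user_message: str) -> str:
    """Format earlier turns plus the new question in a simplified
    [INST] ... [/INST] layout (BOS/EOS tokens between turns are omitted)."""
    parts = []
    for turn in history:
        # A completed turn: the user's question wrapped in [INST] tags, then the answer.
        parts.append(f"[INST] {turn['user'].strip()} [/INST] {turn['assistant'].strip()}")
    # The new question is left open so the model writes its answer next.
    parts.append(f"[INST] {user_message.strip()} [/INST]")
    return " ".join(parts)


def load_generator(model_id: str = MODEL_ID) -> Callable[..., Any]:
    """Create a Transformers text-generation pipeline (downloads the model)."""
    # Imported lazily so the prompt helpers work without transformers installed.
    from transformers import pipeline

    return pipeline("text-generation", model=model_id)


def generate_reply(
    generator: Callable[..., List[Dict[str, str]]],
    prompt: str,
    max_new_tokens: int = 256,
) -> str:
    """Run the prompt through the pipeline and return only the new text."""
    # return_full_text=False drops the prompt from the pipeline's output.
    outputs = generator(prompt, max_new_tokens=max_new_tokens, return_full_text=False)
    return outputs[0]["generated_text"].strip()
```

For the Streamlit front end, one option is to wrap `load_generator` with `@st.cache_resource` so the model loads only once per server process. The question can then be read with `st.chat_input`, and each turn rendered with `st.chat_message`. Keeping the prompt formatting separate from the model call also makes it easy to test without downloading the model.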
