Machine learning with decentralized training data using federated learning on Amazon SageMaker

AWS Machine Learning Blog

AUGUST 22, 2023

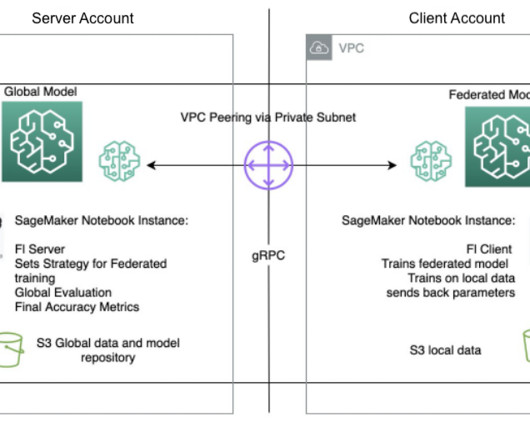

When choosing an FL framework, we usually consider which model categories, ML frameworks, and devices or operating systems it supports. The Flower clients receive instructions (messages) as raw byte arrays over the network. Instances in either VPC can communicate with each other as if they were within the same network.
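To make the idea of messages as raw byte arrays more concrete, the following is a minimal sketch of how a flat list of model weights could be turned into bytes and recovered on the other side. It uses only the Python standard library. The function names `weights_to_bytes` and `bytes_to_weights` and the byte layout (a 4-byte count followed by 64-bit floats) are assumptions made for illustration; they are not Flower's actual wire format or API.

```python
import struct
from typing import List


def weights_to_bytes(weights: List[float]) -> bytes:
    """Pack weights as a 4-byte big-endian count followed by float64 values.

    Hypothetical layout for illustration only, not Flower's wire format.
    """
    return struct.pack(f">I{len(weights)}d", len(weights), *weights)


def bytes_to_weights(payload: bytes) -> List[float]:
    """Recover the list of weights from a payload built by weights_to_bytes."""
    # Read the element count from the first 4 bytes.
    (count,) = struct.unpack_from(">I", payload, 0)
    # Read `count` float64 values starting right after the count field.
    return list(struct.unpack_from(f">{count}d", payload, 4))


if __name__ == "__main__":
    original = [0.5, -1.25, 3.0]
    payload = weights_to_bytes(original)   # what would travel over the network
    restored = bytes_to_weights(payload)   # what the receiver reconstructs
    print(len(payload), restored == original)
```

A real framework would typically serialize multi-dimensional tensors and attach metadata such as shapes and data types; this sketch only shows the round trip between in-memory values and a byte array.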
