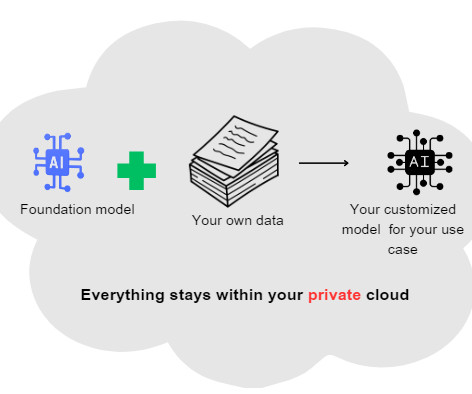

Building Domain-Specific Custom LLM Models: Harnessing the Power of Open Source Foundation Models

Towards AI

MAY 20, 2023

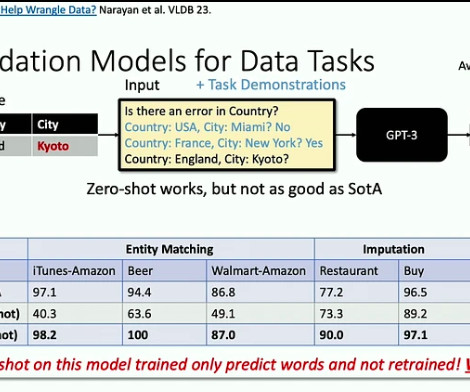

Challenges of building custom LLMs

Building custom Large Language Models (LLMs) presents organizations with an array of challenges that can be broadly categorized as data, technical, ethical, and resource-related issues. Among the data issues, ensuring quality during collection is important.
