Using Comet for Interpretability and Explainability

Heartbeat

SEPTEMBER 7, 2023

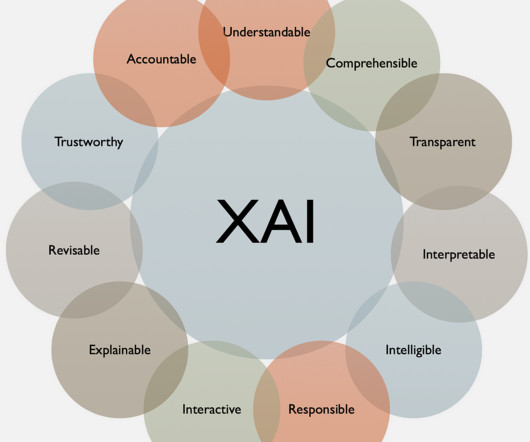

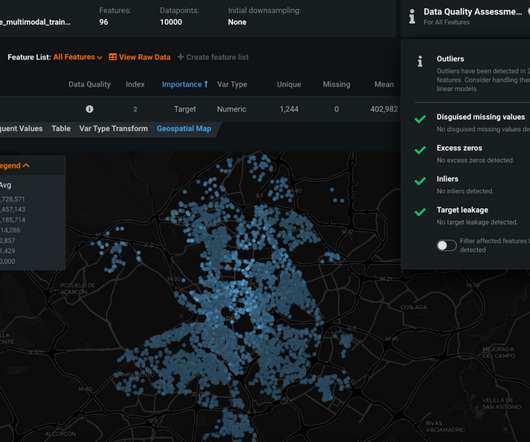

In the ever-evolving landscape of machine learning and artificial intelligence, understanding and explaining the decisions made by models have become paramount. Enter Comet, a platform that streamlines the model development process and places a strong emphasis on model interpretability and explainability.

Why Does It Matter?
