Deciphering Transformer Language Models: Advances in Interpretability Research

Marktechpost

MAY 5, 2024

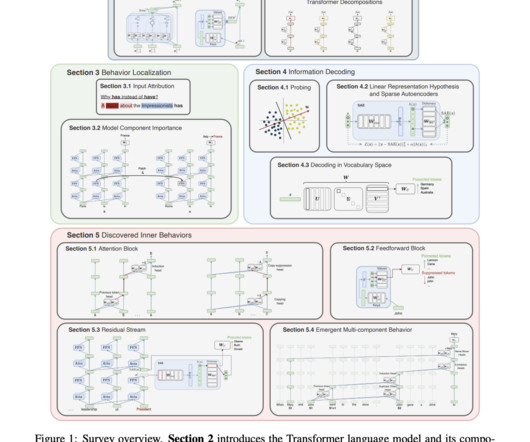

Existing surveys cover a range of techniques used in explainable AI analyses and their applications in NLP. The LM interpretability approaches discussed are categorized along two dimensions: localizing the inputs or model components responsible for a prediction, and decoding the information stored in learned representations.
