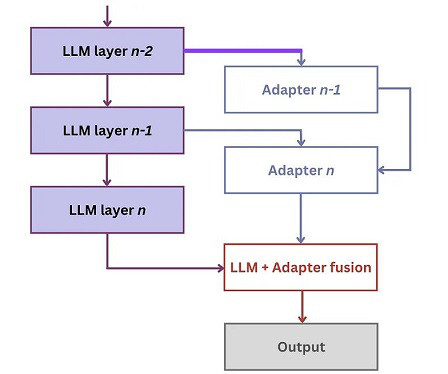

Modular Deep Learning

Sebastian Ruder

FEBRUARY 23, 2023

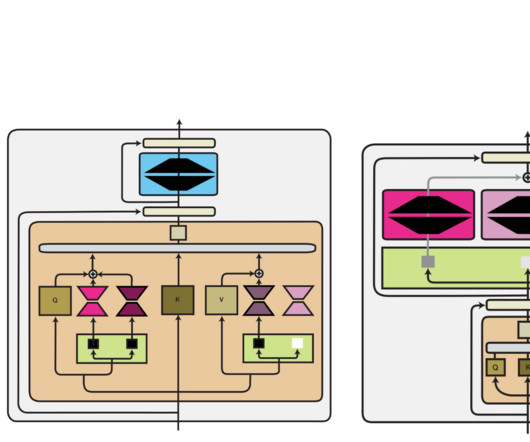

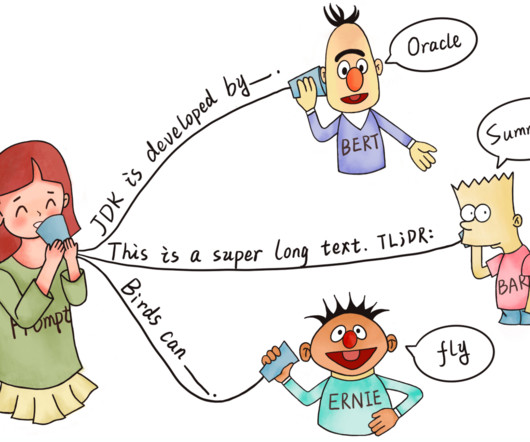

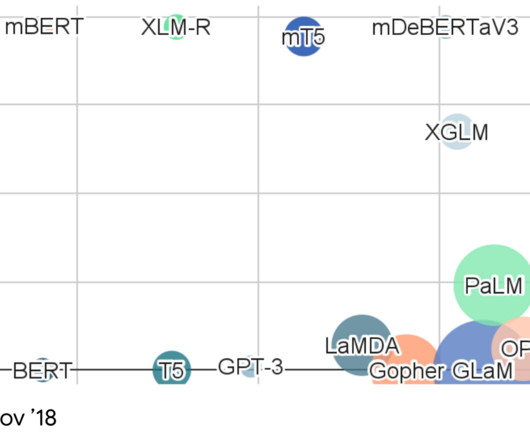

This post gives a brief overview of modularity in deep learning. For an in-depth overview of modularity, check out our survey on Modular Deep Learning. For modular fine-tuning for NLP, check out our EMNLP 2022 tutorial.

Fuelled by scaling laws, state-of-the-art models in machine learning have been growing larger and larger.
