Data Integration: Strategies for Efficient ETL Processes

Analytics Vidhya

JUNE 3, 2024

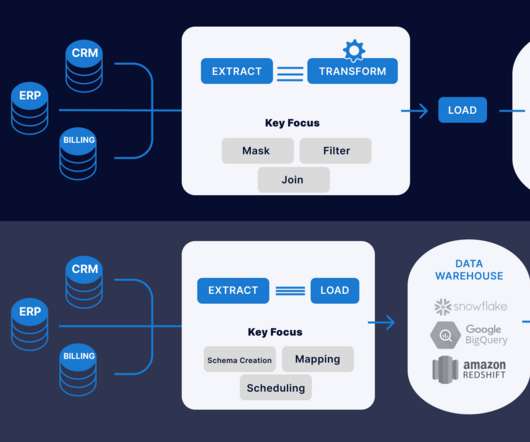

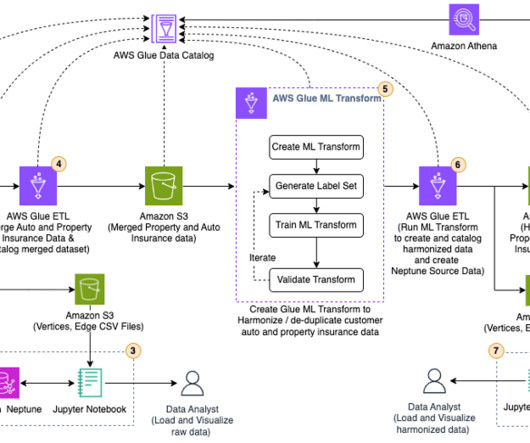

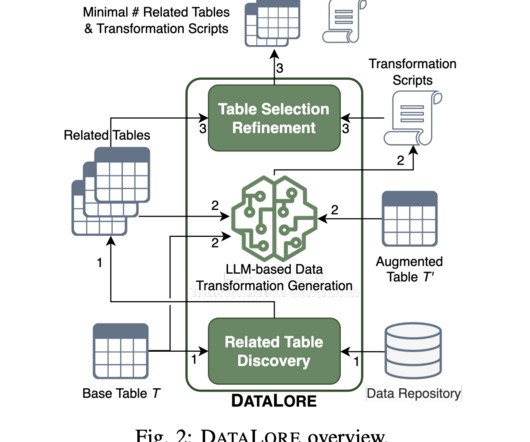

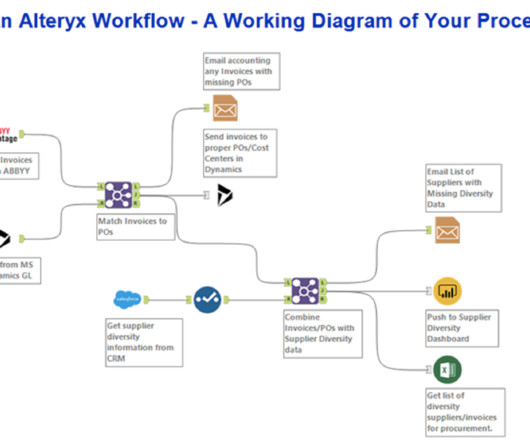

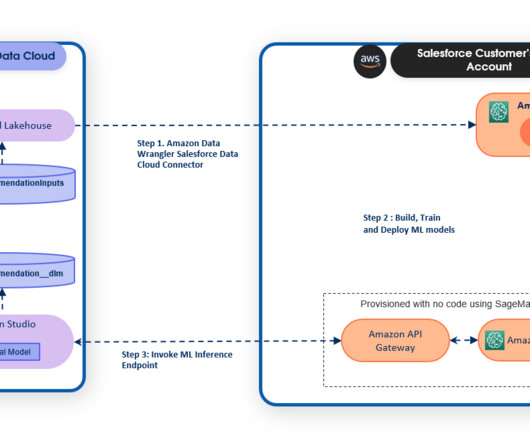

Data integration depends on a crucial process called Extract, Transform, Load (ETL). It extracts data from multiple sources, transforms it into a consistent format, and loads it into a target system for analysis.
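To make the three stages concrete, here is a minimal sketch using only the Python standard library. Everything in it is a hypothetical illustration rather than a method from the article. The two inline sources (`CSV_SOURCE`, `JSON_SOURCE`), their field names, the `orders` table, and the assumption that the JSON source writes dates as day/month/year are all invented for this example. An in-memory SQLite database stands in for the target system.

```python
import csv
import io
import json
import sqlite3
from datetime import datetime

# Hypothetical sources: the same kind of record, but with different field
# names, types, and date formats.
CSV_SOURCE = """order_id,customer,amount,date
1,Alice,19.99,2024-06-01
2,Bob,5.00,2024-06-02
"""

JSON_SOURCE = """[
  {"id": 3, "customerName": "Carol", "total": "42.50", "orderDate": "03/06/2024"}
]"""


def extract():
    """Extract: read raw records from each source without modifying them."""
    csv_rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    json_rows = json.loads(JSON_SOURCE)
    return csv_rows, json_rows


def transform(csv_rows, json_rows):
    """Transform: map both shapes onto one schema with consistent types."""
    records = []
    for row in csv_rows:
        records.append((
            int(row["order_id"]),
            row["customer"].strip(),
            round(float(row["amount"]), 2),
            row["date"],  # already ISO 8601 (YYYY-MM-DD)
        ))
    for row in json_rows:
        # Assumption: this source writes dates as day/month/year.
        iso_date = datetime.strptime(row["orderDate"], "%d/%m/%Y").date().isoformat()
        records.append((
            int(row["id"]),
            row["customerName"].strip(),
            round(float(row["total"]), 2),
            iso_date,
        ))
    return records


def load(records, conn):
    """Load: write the unified records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, order_date TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", records)
    conn.commit()


def run_etl(conn):
    """Run the three stages in order against the given connection."""
    csv_rows, json_rows = extract()
    load(transform(csv_rows, json_rows), conn)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_etl(conn)
    for row in conn.execute("SELECT * FROM orders ORDER BY order_id"):
        print(row)
```

Keeping each stage in its own function means a source or target can be swapped without touching the other two stages. In a real pipeline, the transform step is also where validation and deduplication would typically go.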

Let's personalize your content