How Axfood enables accelerated machine learning throughout the organization using Amazon SageMaker

AWS Machine Learning Blog

FEBRUARY 27, 2024

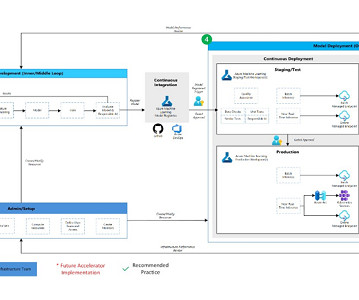

In this post, we share how Axfood, a large Swedish food retailer, improved operations and scalability of their existing artificial intelligence (AI) and machine learning (ML) operations by prototyping in close collaboration with AWS experts and using Amazon SageMaker. This is a guest post written by Axfood AB.

Let's personalize your content