LLMs Exposed: Are They Just Cheating on Math Tests?

Analytics Vidhya

MAY 5, 2024

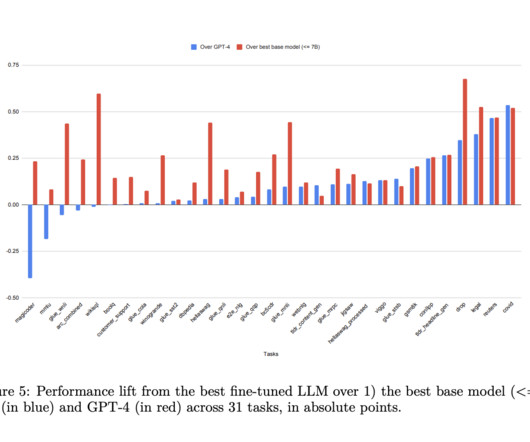

Introduction

Large Language Models (LLMs) are advanced natural language processing models that have achieved remarkable success on various benchmarks for mathematical reasoning. These models are designed to process and understand human language, enabling them to perform tasks such as question answering, language translation, and text generation. LLMs are typically trained on large datasets scraped from […]
