NLP Rise with Transformer Models | A Comprehensive Analysis of T5, BERT, and GPT

Unite.AI

NOVEMBER 8, 2023

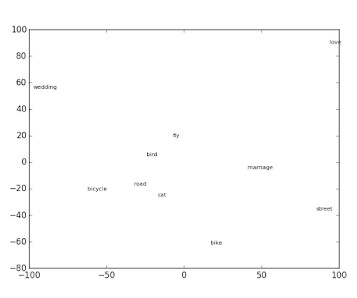

Early NLP Techniques: The Foundations Before Transformers

Word Embeddings: From One-Hot to Word2Vec

In traditional NLP approaches, the representation of words was often literal and lacked any form of semantic or syntactic understanding. The introduction of word embeddings, most notably Word2Vec, was a pivotal moment in NLP.
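To make the contrast concrete, here is a minimal sketch comparing one-hot vectors with dense vectors. The three-word vocabulary and the numbers in `toy_embeddings` are hand-picked assumptions for illustration; they are not output from a trained Word2Vec model. The helper names `one_hot` and `cosine` are also hypothetical.

```python
import math

def one_hot(word, vocab):
    """Return a one-hot vector: 1.0 at the word's position, 0.0 elsewhere."""
    return [1.0 if w == word else 0.0 for w in vocab]

def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy vocabulary.
vocab = ["king", "queen", "apple"]

# Hand-picked dense vectors (an assumption, NOT trained embeddings).
# Related words are deliberately given nearby values.
toy_embeddings = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}

# One-hot vectors of two different words never overlap,
# so their similarity carries no information about meaning.
one_hot_sim = cosine(one_hot("king", vocab), one_hot("queen", vocab))

# Dense vectors can express graded similarity between words.
dense_sim_related = cosine(toy_embeddings["king"], toy_embeddings["queen"])
dense_sim_unrelated = cosine(toy_embeddings["king"], toy_embeddings["apple"])

print(f"one-hot  king/queen: {one_hot_sim:.2f}")
print(f"dense    king/queen: {dense_sim_related:.2f}")
print(f"dense    king/apple: {dense_sim_unrelated:.2f}")
```

In this sketch, any two distinct one-hot vectors are orthogonal, so every pair of different words looks equally unrelated. Dense vectors leave room for "king" to sit closer to "queen" than to "apple", which is the property that learned embeddings such as Word2Vec aim to capture from data.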
