RAFT – A Fine-Tuning and RAG Approach to Domain-Specific Question Answering

Unite.AI

MARCH 29, 2024

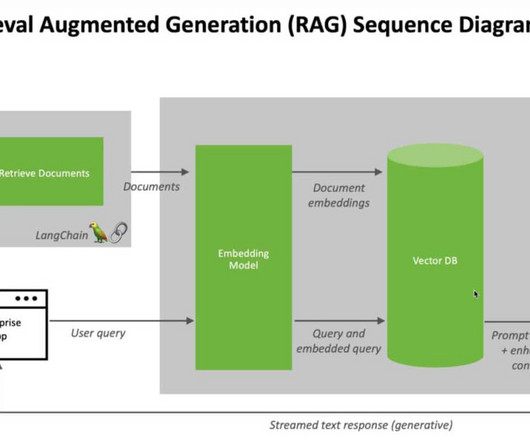

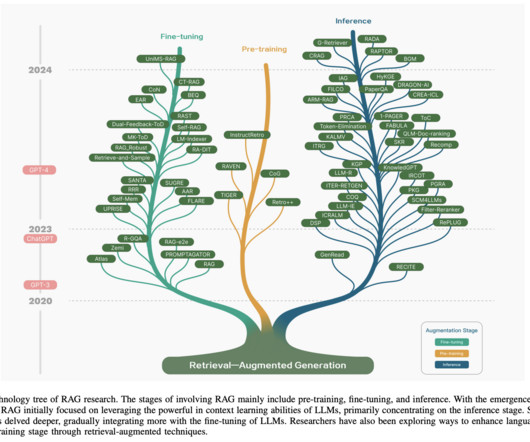

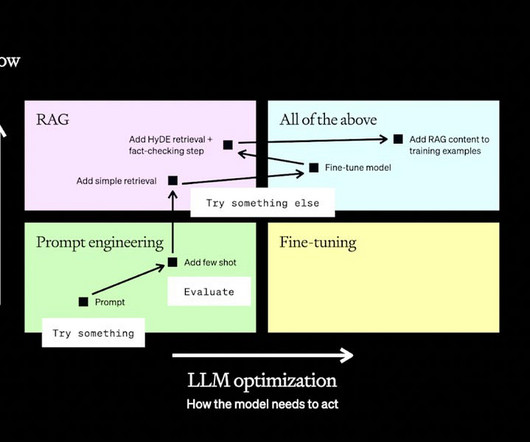

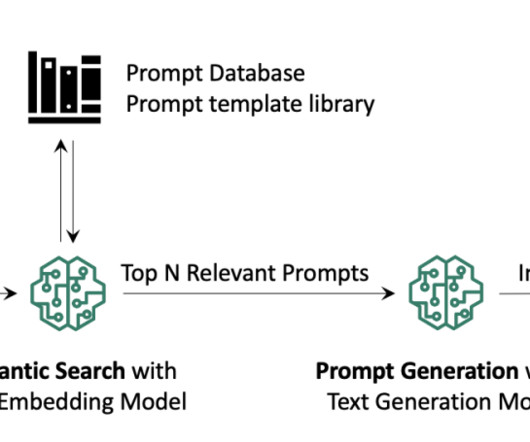

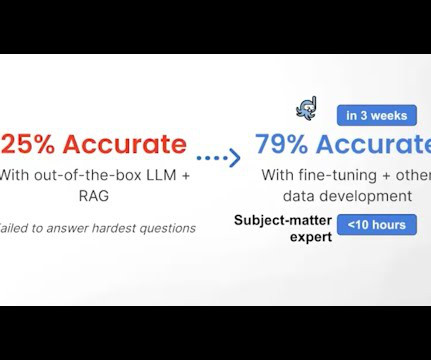

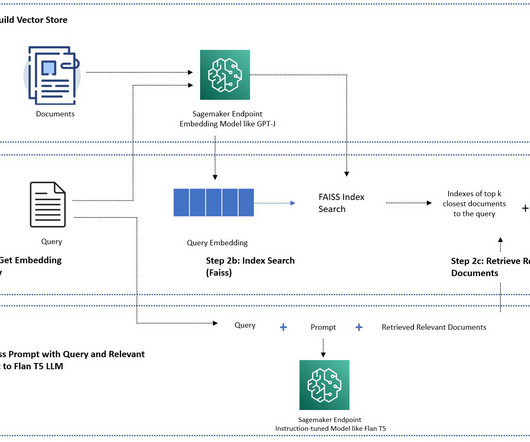

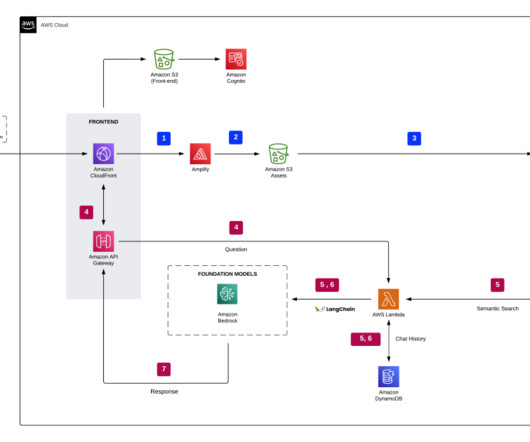

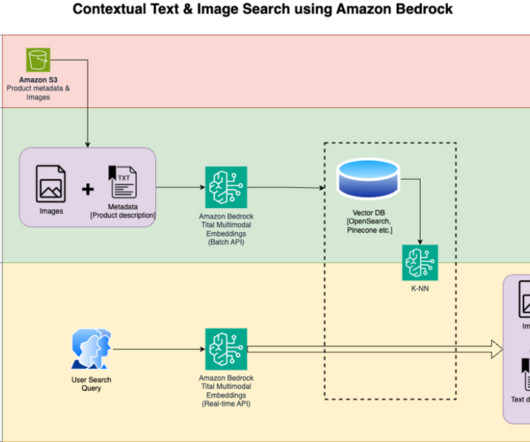

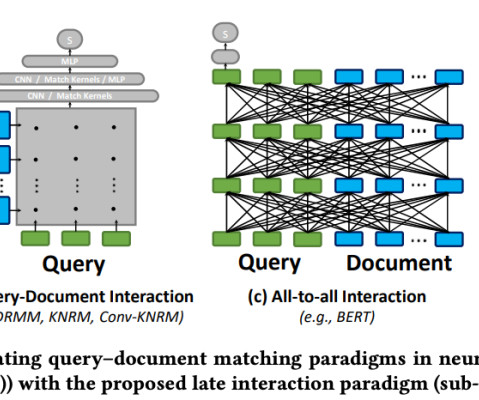

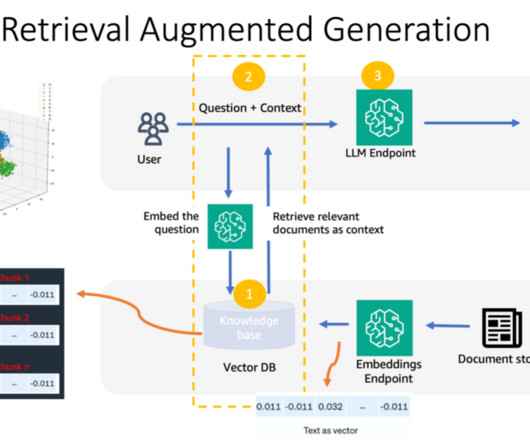

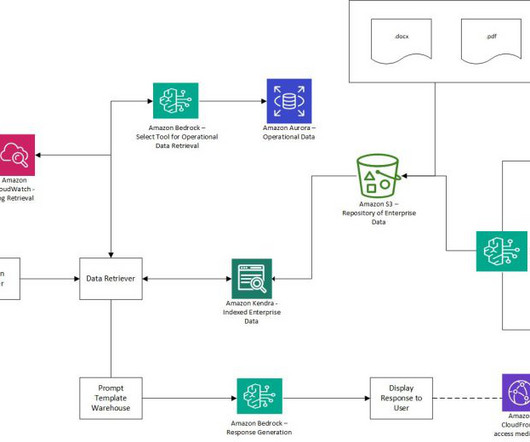

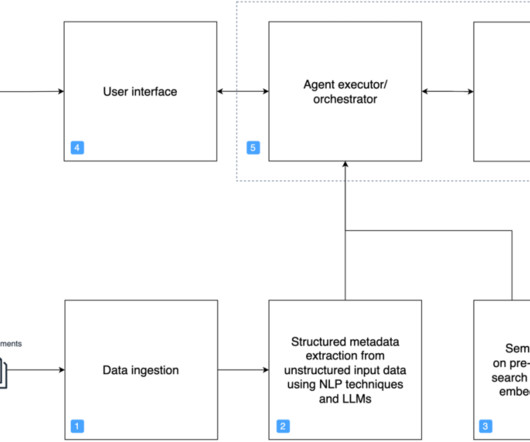

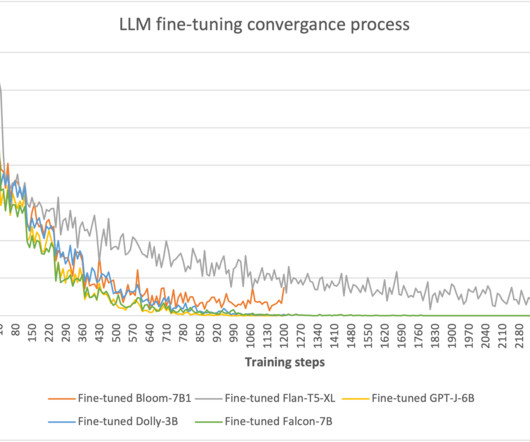

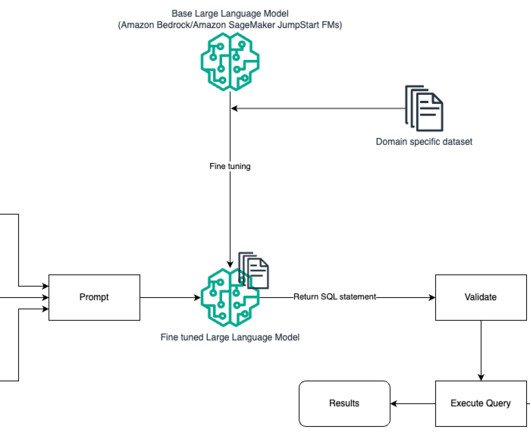

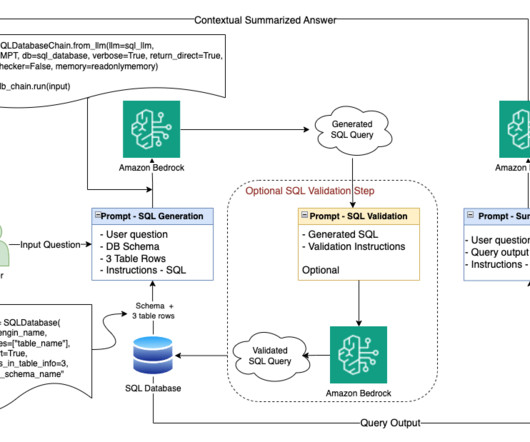

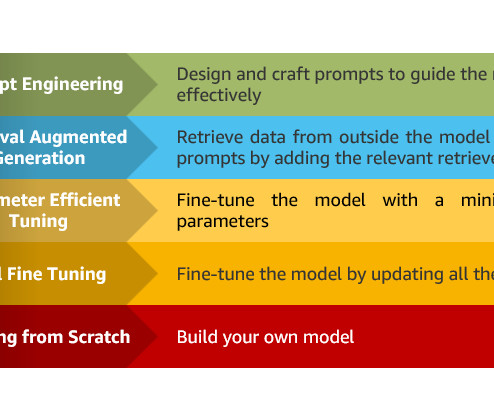

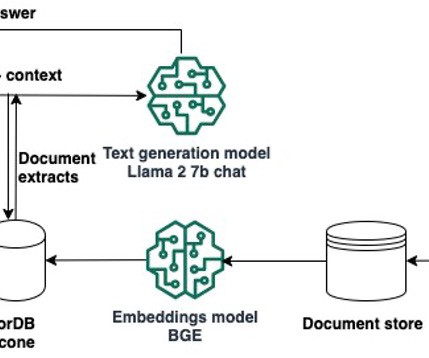

Enter RAFT (Retrieval-Augmented Fine-Tuning), a novel approach that combines the strengths of retrieval-augmented generation (RAG) and fine-tuning for domain-specific question answering. In RAG, the retrieval process starts with the user's query.
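To make the retrieval step concrete, here is a minimal sketch of how a RAG pipeline might go from a user's query to a prompt. It is an illustration under stated assumptions, not the RAFT authors' implementation: the bag-of-words cosine similarity stands in for the learned embedding model a real system would typically use, and the names `retrieve`, `build_prompt`, and `DOCS`, along with the sample documents, are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical toy corpus; a real system would index domain documents.
DOCS = [
    "RAFT fine-tunes a language model to answer questions using retrieved documents.",
    "Retrieval-augmented generation retrieves documents relevant to a query before generating an answer.",
    "The Eiffel Tower is located in Paris.",
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query, best first."""
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vec, Counter(tokenize(doc))), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(query, retrieved):
    """Place the retrieved documents ahead of the question as context."""
    context = "\n".join(f"- {doc}" for doc in retrieved)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


if __name__ == "__main__":
    question = "How does retrieval-augmented generation use a query?"
    print(build_prompt(question, retrieve(question, DOCS, k=2)))
```

The resulting prompt would then be passed to a language model. In a setup like RAFT, that model is additionally fine-tuned so that it learns to answer from the retrieved context rather than relying on its parametric knowledge alone.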
