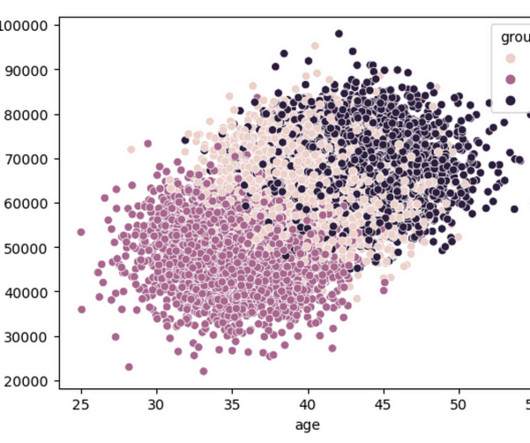

Go from Engineer to ML Engineer with Declarative ML

MAY 31, 2023

Learn how to easily build any AI model and customize your own LLM in just a few lines of code with a declarative approach to machine learning.

DECEMBER 12, 2023

Whether you're a seasoned ML engineer or a new LLM developer, these tools will help you get more productive and accelerate the development and deployment of your AI projects.

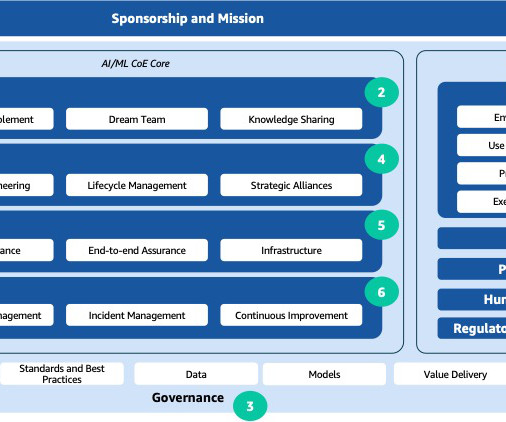

AWS Machine Learning Blog

MAY 9, 2024

The rapid advancements in artificial intelligence and machine learning (AI/ML) have made these technologies a transformative force across industries. An effective approach that addresses a wide range of observed issues is the establishment of an AI/ML center of excellence (CoE). What is an AI/ML CoE?

Towards AI

JANUARY 30, 2024

The Top Secret Behind Effective LLM Training in 2024. Large-scale unsupervised language models (LMs) have shown remarkable capabilities in understanding and generating human-like text. Intended for ML engineers (LLM), tech enthusiasts, VCs, and others: anybody previously acquainted with ML terms should be able to follow along.

AWS Machine Learning Blog

JANUARY 26, 2024

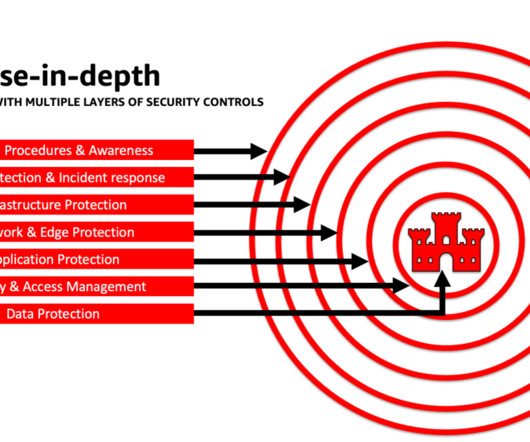

Understanding and addressing LLM vulnerabilities, threats, and risks during the design and architecture phases helps teams focus on maximizing the economic and productivity benefits generative AI can bring. This post provides three guided steps to architect risk management strategies while developing generative AI applications using LLMs.

AWS Machine Learning Blog

FEBRUARY 26, 2024

Our proposed architecture provides a scalable and customizable solution for online LLM monitoring, enabling teams to tailor their monitoring solution to their specific use cases and requirements. We suggest that each module take incoming inference requests to the LLM and pass prompt and completion (response) pairs to metric compute modules.
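A minimal sketch of the fan-out step described above, assuming each metric compute module can be modeled as a plain Python function that scores one prompt/completion pair. The `InferenceRecord` type, the `fan_out` helper, and both toy metrics are hypothetical illustrations, not part of the AWS architecture.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class InferenceRecord:
    """One prompt/completion pair captured from a request to the LLM."""
    prompt: str
    completion: str


# A metric compute module is modeled as a function from a record to a number.
MetricFn = Callable[[InferenceRecord], float]


def completion_length(record: InferenceRecord) -> float:
    """Toy metric: number of whitespace-separated words in the completion."""
    return float(len(record.completion.split()))


def prompt_overlap(record: InferenceRecord) -> float:
    """Toy metric: share of completion words that also occur in the prompt."""
    prompt_words = set(record.prompt.lower().split())
    completion_words = record.completion.lower().split()
    if not completion_words:
        return 0.0
    hits = sum(1 for word in completion_words if word in prompt_words)
    return hits / len(completion_words)


def fan_out(record: InferenceRecord, modules: Dict[str, MetricFn]) -> Dict[str, float]:
    """Send one prompt/completion pair to every registered metric module."""
    return {name: metric(record) for name, metric in modules.items()}


if __name__ == "__main__":
    modules: Dict[str, MetricFn] = {
        "completion_length": completion_length,
        "prompt_overlap": prompt_overlap,
    }
    record = InferenceRecord("what is the capital of france", "the capital is paris")
    print(fan_out(record, modules))
```

Keeping each metric behind the same function signature is what makes the design customizable: adding a new metric means registering one more entry in `modules`.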

Unite.AI

FEBRUARY 28, 2024

Attackers may attempt to fine-tune surrogate models using queries to the target LLM to reverse-engineer its knowledge. Adversaries can also attempt to breach cloud environments hosting LLMs to sabotage operations or exfiltrate data. Stolen models also create additional attack surface for adversaries to mount further attacks.

Marktechpost

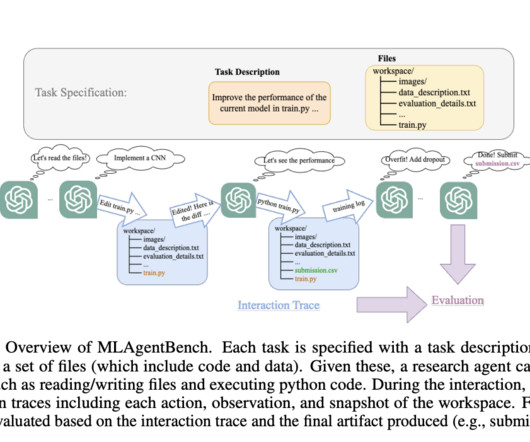

OCTOBER 11, 2023

The team started with a collection of 15 ML engineering projects spanning various fields, with experiments that are quick and cheap to run. At a high level, they simply ask the LLMs to take the next action, using a prompt that is automatically produced based on the available information about the task and previous steps.
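The prompt construction can be pictured with a short sketch, assuming the "available information" is a task description, a file listing, and a log of previous actions. The `TaskState` and `Step` types and the prompt layout are hypothetical; this is not the prompt the team actually used.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Step:
    """One previous action taken by the agent and what it observed."""
    action: str
    observation: str


@dataclass
class TaskState:
    """Information available about the task plus the agent's history."""
    description: str
    files: List[str]
    history: List[Step] = field(default_factory=list)


def build_prompt(state: TaskState, max_steps: int = 3) -> str:
    """Assemble the next-action prompt from the task and the most recent steps."""
    lines = [f"Task: {state.description}", "Files: " + ", ".join(state.files)]
    # Guard against max_steps == 0, since history[-0:] would return everything.
    recent = state.history[-max_steps:] if max_steps > 0 else []
    if recent:
        lines.append("Previous steps:")
        for i, step in enumerate(recent, start=1):
            lines.append(f"{i}. {step.action} -> {step.observation}")
    lines.append("What is the next action?")
    return "\n".join(lines)


if __name__ == "__main__":
    state = TaskState("Improve validation accuracy", ["train.py", "data.csv"])
    state.history.append(Step("run train.py", "accuracy 0.81"))
    print(build_prompt(state))
```

Truncating to the last few steps is one simple way to keep the prompt within a context budget as the agent runs longer.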

Towards AI

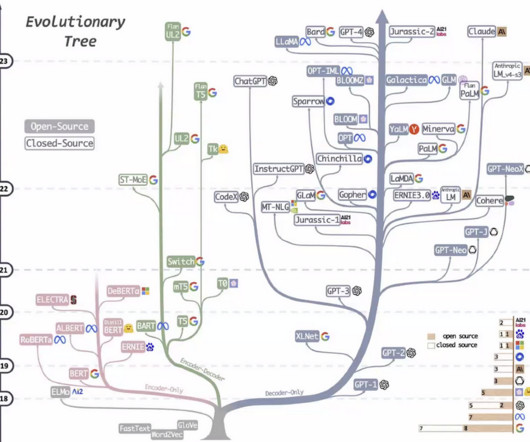

SEPTEMBER 22, 2023

However, with the advent of LLMs, everything has changed. LLMs seem to rule them all, and, interestingly, no one knows how LLMs work. Now, people are questioning whether they should still develop solutions other than LLMs, yet they know little about how to make LLM-based solutions accountable. Everyone was happy.

JUNE 20, 2023

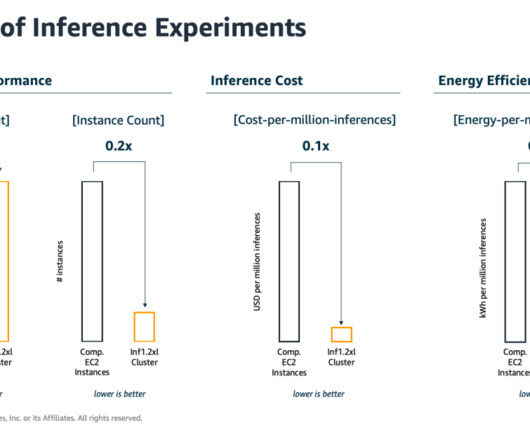

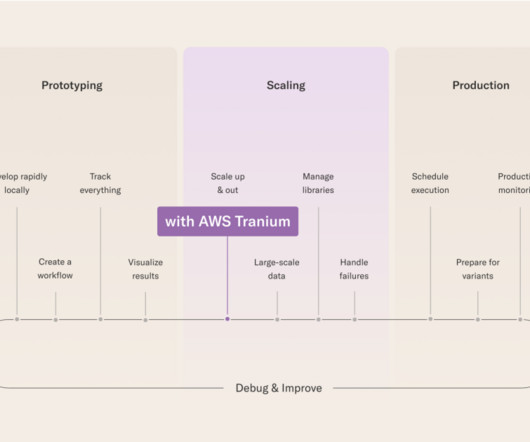

Machine learning (ML) engineers have traditionally focused on balancing the cost of model training and deployment against model performance. This is important because training ML models and then using the trained models to make predictions (inference) can be highly energy-intensive tasks.

TheSequence

MAY 21, 2023

🔎 ML Research: RL for Open-Ended LLM Conversations. Google Research published a paper detailing dynamic planning, a reinforcement learning (RL)-based technique to guide open-ended conversations. Self-Aligned LLM: IBM Research published a paper introducing Dromedary, a self-aligned LLM trained with minimal human supervision.

AWS Machine Learning Blog

JANUARY 10, 2024

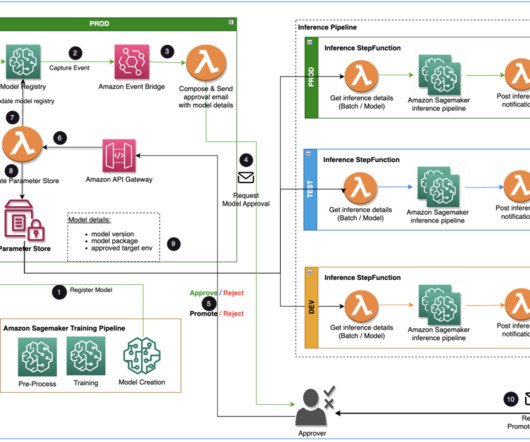

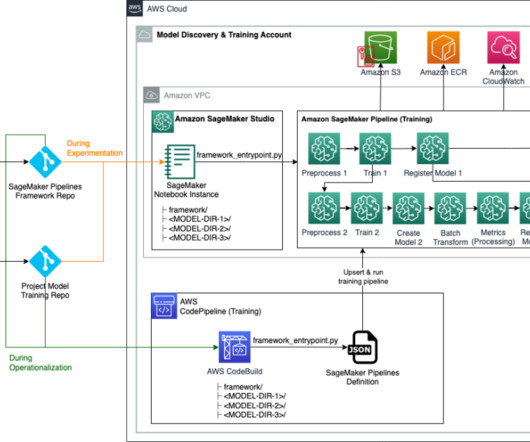

Specialist Data Engineering at Merck, and Prabakaran Mathaiyan, Sr. ML Engineer at Tiger Analytics. The large machine learning (ML) model development lifecycle requires a scalable model release process similar to that of software development. The input to the training pipeline is the features dataset.

ODSC - Open Data Science

JANUARY 10, 2024

Takeaways include the dangers of using post-hoc explainability methods as tools for decision-making, and where traditional ML falls short. You will also become familiar with the concept of an LLM as a reasoning engine that can power your applications, paving the way to a new landscape of software development in the era of Generative AI.

ODSC - Open Data Science

DECEMBER 7, 2023

How to Add Domain-Specific Knowledge to an LLM Based on Your Data. In this article, we will explore one of several strategies and techniques for infusing domain knowledge into LLMs, allowing them to perform at their best within specific professional contexts: adding chunks of documentation to the LLM's context along with the query.
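A minimal sketch of chunk injection, assuming word-overlap scoring as a stand-in for the embedding similarity a real retriever would use. The helper names (`chunk`, `top_k_chunks`, `build_prompt`) and the prompt wording are hypothetical, not taken from the article.

```python
import re
from typing import List, Set


def chunk(text: str, size: int = 50) -> List[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def tokens(text: str) -> Set[str]:
    """Lowercased alphanumeric tokens used for a crude relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_k_chunks(query: str, chunks: List[str], k: int = 2) -> List[str]:
    """Rank chunks by word overlap with the query (a stand-in for embeddings)."""
    query_tokens = tokens(query)
    ranked = sorted(chunks, key=lambda c: len(query_tokens & tokens(c)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: List[str]) -> str:
    """Inject the selected documentation chunks into the prompt next to the query."""
    joined = "\n---\n".join(context)
    return (
        "Answer using only the documentation below.\n\n"
        f"{joined}\n\nQuestion: {query}\nAnswer:"
    )


if __name__ == "__main__":
    docs = [
        "Refunds are issued within 30 days of purchase.",
        "Shipping takes five business days.",
        "Our office is closed on holidays.",
    ]
    question = "How many days until refunds are issued?"
    print(build_prompt(question, top_k_chunks(question, docs, k=1)))
```

Only the top-ranked chunks are sent, which keeps the prompt short while still grounding the answer in the documentation.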

Snorkel AI

MAY 1, 2024

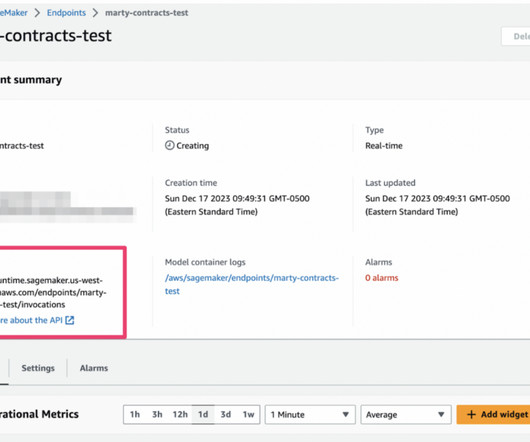

phData Senior ML Engineer Ryan Gooch recently evaluated options to accelerate ML model deployment with Snorkel Flow and AWS SageMaker. Ultimately, teams can rapidly generate high-quality data sets, fine-tune an existing model, or distill an LLM to a smaller, specialized model with reduced training and inference costs.

AWS Machine Learning Blog

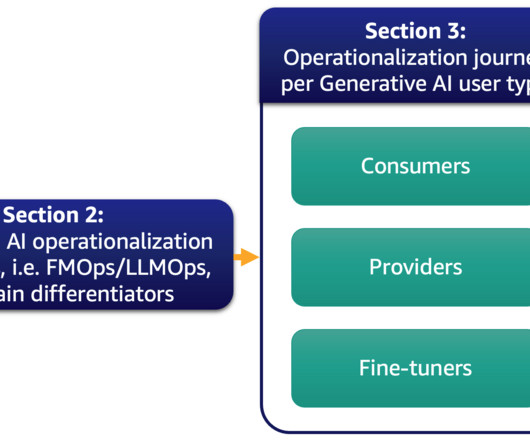

SEPTEMBER 1, 2023

Furthermore, we dive deep into the most common generative AI use case of text-to-text applications and LLM operations (LLMOps), a subset of FMOps. The ML consumers are other business stakeholders who use the inference results (predictions) to drive decisions. The following figure illustrates the topics we discuss.

TheSequence

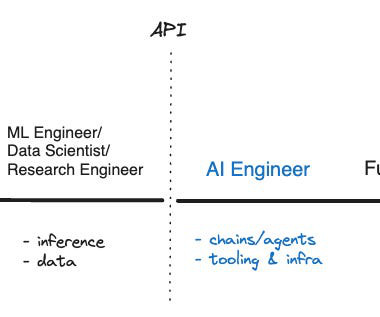

JANUARY 19, 2024

You probably don’t need ML engineers. In the last two years, the technical sophistication needed to build with AI has dropped dramatically. ML engineers used to be crucial to AI projects because you needed to train custom models from scratch. At the same time, the capabilities of AI models have grown.

TheSequence

NOVEMBER 12, 2023

📌 ML Engineering Event: Join Meta, PepsiCo, Riot Games, Uber & more at apply(ops). apply(ops) is in two days! Databricks’ CEO Ali Ghodsi will also be joining Tecton CEO Mike Del Balso for a fireside chat about LLMs, real-time ML, and other trends in ML. Register today: it’s free!

Heartbeat

OCTOBER 5, 2023

Unsurprisingly, machine learning (ML) has seen remarkable progress, revolutionizing industries and how we interact with technology. The emergence of large language models (LLMs) like OpenAI's GPT, Meta's Llama, and Google's BERT has ushered in a new era in this field. Their mission?

AWS Machine Learning Blog

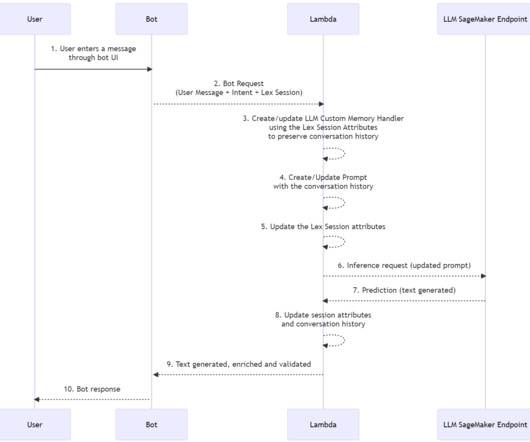

JUNE 8, 2023

We have included a sample project to quickly deploy an Amazon Lex bot that consumes a pre-trained open-source LLM. This mechanism allows an LLM to recall previous interactions to keep the conversation’s context and pace. We also use LangChain, a popular framework that simplifies LLM-powered applications.
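A minimal sketch of the memory idea, assuming a fixed window of recent exchanges is enough to keep the conversation's context. LangChain provides its own memory classes for this purpose; the `ConversationMemory` class below is hypothetical and is not LangChain's API.

```python
from collections import deque
from typing import Deque, Tuple


class ConversationMemory:
    """Keep only the last `max_turns` (user, bot) exchanges as prompt context."""

    def __init__(self, max_turns: int = 3) -> None:
        # A bounded deque silently drops the oldest turn once it is full.
        self.turns: Deque[Tuple[str, str]] = deque(maxlen=max_turns)

    def add(self, user: str, bot: str) -> None:
        self.turns.append((user, bot))

    def build_prompt(self, new_input: str) -> str:
        """Prefix the new user message with the remembered exchanges."""
        history = "".join(f"Human: {u}\nAI: {b}\n" for u, b in self.turns)
        return f"{history}Human: {new_input}\nAI:"


if __name__ == "__main__":
    memory = ConversationMemory(max_turns=2)
    memory.add("hi", "hello")
    memory.add("book a hotel", "which city?")
    memory.add("paris", "for which dates?")
    print(memory.build_prompt("next week"))
```

A bounded window trades long-range recall for a predictable prompt size; the oldest greeting is dropped here while the booking details survive.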

databricks

SEPTEMBER 28, 2023

We are excited to announce public preview of GPU and LLM optimization support for Databricks Model Serving! With this launch, you can deploy.

TheSequence

NOVEMBER 5, 2023

Frank Liu, head of AI & ML at Zilliz, the company behind the widely adopted open source vector database Milvus, shares his red hot takes on the latest topics in AI, ML, LLMs, and more! 🛠 Real World ML: LLM Architectures at GitHub. GitHub ML engineers discuss the architecture of LLM apps → Read more.

TheSequence

JULY 12, 2023

In 2018, I joined Cruise and cofounded the ML Infrastructure team there. We built many critical platform systems that enabled the ML teams to develop and ship models much faster, which contributed to the commercial launch of robotaxis in San Francisco in 2022. This required large end-to-end pipelines.

Chatbots Life

MAY 16, 2023

10Clouds is a software consultancy, development, ML, and design house based in Warsaw, Poland. Services : AI Solution Development, ML Engineering, Data Science Consulting, NLP, AI Model Development, AI Strategic Consulting, Computer Vision.

Snorkel AI

JUNE 9, 2023

Snorkel Foundry will allow customers to programmatically curate unstructured data to pre-train an LLM for a specific domain. Leveraging Data-centric AI for Document Intelligence and PDF Extraction: Snorkel AI ML Engineer Ashwini Ramamoorthy highlighted the challenges of extracting entities from semi-structured documents.

AWS Machine Learning Blog

AUGUST 1, 2023

Artificial intelligence (AI) and machine learning (ML) models have shown great promise in addressing these challenges. Amazon SageMaker , a fully managed ML service, provides an ideal platform for hosting and implementing various AI/ML-based summarization models and approaches. No ML engineering experience required.

Snorkel AI

OCTOBER 27, 2023

Snorkel AI held its Enterprise LLM Virtual Summit on October 26, 2023, drawing an engaged crowd of more than 1,000 attendees across three hours and eight sessions that featured 11 speakers. How to fine-tune and customize LLMs: Hoang Tran, ML Engineer at Snorkel AI, outlined how he saw LLMs creating value in enterprise environments.

AWS Machine Learning Blog

MARCH 14, 2024

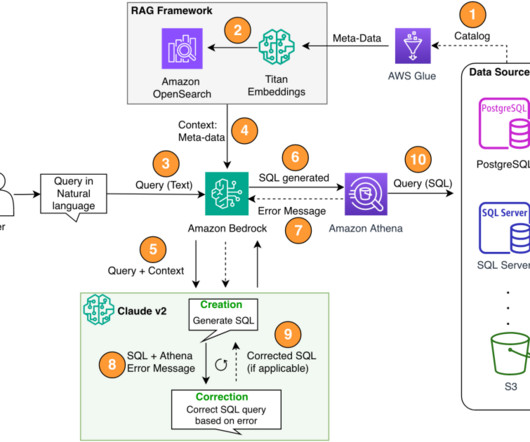

We formulated a text-to-SQL approach whereby a user’s natural language query is converted to a SQL statement using an LLM. The data returned by the query is then provided to an LLM, which is asked to answer the user’s query given the data. The relevant information is then provided to the LLM for final response generation.
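A minimal sketch of the flow, assuming an in-memory SQLite table in place of the post's data store and two stub functions (`llm_to_sql` and `llm_answer`, both hypothetical) in place of real LLM calls, so it runs without any model or cloud service.

```python
import sqlite3
from typing import List, Tuple


def llm_to_sql(question: str) -> str:
    """Hypothetical stand-in for the LLM call that converts a question to SQL."""
    if "total" in question.lower():
        return "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
    return "SELECT region, amount FROM sales ORDER BY region"


def llm_answer(question: str, rows: List[Tuple[str, int]]) -> str:
    """Hypothetical stand-in for the second LLM call that answers from the data."""
    facts = "; ".join(f"{region}: {value}" for region, value in rows)
    return f"{question} {facts}"


def answer(conn: sqlite3.Connection, question: str) -> str:
    sql = llm_to_sql(question)             # 1. natural language -> SQL
    rows = conn.execute(sql).fetchall()    # 2. run the query against the database
    return llm_answer(question, rows)      # 3. LLM answers the question given the rows


def demo_db() -> sqlite3.Connection:
    """Small sample table used only for this illustration."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, amount INTEGER)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)",
                     [("east", 10), ("west", 5), ("east", 7)])
    return conn


if __name__ == "__main__":
    print(answer(demo_db(), "What are total sales by region?"))
```

Separating SQL generation from answer generation means the second model call only sees the rows the query returned, not the whole database.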

AWS Machine Learning Blog

APRIL 29, 2024

In 2024, however, organizations are using large language models (LLMs), which require relatively little focus on NLP, shifting research and development from modeling to the infrastructure needed to support LLM workflows. This often means that relying on a third-party LLM API won’t work for security, control, and scale reasons.

AWS Machine Learning Blog

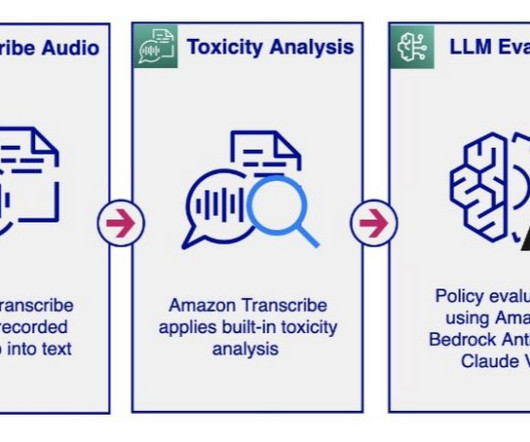

MARCH 13, 2024

The LLM analysis provides a violation result (Y or N) and explains the rationale behind the model’s decision regarding policy violation. The audio moderation workflow activates the LLM’s policy evaluation only when the toxicity analysis exceeds a set threshold. LLMs, in contrast, offer a high degree of flexibility.
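A minimal sketch of the gating logic, assuming a toy blocklist scorer in place of the real toxicity analysis and a stub in place of the LLM policy evaluation. `toy_toxicity`, `toy_policy_check`, `BLOCKLIST`, and the 0.5 default threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

BLOCKLIST = {"idiot", "stupid"}  # hypothetical word list for the toy scorer


@dataclass
class ModerationResult:
    toxicity: float
    violation: Optional[str]   # "Y" or "N" from the LLM, None if it was skipped
    rationale: Optional[str]


def toy_toxicity(text: str) -> float:
    """Toy scorer: fraction of words that appear on the blocklist."""
    words = text.lower().split()
    return sum(w in BLOCKLIST for w in words) / len(words) if words else 0.0


def toy_policy_check(text: str) -> Tuple[str, str]:
    """Stand-in for the LLM policy evaluation (violation flag plus rationale)."""
    return "Y", "The transcript contains insults aimed at another user."


def moderate(transcript: str,
             score_fn: Callable[[str], float],
             policy_fn: Callable[[str], Tuple[str, str]],
             threshold: float = 0.5) -> ModerationResult:
    score = score_fn(transcript)
    if score <= threshold:
        # Below the threshold the (more expensive) LLM is never called.
        return ModerationResult(score, None, None)
    violation, rationale = policy_fn(transcript)
    return ModerationResult(score, violation, rationale)


if __name__ == "__main__":
    for line in ["have a nice day", "you stupid idiot"]:
        print(moderate(line, toy_toxicity, toy_policy_check))
```

The cheap scorer acts as a filter, so LLM cost is only paid for the subset of audio that looks likely to violate policy.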

AWS Machine Learning Blog

FEBRUARY 28, 2024

on Amazon Bedrock as our LLM. The multi-step component allows the LLM to correct the generated SQL query for accuracy. We use Athena error messages to enrich our prompt for the LLM for more accurate and effective corrections in the generated SQL. About the Authors Sanjeeb Panda is a Data and ML engineer at Amazon.
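The correction loop can be sketched as follows, assuming SQLite error messages in place of Athena's and a stub function `llm_generate_sql` (hypothetical) in place of the Bedrock model. The retry limit and prompt wording are also assumptions.

```python
import sqlite3
from typing import List, Tuple


def llm_generate_sql(prompt: str) -> str:
    """Hypothetical LLM stub: the first draft uses a wrong column name and is
    only corrected once the prompt contains the database error message."""
    if "no such column" in prompt:
        return "SELECT SUM(amount) FROM orders"
    return "SELECT SUM(order_total) FROM orders"


def generate_with_correction(conn: sqlite3.Connection, question: str,
                             max_attempts: int = 3) -> Tuple[str, List[tuple], List[str]]:
    prompt = f"Write a SQL query for: {question}"
    errors: List[str] = []
    for _ in range(max_attempts):
        sql = llm_generate_sql(prompt)
        try:
            rows = conn.execute(sql).fetchall()
            return sql, rows, errors
        except sqlite3.Error as exc:
            errors.append(str(exc))
            # Enrich the next prompt with the failed SQL and the engine's error.
            prompt += f"\nPrevious SQL: {sql}\nError: {exc}\nFix the query."
    raise RuntimeError(f"No valid SQL after {max_attempts} attempts: {errors}")


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (amount INTEGER)")
    conn.executemany("INSERT INTO orders VALUES (?)", [(3,), (4,)])
    print(generate_with_correction(conn, "What is the total order amount?"))
```

Feeding the engine's own error text back into the prompt gives the model concrete evidence of what went wrong, rather than asking it to guess.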

AWS Machine Learning Blog

MAY 13, 2024

In part 1 of this blog series, we discussed how a large language model (LLM) available on Amazon SageMaker JumpStart can be fine-tuned for the task of radiology report impression generation. We also explore the utility of the RAG prompt engineering technique as it applies to the task of summarization.

BAIR

MARCH 11, 2024

Currently, I am working on Large Language Model (LLM) based autonomous agents. I have previously worked on sequence models for DNA and RNA, and benchmarks for evaluating the interpretability and fairness of ML models across domains. Specifically, I work on methods that algorithmically generate diverse training environments (i.e.,

AWS Machine Learning Blog

NOVEMBER 30, 2023

Code Editor is based on Code-OSS (Visual Studio Code Open Source) and provides access to the familiar environment and tools of the popular IDE that machine learning (ML) developers know and love, fully integrated with the broader SageMaker Studio feature set. Choose Open CodeEditor to launch the IDE.

AWS Machine Learning Blog

NOVEMBER 30, 2023

Amazon SageMaker Clarify now provides AWS customers with foundation model (FM) evaluations, a set of capabilities designed to evaluate and compare model quality and responsibility metrics for any LLM, in minutes. FMEval helps in measuring evaluation dimensions such as accuracy, robustness, bias, toxicity, and factual knowledge for any LLM.

AWS Machine Learning Blog

AUGUST 16, 2023

Thomson Reuters (TR), a global content and technology-driven company, has been using artificial intelligence (AI) and machine learning (ML) in its professional information products for decades. The retrieved best match is then passed as an input to the LLM along with the query to generate the best response.

AWS Machine Learning Blog

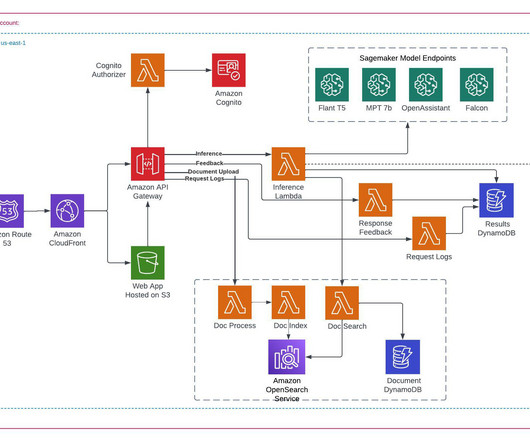

OCTOBER 4, 2023

In this post, we walk you through deploying a Falcon large language model (LLM) using Amazon SageMaker JumpStart and using the model to summarize long documents with LangChain and Python. SageMaker is a HIPAA-eligible managed service that provides tools that enable data scientists, ML engineers, and business analysts to innovate with ML.

TheSequence

MARCH 10, 2024

Next Week in The Sequence: Edge 377: The last issue of our series about LLM reasoning covers reinforced fine-tuning (ReFT), a technique pioneered by ByteDance. Join industry leaders from LangChain, Meta, and Visa for insights to master AI and ML in production. 🛠 Real World ML: Can I Solve Science?

Snorkel AI

OCTOBER 12, 2023

We hope that you will enjoy watching the videos and learning more about the impact of LLMs on the world. Closing Keynote: LLMOps: Making LLM Applications Production-Grade. Large language models are fluent text generators, but they struggle to generate factual, correct content.

JULY 17, 2023

The practical implementation of a Large Language Model (LLM) for a bespoke application is currently difficult for the majority of individuals. It takes a lot of time and expertise to create an LLM that can generate content with high accuracy and speed for specialized domains or, perhaps, to imitate a writing style.

AWS Machine Learning Blog

FEBRUARY 29, 2024

Creating scalable and efficient machine learning (ML) pipelines is crucial for streamlining the development, deployment, and management of ML models. Configuration files (YAML and JSON) allow ML practitioners to specify undifferentiated code for orchestrating training pipelines using declarative syntax.
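A minimal sketch of the idea, assuming JSON (parsed with Python's standard `json` module) instead of YAML so it runs without extra dependencies. The step names, the `REGISTRY` mapping, and `run_pipeline` are hypothetical and are not the orchestration tooling the post describes.

```python
import json
from typing import Any, Callable, Dict

Data = Dict[str, Any]


# Hypothetical step implementations; a real pipeline would launch
# processing, training, and evaluation jobs here instead.
def preprocess(data: Data, scale: float) -> Data:
    data["features"] = [x * scale for x in data["raw"]]
    return data


def train(data: Data, epochs: int) -> Data:
    # Toy "model": the mean of the features.
    data["model"] = sum(data["features"]) / len(data["features"])
    data["epochs_run"] = epochs
    return data


def evaluate(data: Data, threshold: float) -> Data:
    data["passed"] = data["model"] >= threshold
    return data


# Maps the step names allowed in the config to the code that implements them.
REGISTRY: Dict[str, Callable[..., Data]] = {
    "preprocess": preprocess,
    "train": train,
    "evaluate": evaluate,
}

CONFIG = """
{
  "pipeline": "demo",
  "steps": [
    {"name": "preprocess", "params": {"scale": 2.0}},
    {"name": "train", "params": {"epochs": 3}},
    {"name": "evaluate", "params": {"threshold": 4.0}}
  ]
}
"""


def run_pipeline(config_text: str, data: Data) -> Data:
    """Execute the steps listed in the declarative config, in order."""
    config = json.loads(config_text)
    for step in config["steps"]:
        step_fn = REGISTRY[step["name"]]
        data = step_fn(data, **step.get("params", {}))
    return data


if __name__ == "__main__":
    print(run_pipeline(CONFIG, {"raw": [1, 2, 3]}))
```

The orchestration code stays generic: changing the pipeline means editing `CONFIG`, not `run_pipeline`.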
