Hyperparameter Tuning in Machine Learning: A Key to Optimizing Model Performance

Heartbeat

SEPTEMBER 22, 2023

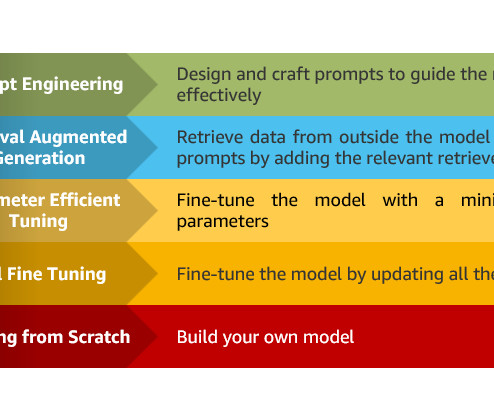

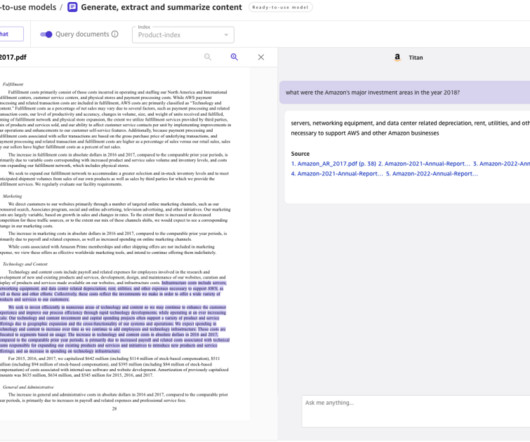

I write about Machine Learning on Medium || GitHub || Kaggle || LinkedIn.

Introduction

In the world of machine learning, where algorithms learn from data to make predictions, it’s important to get the best out of our models.

Machine Learning Lifecycle (Image by Author)