Generative AI Pushed Us to the AI Tipping Point

Unite.AI

OCTOBER 26, 2023

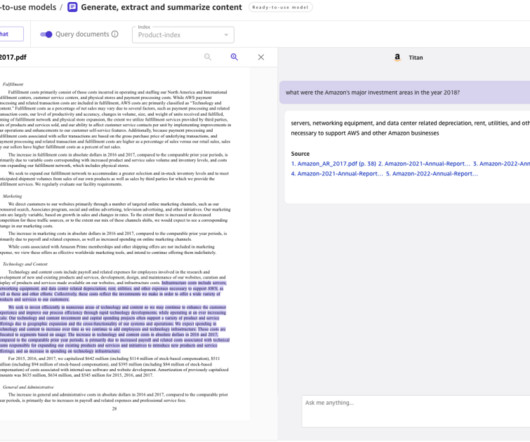

Before the accessibility of Generative AI (GenAI) pushed artificial intelligence (AI) into mainstream popularity, data integration and staging for machine learning were among the trendier business priorities.